How Adobe’s latest updates will revolutionise the way creatives work

Artists and creators will see their tools in a whole new way.

Every autumn, Adobe MAX is the event to watch to see what the next year in creativity will bring. The creative software giant behind Firefly, Photoshop, Illustrator and Premiere announces new tools and features, while artists and creators in different fields showcase the apps in action to provide inspiration.

This year’s event has been no exception, with a host of game-changing new features announced across Adobe’s ecosystem. What’s more, they have the power to fundamentally change how creators, well, create.

Here’s what’s new - and why it’s reshaping the future of creative workflows and content production.

AI-powered image editing is here with Firefly Image Model 5

One of the most exciting announcements at Adobe MAX was the launch of Firefly Image Model 5. As you would expect, this builds on Image Model 4 with improved fidelity and photorealism, particularly in lighting and textures. It also improves on the relatively low resolution typical of AI-generated imagery, providing native 4MP images without upscaling.

But more significantly, Firefly Image Model 5 also introduces powerful image-editing capabilities. One of the biggest challenges with AI imagery has been the inability to make precise changes while maintaining consistency in all the elements you don’t want to modify. The new model brings a Prompt to Edit tool that lets you describe how you want to edit an image using natural language commands to add, remove, or modify elements.

And it’s going to get more granular. Adobe’s working on Layered Image Editing for Firefly. This will allow precise, context-aware compositing of individual layers in an image, keeping changes coherent while making it easier to get the exact result you’re after.

There’s also a tool in the works to speed up handling large volumes of images. Firefly Creative Production, in a private beta for now, allows pros to power through thousands of images at once, automating background replacement, colour grading and cropping all via a no-code interface.

Tedious tasks just got quicker: time-saving new AI tools in Premiere, Firefly, Photoshop and Lightroom

Adobe MAX also saw announcements for smaller features that make a big difference when it comes to taking the hassle out of repetitive, time-consuming tasks.

For video production, Premiere has a new AI Object Mask (public beta) that automatically identifies and isolates people and objects so they can be edited and tracked without finicky manual rotoscoping, allowing much easier localised colour grading, or the addition of special effects.

Firefly’s web-based video tools are expanding too. Its multi-track video editor is optimised for quick on-the-go transcript-based editing for social media video formats, and Firefly now has Generate Soundtrack and Generate Speech models, both in public betas, enabling creators to produce music and voiceovers for fully edited videos without even leaving Firefly.

For image compositing, AI-powered Harmonize in Photoshop is a game-changing addition for quickly combining elements from different images even if they have radically different lighting or colour tones, saving hours of laborious manual editing.

Photographers can also rejoice at the launch of Assisted Culling in public beta in Lightroom. This speeds up the ordeal of trawling through hundreds of images from a shoot to find the keepers, allowing photographers to get down to editing faster.

The future of creative gen AI will be personal

Adobe has already been turning the possibilities of generative AI into practical tools for creatives and marketers. New features revealed at Adobe MAX show the next step is a move beyond a one-size-fits-all approach to personalised generative AI.

Business users will be able to work with Adobe directly via the new Adobe Firefly Foundry to create tailored generative AI models unique to their brand. Trained on a business’s own IP, these deeply tuned proprietary models will be built on top of existing commercially safe Firefly models. They will be able to generate image, video, audio, vector and even 3D assets, making on-brand consistency a reality at scale across a huge array of AI output.

Custom AI for artists

Customisation isn’t only for enterprise users. A private beta is now introducing customisable models for individual artists and creators directly in the Firefly app and Firefly Boards. This will allow Adobe users to personalise their own AI models to generate new assets consistent with their own art style.

AI assistants will change how creatives use their tools

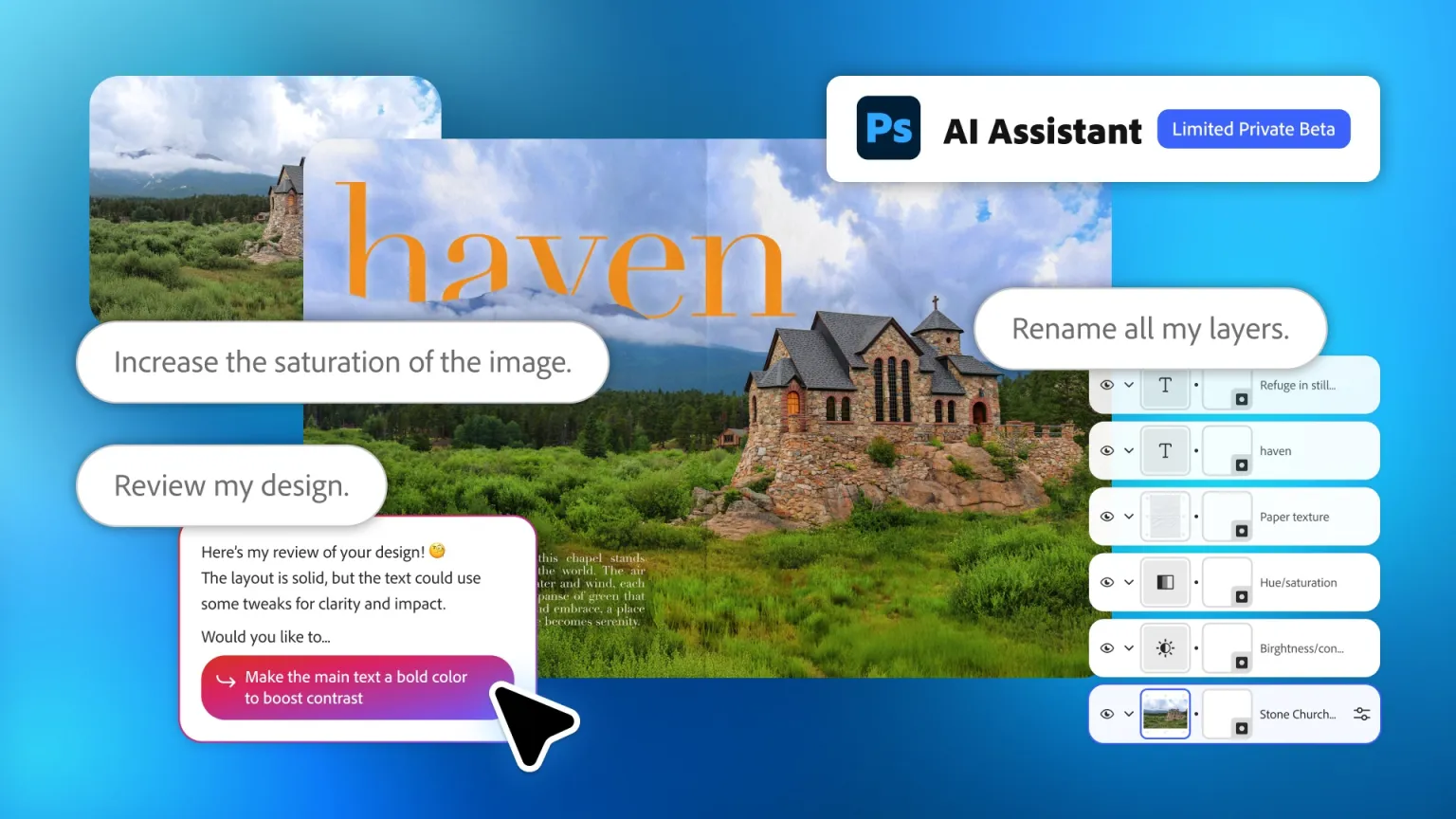

Mastering creative tools used to mean hours of trial and error – navigating dense menus, memorising shortcuts, and bingeing YouTube tutorials. Now, Adobe’s new AI Assistants change that entirely: instead of hunting for the right tool, you simply ask, and the software does the rest.

Project Moonlight will see the addition of agentic AI across apps, powering conversational interfaces that can help users accomplish whatever they want to achieve, from simply renaming layers in Photoshop to creating or reviewing a new design. Users will be able to talk with the assistant in natural language, saving the time it takes to work out how to complete a task.

This is a fundamental change in our relationship with our tools, making them less static, and more reactive and versatile to individual needs. It will even be possible to connect assistants to social media to analyse what content has done well, brainstorm ideas and create new content faster.

Tools that reflect the creative process in Firefly Boards

Creativity has always been collaborative. Teams bounce ideas around, and clients then come in with feedback, but software hasn’t always been well adapted to this reality. Firefly Boards has changed that with tools that better reflect the non-linear nature of the creative process.

The moodboarding app allows teams to collaborate in real time on an infinite canvas, generating and mixing ideas on the fly. New updates in the browser-based app include Rotate Object, which converts 2D images to 3D so objects and people can be positioned in different poses and perspectives. There are also now Presets for one-click image generation in various styles and Generative Text Edit to instantly change the text in a photo for quick mock-ups.

More flexibility than ever

The big takeaway is that creative tools are getting more flexible. We can see this in Adobe’s incorporation of third-party models. You can now choose image and video generation from the likes of Google, Runway, OpenAI, ElevenLabs and more directly in Adobe apps. And Photoshop now has Generative Upscale to 4K thanks to the integration of Topaz Labs’ AI upscaling tech.

We can also see it in how we’re no longer tied to a desk. There are Photoshop and Premiere mobile apps, allowing creatives to work wherever they find themselves without having to wait to get back to a laptop.

Adobe MAX 2025 offered a glimpse of how the future of creative apps will be more flexible, accessible, personalised and generally helpful for creatives. These advances reduce the time it takes to complete the most tedious tasks and open new creative possibilities for others, empowering more creatives and brands to create high-quality content faster across formats. Catch up on what’s been happening at the Adobe MAX website.
