Humans in the metaverse (and how to create them)

The future is already here. A BMW factory in Germany now has an identical digital twin in the metaverse, designed and operated as a real-time 3D simulation in which digital humans and robots perform various real-life tasks.

The real factory is gigantic: it occupies 600,000 square feet, employs 57,000 people, contains tens of thousands of objects and thousands of materials, and produces hundreds of cars simultaneously. The digital factory is an exact copy of the real one.

The way it looks and functions, down to the materials, textures, and lighting, is physically accurate. Thanks to this precision, BMW's teams can now fully simulate complex workflows with humans, robots, and vehicles working in sync.

Engineers can now easily test new solutions and iterate quickly. They can also foresee the outcomes of their experiments before making any actual changes or moving a single screw. And because these experiments happen virtually, as though in a video game, they don't cause lengthy downtime or costly interruptions.

Building, refining, and iterating efficiently, these teams can find optimal solutions far earlier in the design process. This keeps operations smooth, saves time and resources for the company, and makes planning processes much more efficient.

What is the metaverse, and how does it work?

All this is now possible in the metaverse, a virtual-reality space where users can interact with each other and with a digital environment. This 3D space is like a combination of virtual reality, a multiplayer online game, and the internet. Users (via avatars) can move around, work, play, and socialise in real time. The metaverse is becoming a reality fast, especially now that significant parts of our lives have moved online due to the pandemic.

To illustrate interaction in the metaverse, let's return to BMW's digital factory. It 'employs' 120 digital humans, whose job is to simulate and test ergonomics, efficiency, and workflows for the real workers. They can move around the factory and perform various tasks. These avatars were created using real-life data and are physically similar to the real workers (they have unique faces and features that reflect the diversity of real employees).

Omniverse – a doorway to the metaverse

BMW's digital factory is designed and run in Omniverse, an open metaverse platform for 3D simulation and collaboration. The platform makes such complex projects possible in the first place, and it also significantly speeds up production processes. To work in Omniverse, you don't need to switch away from the design and 3D apps you already know and love, because most of them already integrate with the platform. For instance, designers can work simultaneously from applications such as 3ds Max, Blender, Character Creator, Maya, Unreal Engine, or Archicad, among others.

In Omniverse, users can render characters, place them into a simulation, or collaborate with other people in real time. For example, while you are focusing on characters, your team members can work on other elements of the environment. You can all work from different design applications simultaneously, and all the changes will be immediately reflected in Omniverse. These features are especially valuable for distributed and remote teams.

How were BMW's digital workers created?

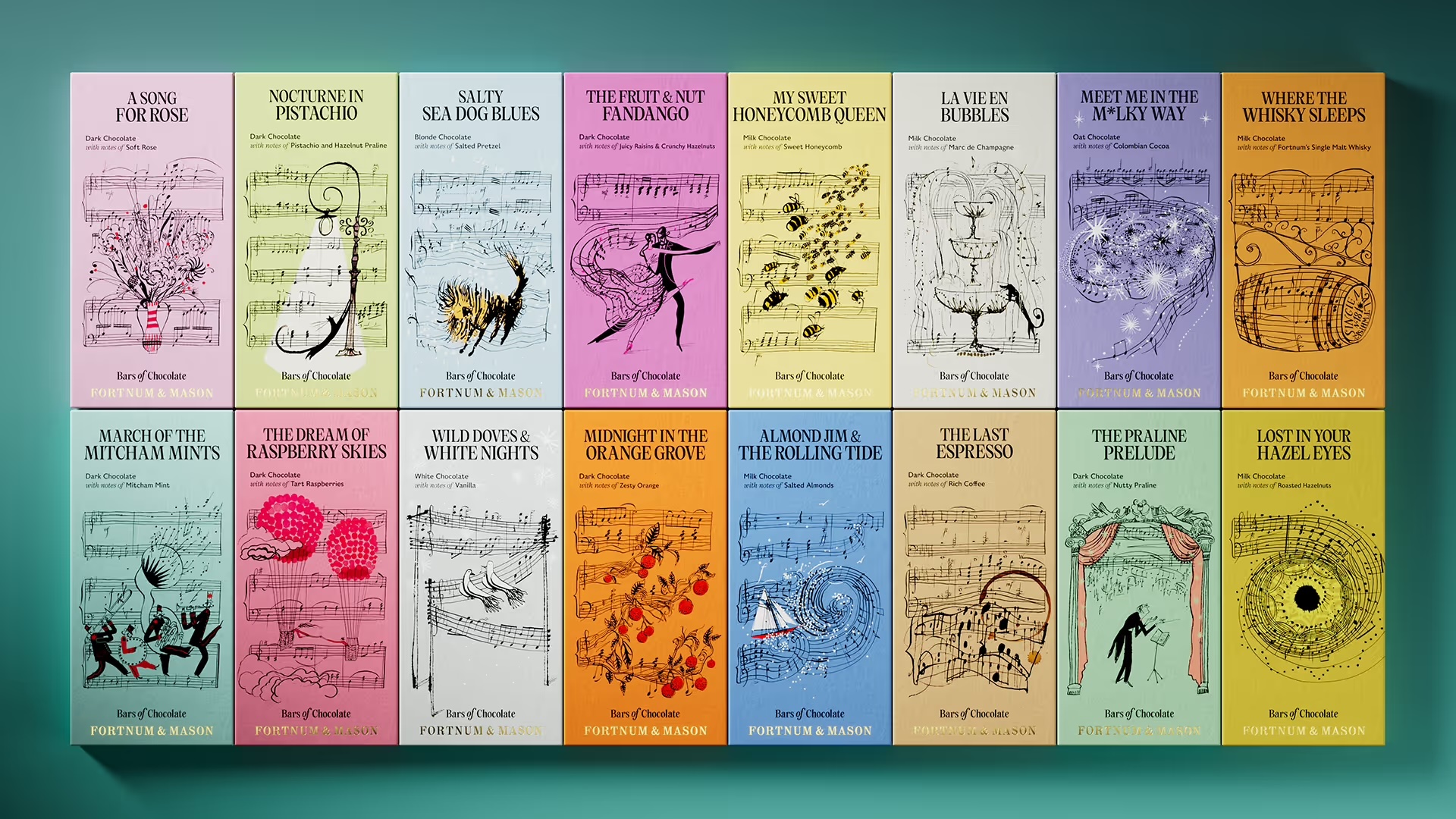

Users can turn a single photo into a 3D human in seconds in Character Creator, an all-in-one AI software package for character development. The designers used it to create and animate the factory workers. Once you convert a photo into 3D, you can fully customize the character: change the shape of the head, add photo-realistic details and skin features, dress the character, and, finally, animate it. Plus, the software comes with massive asset and motion libraries to choose from.

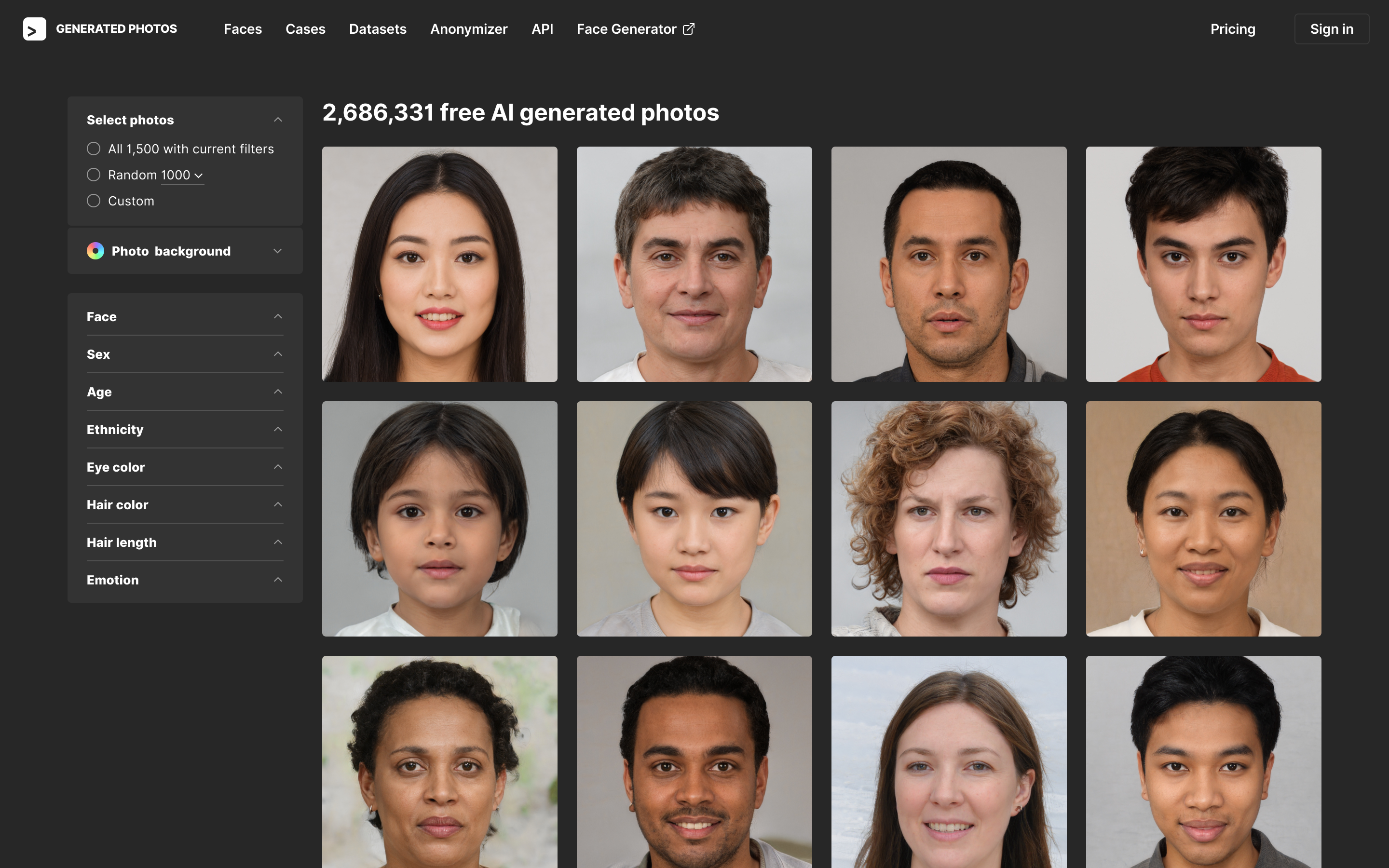

To personify the workers, the designers used AI-generated images from Generated Photos. In this library, you can choose from 2.6M strikingly realistic headshots of people of all colours and ages. You can also generate unique faces in real time by setting the desired parameters, or find synthetic look-alikes to protect the privacy of real people. These tools are especially handy when you need specific character features or demographics.

This step-by-step guide illustrates how to create a full-body 3D character from a single headshot.

The combination of Character Creator and Generated Photos is a big time saver. Creating a single character from scratch takes around five days. With a ready-made model and a unique, lifelike face, it takes from a few hours to one day, depending on how elaborate the character is. That saves roughly 80-90% of the time and effort without compromising quality.
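To see where the 80-90% figure comes from, here is a minimal back-of-the-envelope sketch in Python. It rests on two assumptions that are not stated in the article: a working day is taken as 8 hours, and "a few hours" is taken as 4 hours. The function name `time_saved_fraction` is purely illustrative.

```python
def time_saved_fraction(baseline_hours: float, new_hours: float) -> float:
    """Return the fraction of the baseline time saved by the faster workflow."""
    if baseline_hours <= 0:
        raise ValueError("baseline_hours must be positive")
    return (baseline_hours - new_hours) / baseline_hours


HOURS_PER_DAY = 8  # assumption: one working day is 8 hours

baseline = 5 * HOURS_PER_DAY   # "around five days" to build a character from scratch
slow_case = 1 * HOURS_PER_DAY  # "one day" for a more elaborate character
fast_case = 4                  # assumption: "a few hours" taken as 4 hours

low = time_saved_fraction(baseline, slow_case)
high = time_saved_fraction(baseline, fast_case)

print(f"Estimated saving: {low:.0%} to {high:.0%}")
```

Under these assumptions, the one-day case saves 80% of the baseline and the four-hour case saves 90%. A different reading of "a few hours" shifts the upper figure slightly.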

Once the characters are ready, you can export them into Omniverse for further editing, rendering, simulation, and working with others. Character Creator Connector, a special plugin for Omniverse, also supports drag-and-drop export of motions and accessories.

Are there other uses for digital avatars?

Beyond large industrial projects such as the BMW factory, demand for digital avatars is growing across many other fields, particularly animation, game development, visualisation, AR/VR, and interactive design.

Brands like Samsung, IBM and Gucci are already deploying AI avatars that interact with their audiences and make communication more human and personalised. Other businesses use AI avatars for online learning and training their staff. For example, Walmart introduced them to train and prepare their employees for challenging retail situations (like the Black Friday rush).

Digital avatars are now also a global phenomenon in media and entertainment. Virtual influencers like Lu do Magalu and Lil Miquela attract millions of followers. And several real musicians, including Ariana Grande and Travis Scott, have recently given massive virtual concerts in which digital avatars represented them.

Gaming and animation are among the main consumers of digital characters. With Character Creator and Generated Photos, makers can develop protagonists in great detail as well as create secondary characters at scale. Using Generated Photos and ready-made assets, such as morphs, hair, clothes, and skin textures, they can make hundreds or even a thousand secondary characters in a day.

Architects and property developers use digital crowds to make their visualisations appealing, vibrant, and lifelike. Demand for diversity in the field is also steadily increasing.

Large corporations and producers are not the only ones who can benefit from these AI tools; small teams and amateurs can join in, too. The toolset combining Omniverse, Character Creator, and Generated Photos saves a great deal of time and effort. Even creators on a tight budget can now keep pace with the industry leaders. They can also focus more on creative tasks while the AI does the heavy lifting.


Daniel John is Design Editor at Creative Bloq. He reports on the worlds of design, branding and lifestyle tech, and has covered several industry events including Milan Design Week, OFFF Barcelona and Adobe Max in Los Angeles. He has interviewed leaders and designers at brands including Apple, Microsoft and Adobe. Daniel's debut book of short stories and poems was published in 2018, and his comedy newsletter is a Substack Bestseller.