Picture elements: a new perspective on pixels

CG artist Denis Kozlov explains why a pixel is not a colour square and why you should think of images as data containers.

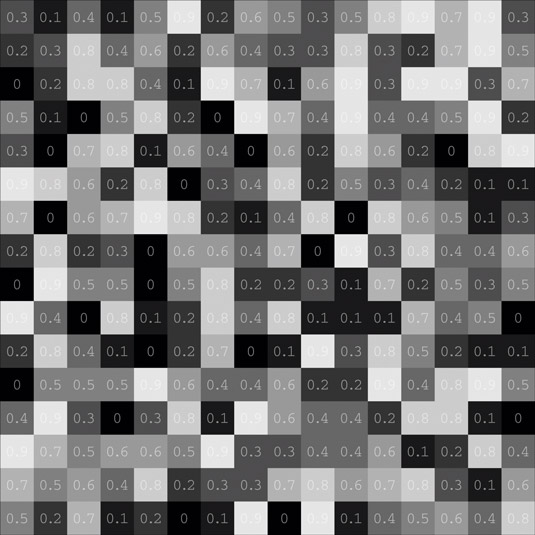

Although the raster image files filling our computers and lives are most commonly used to represent pictures, I find it useful for a CG artist to have yet another perspective – a geekier one. From that perspective, a raster image is essentially a set of data organised into a particular structure: more specifically, a table filled with numbers (a matrix, mathematically speaking).

The number in each table cell can be used to represent a colour, and this is how the cell becomes a pixel, which stands for 'picture element'. Many ways exist to encode colours numerically. Probably the most straightforward one is to explicitly define a number-to-colour correspondence for each value, i.e. 3 stands for dark red, 17 for pale green and so on. This method was frequently used in older formats like .gif, as it allowed for certain size benefits at the expense of a limited palette.
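The palette idea can be sketched in a few lines of Python. This is only an illustration: the palette entries, the tiny image and the `decode_indexed` helper are all made up for the example and do not reflect how any particular file format stores its data.

```python
# Hypothetical palette: index -> (R, G, B), each component in the 0-1 range.
PALETTE = {
    0: (0.0, 0.0, 0.0),     # black
    3: (0.5, 0.0, 0.0),     # dark red
    17: (0.6, 1.0, 0.6),    # pale green
    255: (1.0, 1.0, 1.0),   # white
}

# A 2x3 indexed raster: each cell holds a palette index, not a colour.
indexed_image = [
    [0, 3, 17],
    [17, 255, 3],
]


def decode_indexed(image, palette):
    """Replace every palette index with the colour it stands for."""
    return [[palette[index] for index in row] for row in image]


decoded = decode_indexed(indexed_image, PALETTE)
print(decoded[0][1])  # (0.5, 0.0, 0.0), i.e. dark red
```

The table itself only ever stores small integers; the colours appear once the palette is applied.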

Another way (the most common one) is to use a continuous range from 0 to 1 (not 255!), where 0 stands for black, 1 for white, and the numbers in between denote the shades of grey of the corresponding lightness. This way we get a logical and elegantly organised way of representing a monochrome image with a raster file.
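As a small sketch of why the 0 to 1 range is the one to think in, the familiar 0-255 values can be treated as just one storage encoding of it. The helper names below are hypothetical, and the rounding and clamping choices are assumptions made for the example.

```python
def to_unit_range(value_8bit):
    """Map an 8-bit code (0-255) to the continuous 0-1 range."""
    return value_8bit / 255


def to_8bit(value):
    """Map a 0-1 value back to an 8-bit code, clamping out-of-range input."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 255)


print(to_unit_range(255))  # 1.0 (white)
print(to_unit_range(0))    # 0.0 (black)
```

Note that the 8-bit encoding has to clamp anything above 1 or below 0, whereas the 0-1 representation itself imposes no such limit.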

The term 'monochrome' happens to be more appropriate than 'black and white', since the same data set can be used to depict gradations from black to any other colour depending on the output device – many old monitors, for example, were black-and-green rather than black-and-white.
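To illustrate, here is a minimal sketch in which the same row of monochrome values is shown by two imaginary output devices. The `tint` function and the device colours are assumptions for the example only.

```python
def tint(grey_row, colour):
    """Scale an output colour by each monochrome value in the row."""
    return [tuple(value * component for component in colour) for value in grey_row]


row = [0.0, 0.5, 1.0]                      # the stored data never changes

on_white_screen = tint(row, (1.0, 1.0, 1.0))
on_green_screen = tint(row, (0.0, 1.0, 0.0))

print(on_green_screen)  # [(0.0, 0.0, 0.0), (0.0, 0.5, 0.0), (0.0, 1.0, 0.0)]
```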

This system, however, can be easily extended to the full-colour case with a simple solution – each table cell can contain several numbers, and again there are multiple ways of describing a colour with a few (usually three) numbers, each in the 0-1 range. In the RGB model they stand for the amounts of red, green and blue light; in HSV they stand for hue, saturation and value (brightness) respectively. But what's vital to note is that those are still nothing but numbers, which encode a particular meaning, but don't have to be interpreted that way.
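Python's standard `colorsys` module works in exactly this 0-1 range, so it makes for a convenient sketch of the point. The sample values below are arbitrary; what matters is that one and the same triple gives a different colour depending on the model used to read it.

```python
import colorsys

# An orange, described as amounts of red, green and blue light.
orange_rgb = (1.0, 0.5, 0.0)
h, s, v = colorsys.rgb_to_hsv(*orange_rgb)
# h is roughly 0.083 (30 degrees on the hue wheel), s and v are both 1.0

# The same three numbers, interpreted in two different ways.
triple = (0.0, 1.0, 1.0)
as_rgb = triple                          # no red, full green, full blue: cyan
as_hsv = colorsys.hsv_to_rgb(*triple)    # hue 0, full saturation and value

print(as_hsv)  # (1.0, 0.0, 0.0), i.e. pure red
```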

A logical unit

Now let me move on to why a pixel is not a square. The table, which is what a raster image is, tells us how many elements are in each row and column and in which order they are placed, but nothing about what shape or even what proportion they are.

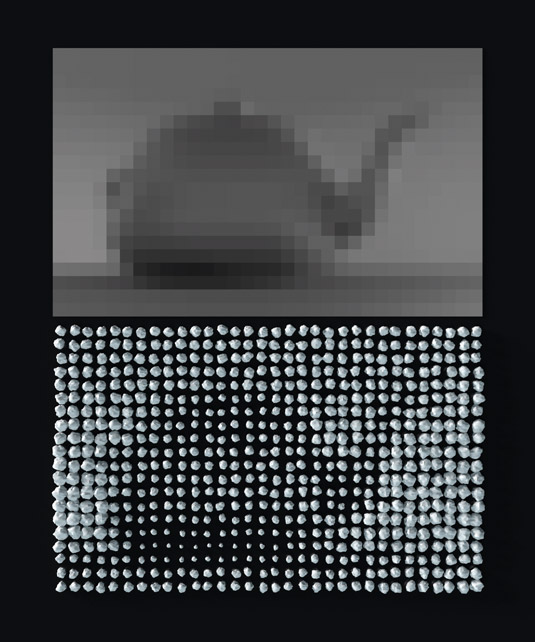

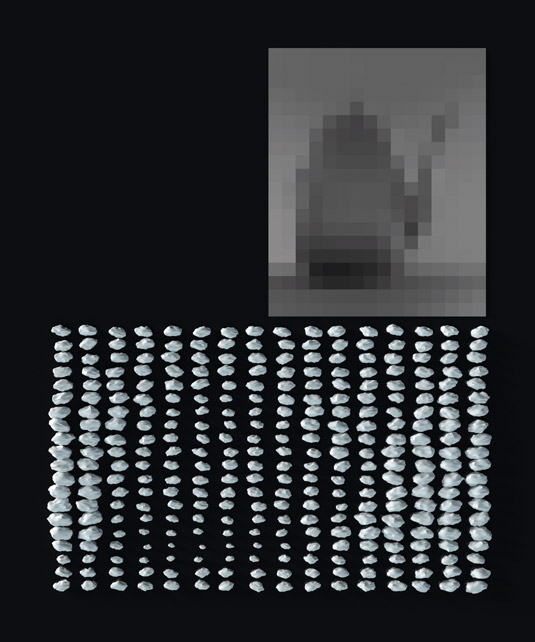

We can form an image from the data in a file by various means, not necessarily with a monitor, which is only one option for an output device. For example, if we take our image file and distribute pebbles of sizes proportional to pixel values on some surface – we shall still form essentially the same image.

And even if we take only half of the columns, but instruct ourselves to use stones twice as wide for the distribution, the result would still show essentially the same picture with the correct proportions, only lacking half of the horizontal detail.

'Instruct' is the key word here. This instruction is called the pixel aspect ratio: the width-to-height ratio of a single pixel, which accounts for the difference between the image's resolution (number of rows and columns) and its displayed proportions. It enables you to store frames stretched or compressed horizontally and is used in certain video and film formats.
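The pebble example can be written down as a one-line calculation. This is a sketch under the assumption that the pixel aspect ratio is expressed as pixel width divided by pixel height; `display_size` is a hypothetical helper and the resolutions are arbitrary.

```python
def display_size(columns, rows, pixel_aspect_ratio):
    """Return the displayed (width, height) in square-pixel units."""
    return columns * pixel_aspect_ratio, rows


full = display_size(1920, 1080, 1.0)      # every column stored, square pixels
squeezed = display_size(960, 1080, 2.0)   # half the columns, pixels twice as wide

print(full)      # (1920.0, 1080)
print(squeezed)  # (1920.0, 1080)
```

Both files produce a picture of the same proportions; the second one simply stores half as many horizontal samples.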

Now let's talk about resolution – it shows the maximum amount of detail that an image can hold, but says nothing about how much it actually holds. A badly focused photograph can't be improved no matter how many pixels the camera sensor has. In the same way, upscaling a digital image in Photoshop or any other editor will increase the resolution without adding any detail or quality to it – the extra rows and columns would be just filled with interpolated (averaged) values of originally neighbouring pixels.
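A deliberately simplified sketch of upscaling shows where the extra values come from. It works on a single row and inserts the average of each pair of neighbours; real image editors use more elaborate filters, so treat `upscale_row` as an illustration only.

```python
def upscale_row(row):
    """Insert the average of each pair of neighbouring values between them."""
    out = []
    for left, right in zip(row, row[1:]):
        out.append(left)
        out.append((left + right) / 2)
    out.append(row[-1])
    return out


row = [0.0, 1.0, 0.0]
print(upscale_row(row))  # [0.0, 0.5, 1.0, 0.5, 0.0]
```

Every new value is computed from values that were already there, so the row gets longer without gaining any detail.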

In a similar fashion, a PPI (pixels per inch, commonly also called DPI – dots per inch) parameter is only an instruction establishing the correspondence between the image file's resolution and the output's physical dimensions. And so PPI is pretty much meaningless on its own, without either of those two.
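In numbers, the relationship is a simple division. The helper name and the sample resolution below are assumptions chosen for the example.

```python
def print_size_inches(width_px, height_px, ppi):
    """Physical output size implied by a resolution and a PPI instruction."""
    return width_px / ppi, height_px / ppi


# The same 3000 x 2400 pixel file with two different PPI instructions.
print(print_size_inches(3000, 2400, 300))  # (10.0, 8.0)
print(print_size_inches(3000, 2400, 100))  # (30.0, 24.0)
```

The pixel data is identical in both cases; only the instruction for the output device differs.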

Storing custom data

Returning to the numbers stored in each pixel: they can of course take any value, including so-called out-of-range numbers (values above 1 and negative ones), and more than three numbers can be stored in each cell. These features are limited only by the particular file format definition and are widely used in OpenEXR, to name one.

The great advantage of storing several numbers in each pixel is their independence, as each of them can be studied and manipulated individually as a monochrome image called a channel – a kind of sub-raster.
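As a minimal sketch, pulling one channel out of a multi-number raster gives back an ordinary monochrome table. The image layout (rows of four-number tuples), the `CHANNELS` mapping and `extract_channel` are assumptions for the example, not a description of any file format.

```python
# A 2x2 raster where every pixel stores four numbers.
image = [
    [(1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 0.5)],
    [(0.0, 0.0, 1.0, 0.0), (1.0, 1.0, 1.0, 1.0)],
]

# Hypothetical channel names mapped to their position inside each pixel.
CHANNELS = {"R": 0, "G": 1, "B": 2, "A": 3}


def extract_channel(image, name):
    """Return one channel as a monochrome raster of the same resolution."""
    index = CHANNELS[name]
    return [[pixel[index] for pixel in row] for row in image]


print(extract_channel(image, "R"))  # [[1.0, 0.0], [0.0, 1.0]]
print(extract_channel(image, "A"))  # [[1.0, 0.5], [0.0, 1.0]]
```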

Additional channels to the usual colour-describing Red, Green and Blue ones can carry all kinds of information. The default fourth channel is Alpha, which encodes opacity (0 denotes a transparent pixel, 1 stands for completely opaque). Z-depth, normals, velocity (motion vectors), world position, ambient occlusion, IDs and anything else you could think of can be stored in either additional or the main RGB channels.
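The Alpha channel is a good example of a number that is not a colour at all, but an instruction for combining colours. The sketch below assumes straight (unpremultiplied) alpha and a hypothetical `over` helper working on a single pixel.

```python
def over(fg_rgb, alpha, bg_rgb):
    """Blend a foreground pixel over a background one using straight alpha."""
    return tuple(f * alpha + b * (1.0 - alpha) for f, b in zip(fg_rgb, bg_rgb))


red = (1.0, 0.0, 0.0)
blue = (0.0, 0.0, 1.0)

print(over(red, 1.0, blue))  # (1.0, 0.0, 0.0): opaque, foreground only
print(over(red, 0.0, blue))  # (0.0, 0.0, 1.0): transparent, background only
print(over(red, 0.5, blue))  # (0.5, 0.0, 0.5): an even mix
```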

Every time you render something out, you decide which data to include and where to place it. In the same way you decide in compositing how to manipulate the data you possess to achieve the result you want. This numerical way of thinking about images is of paramount importance, and will benefit you greatly in your visual effects and motion graphics work.

The benefits

Applying this way of thinking to your work – as you use render passes and carry out the compositing work – is vital.

Basic colour corrections, for example, are nothing but elementary mathematical operations on pixel values and seeing through them is pretty essential for production work. Furthermore, maths operations like addition, subtraction or multiplication can be performed on pixel values, and with data like Normals and Position many 3D shading tools can be mimicked in 2D.
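Both ideas can be sketched on single pixel values. The function names are hypothetical, and the relighting part assumes that the Normals pass stores unit vectors in the -1 to 1 range and that simple Lambertian (cosine) shading is good enough for the illustration.

```python
def gain(value, amount):
    """A brightness-style correction: plain multiplication."""
    return value * amount


def offset(value, amount):
    """A lift-style correction: plain addition."""
    return value + amount


def lambert(normal, light_dir):
    """Diffuse lighting from a stored normal: a clamped dot product."""
    length = sum(c * c for c in light_dir) ** 0.5
    unit_light = tuple(c / length for c in light_dir)
    return max(0.0, sum(n * l for n, l in zip(normal, unit_light)))


print(gain(0.25, 2.0))  # 0.5

# One pixel of a Normals pass, facing the camera along +Z.
normal = (0.0, 0.0, 1.0)
print(lambert(normal, (0.0, 0.0, 2.0)))   # 1.0: light shines straight at the surface
print(lambert(normal, (1.0, 0.0, 0.0)))   # 0.0: light grazes the surface
print(lambert(normal, (0.0, 0.0, -1.0)))  # 0.0: light comes from behind
```

Running `lambert` over every pixel of a Normals pass yields a new monochrome lighting channel, which can then be multiplied with the colour channels like any other correction.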

Words: Denis Kozlov

Denis Kozlov is a CG generalist with 15 years' experience in the film, TV, advertising, game and education industries. He is currently working in Prague as a VFX supervisor. This article originally appeared in 3D World issue 181.
