What is motion capture?

Discover what mo-cap is, what it's used for, and how AI is bringing it to the masses.

Motion capture is one of those terms that gets bandied about on the assumption that everyone knows what it means. But what is motion capture, exactly? And why does it matter to those making 3D movies, as well as to creatives working in other fields?

Let's find out...

What is motion capture?

Motion capture, also known as mo-cap, is a technique in which a person's or object's movements are tracked using a number of markers or sensors placed on them. The resulting data is collated and can then be used to replicate those motions, typically on a digital character. It is also possible to track a person in relation to other objects, such as the elements of a set.
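To make the idea of "replicating motions from tracked points" a little more concrete, here is a minimal Python sketch. It assumes an optical-style setup with three markers on an actor's shoulder, elbow and wrist, and uses made-up positions for two frames. From those it derives how bent the elbow is in each frame, the kind of value that could then drive the elbow joint of a digital character. This is a simplified illustration only; real pipelines solve full 3D rotations for an entire skeleton, and this is not how any particular commercial system works.

```python
import math

# Hypothetical example data: 3D marker positions (in metres) on an actor's
# right arm, one dictionary per captured frame.
frames = [
    {"shoulder": (0.0, 1.5, 0.0), "elbow": (0.3, 1.5, 0.0), "wrist": (0.6, 1.5, 0.0)},
    {"shoulder": (0.0, 1.5, 0.0), "elbow": (0.3, 1.5, 0.0), "wrist": (0.3, 1.8, 0.0)},
]


def joint_angle(a, b, c):
    """Return the angle in degrees at marker b, between segments b->a and b->c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    lengths = math.dist(a, b) * math.dist(c, b)
    if lengths == 0:
        raise ValueError("two markers share the same position")
    dot = sum(x * y for x, y in zip(ba, bc))
    # Clamp to [-1, 1] so floating-point rounding never breaks acos.
    cos_theta = max(-1.0, min(1.0, dot / lengths))
    return math.degrees(math.acos(cos_theta))


def elbow_curve(frames):
    """Elbow angle per frame: 180 means a straight arm, smaller means more bent."""
    return [joint_angle(f["shoulder"], f["elbow"], f["wrist"]) for f in frames]


print(elbow_curve(frames))
```

With this sample data the arm starts straight (about 180 degrees) and ends bent at a right angle (about 90 degrees). A per-frame curve like this is what an animator would then retarget onto a character's rig.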

The technique is often used in film when animated or CGI characters need to replicate the movements of a real actor, as with Gollum in The Lord of the Rings. It can also be used to generate a pattern: the identity that The Partners (now Superunion) created for the London Symphony Orchestra, for example, used motion capture of Sir Simon Rattle's conducting to produce its visuals.

What are the drawbacks of mo-cap?

Motion capture can be very expensive, and it requires a lot of specialist kit to work. If something goes wrong, you may have to reshoot the scene rather than fix the data later on. You also can't use it to capture movements that a person or object is physically unable to perform, such as ones that defy the laws of physics.

Today, however, innovations in AI are addressing these problems and helping to bring mo-cap to the masses.

How is AI shaking up mo-cap?

When Gavan Gravesen and Anna Bellini co-founded RADiCAL, their aim wasn't simply to boost their profits; they wanted to dramatically accelerate the 3D content pipeline.

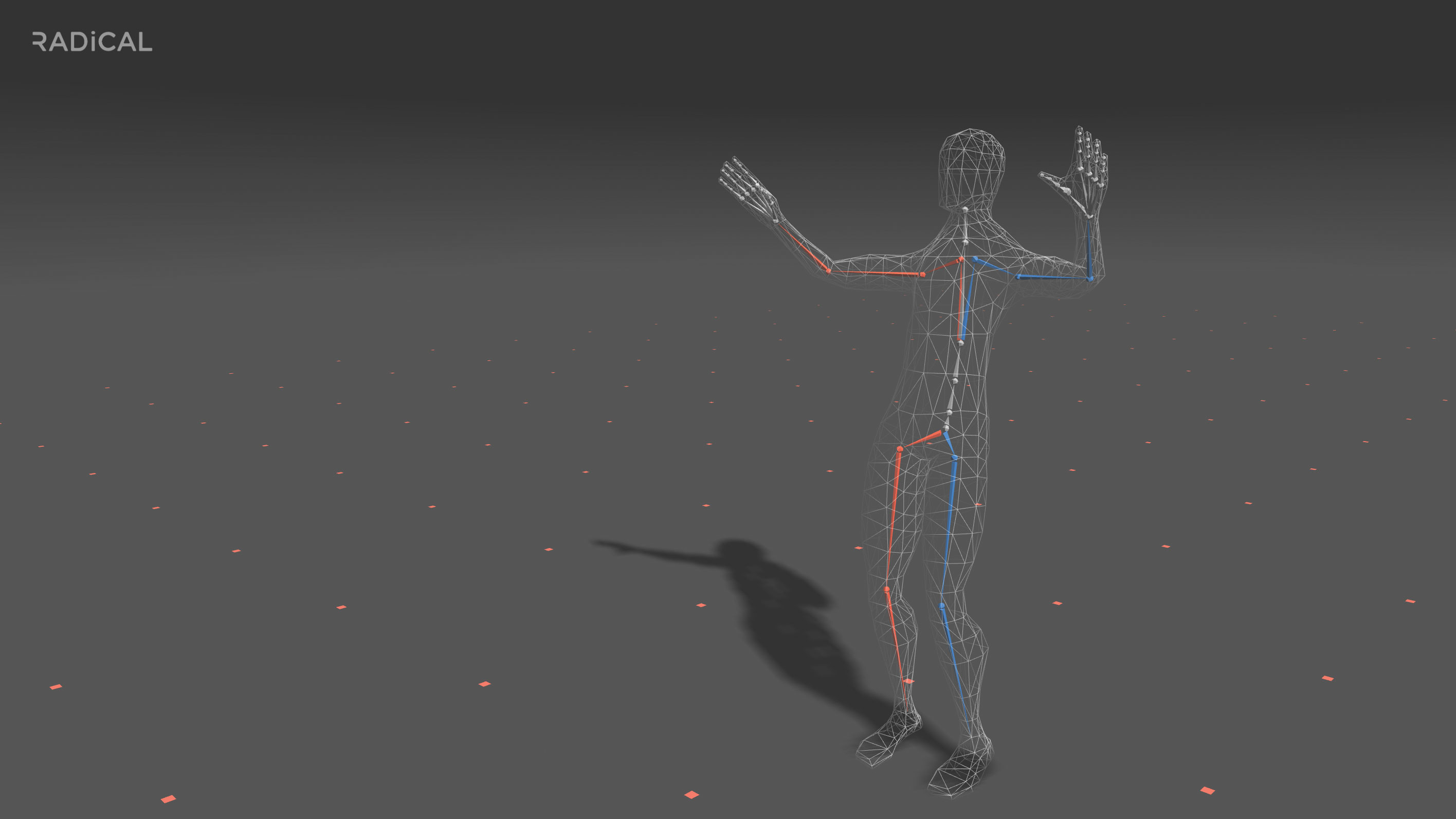

Two months after its public unveiling, RADiCAL’s AI-powered motion capture solution presents a spectacular break from tradition, cutting down the cost, hardware and skills users need to bring to the table. RADiCAL has already amassed close to 10,000 users, landed a partnership with NVIDIA, and seen interest from a number of larger studios.

What is RADiCAL?

The simple yet innovative AI turns ordinary 2D video into 3D motion data, without the need for any dedicated equipment or controlled environments. It allows 3D content creators of all stripes to use motion capture when animating humanoid characters.

“The idea was born after Anna and I had worked together in a previous venture in a related field,” explains Gravesen. “In 2017, we decided to put all of our efforts behind building the technology that became RADiCAL – we loved the idea of solving a real problem, shipping products and breaking down a major roadblock in the 3D content pipeline in the process.”

Guided by the simple principle that the solution had to be entirely hardware-agnostic and easy to use, the team combined deep learning, generative models, robotics, computer vision and biomechanics to “create an AI that would be easy enough for a novice to use, but sufficiently robust for an expert to add to their toolkit,” as Bellini explains.

How can RADiCAL be used?

In other words, RADiCAL aims to provide a solution that is universally available and integrates seamlessly with existing workflows. Gravesen explains: “Our hardware independence means that any camera can become your capture device, and any environment, indoors or outdoors, can be your studio.”

Users are able to record and upload 2D video footage from within RADiCAL’s iOS or Android apps, or use a cloud-based custom profile that can process content from other cameras.

Gravesen explains: “The final output is an FBX file rigged to the HumanIK standard that you download from our cloud service, for use in any 3D software or gaming engine. And we’ll add more rigs and file standards over the next months.”

What's next for RADiCAL?

The company will soon turn its attention to those who remain reluctant to work in the cloud, promising to offer on-premise installations to select customers in the near future.

Bellini explains how she built the technology: “Massive amounts of data are being processed to support an AI model capable of plausibly reconstructing human motion. We strive to capture and express subtle motion loyal to the actor’s movements. We don’t rely on canned motion or existing machine learning solutions. Instead, we crafted custom, low-level CUDA kernels for use with NVIDIA GPUs.”

RADiCAL’s solution is not yet real-time, but the team is optimistic it’ll get there soon. “We expect to release a version of our AI that runs device-side and in real-time in early 2019, perhaps earlier,” says Bellini. “Future versions will also feature multiple cameras and accommodate multiple actors. In other words, we will offer a whole range of products with different features over the next 12 months.”

The co-founders’ mission is to enable and empower independent creators and studios to embrace motion capture in a variety of new ways. Gravesen notes that “for the 3D ecosystem to grow as fast as we want it to, we need to change things up. We can’t rely on the AAAs and majors alone to create the content that we all know is needed. Rather, we need independent creators, creative agencies and studios of all sizes, corporate in-house teams, even students and academics to be empowered.”

Bellini concludes: “By making a tool that’s accessible no matter the size of the project, we can help to genuinely accelerate the 3D content pipeline across the board… for film, TV, gaming, AR and VR alike. Our users have brought us into their pipelines at all stages: we see independents, AAAs and majors using us in previz as well as in production.” RADiCAL’s apps are now available for anyone to try on both the App Store and Google Play.

Gravesen hopes that by giving motion capture to everyone, the team can continue to remove the roadblocks in content creation involving human motion.

This article was originally published in issue 237 of 3D World. Buy issue 237 or subscribe.

Brad Thorne was Creative Bloq's Ecommerce Writer, and now works for Liaison, a PR company specialising in 3D and VFX. He previously worked as Features Writer for 3D World and 3D Artist magazines, and has written about everything from 3D modelling to concept art, archviz to engineering, and VR to VFX. At Creative Bloq, he was responsible for creating content around the most cutting-edge technology (think the metaverse and the world of VR) and for keeping a keen eye on prices and stock of all the best creative kit.