The ethics of digital humans

Vertex speaker and CG industry veteran Chris Nichols debates the rise and role of virtual people.

The truth about digital humans is that they make most people uncomfortable. There's a biological reason for this. Since birth, you have been wired to look for threats. When something looks slightly off, alarms go off in your body, activating your adrenal glands and triggering big emotions like fear, anger and sometimes disgust. This is supposed to help you survive.

But appearances can be deceiving, and our brains can be tricked. Digital humans are one such example. When they look just slightly off, they can trigger the same sensations in us, and right now, most of them still feel wrong.

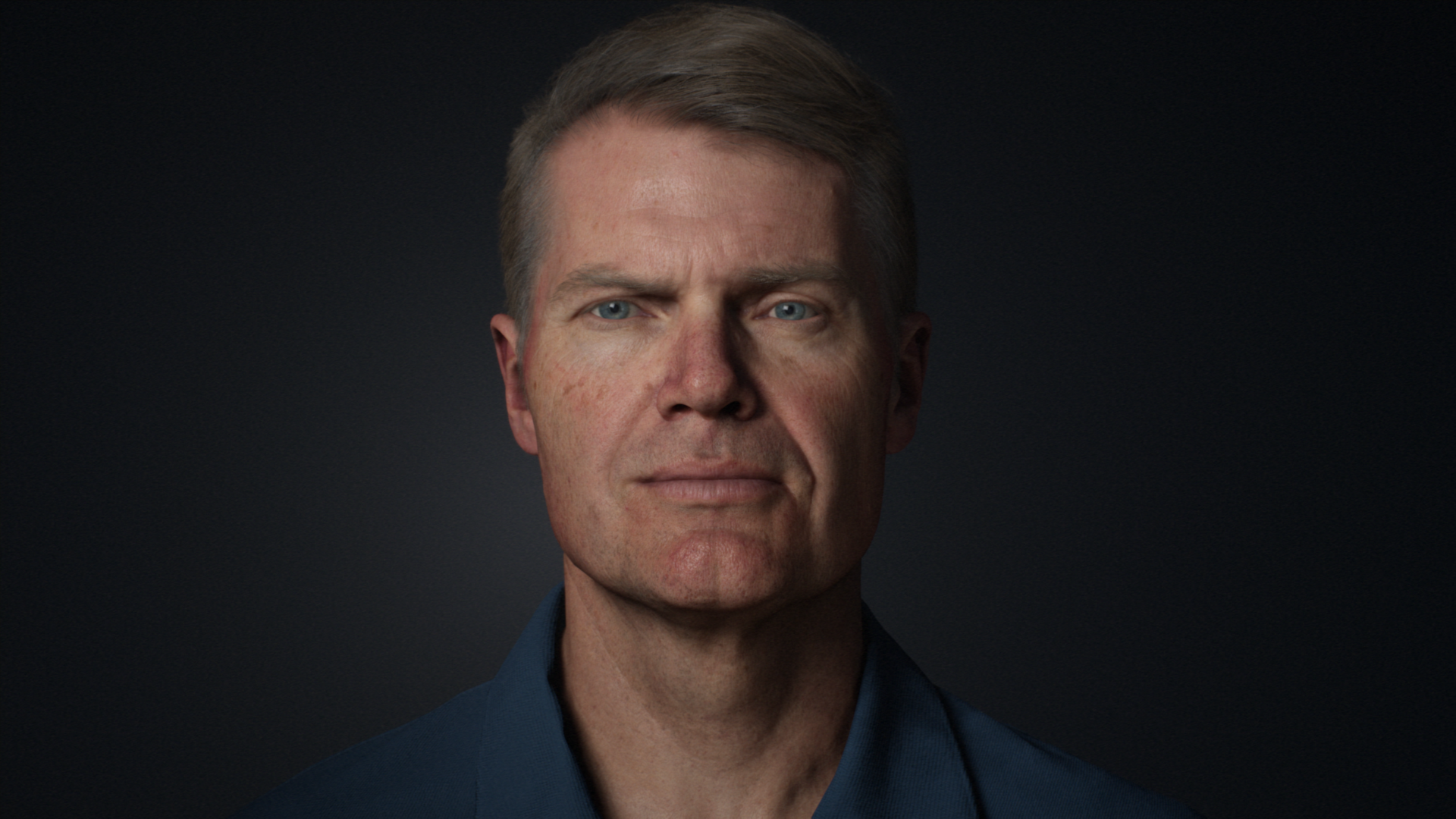

The world of computer graphics has spent the last few decades trying to overcome this challenge, which is known as the Uncanny Valley. And we've made progress, a lot of progress. With better technology and better-trained artists in the mix, getting a digital human out of the Valley is much more achievable.

As a result, the demand for digital humans has gone up, and we are starting to investigate the role they will play in our everyday lives. Because of this, a whole new set of problems has emerged, including questions of ethics and of our own safety and well-being.

Back from the dead

The question of ethics really came into play when the entertainment industry started to bring celebrities back from the dead. You might remember hologram 2Pac, or hologram Michael Jackson (who some say is being prepped for a tour), or even Paul Walker's last scene in Furious 7, which, like the others, was a product of digital restoration after his death. No matter what you think about these projects, they all raise serious questions. First and foremost: who owns your likeness after you die? And what should they be allowed to do with it?

If the thought of bringing actors back from the dead makes you upset, recent research may actually terrify you. Fake news and doctored information have been among 2017's top stories. And in the last few years, several papers have emerged that demonstrate how digital humans can be used to distort the truth through video manipulation.

2016's main example was Face2Face. This paper, from the University of Erlangen-Nuremberg, the Max Planck Institute for Informatics and Stanford University, showed how a webcam could be used to digitally puppeteer subjects in pre-existing video. So if you were to grimace in front of the camera while it was driving a video of Donald Trump, for example, digital Donald would grimace too, in exactly the same way.

A second paper, Synthesizing Obama: Learning Lip Sync from Audio, came out of the University of Washington in 2017 and demonstrated a similar technology, with one key difference. Instead of using a live person as the driver, this project took the audio from one video and seamlessly synthesized matching lip movements onto another. It's so good, it's spooky.

When you see things like this, it's easy to fall back on fear. Fear of digital humans. Fear of technology. Fear of what could go wrong. But if you stop there, you'll miss out on all the benefits. I like to compare it to the internet.

There are a ton of evil things you can do on the internet, but is the internet all bad? Of course not. It's a revolutionary tool that changed the world, in many ways for the better. The same will be said for digital humans. The pursuit of realism is already teaching us so much about human needs, desires and biological responses that you have to wonder what we'll learn when we achieve full photorealism.

Or when digital assistants break out of their current box. In time, they'll meet us where we live, looking us in the eyes with faces that feel complete. And with the help of AI, they'll be able to recognise our emotions and respond appropriately. Suddenly, the same biological mechanisms that used to set off warning bells around not-quite-right humans will help you feel soothed by ones that accurately mimic our characteristics, facial and otherwise.

This can be seen in recent research by Autodesk called AVA, which reminds us that, at our core, humans are social animals. We want to be part of a greater whole, with like-minded people who get us. The rise of digital humans will be one avenue to this feeling.

Another will be digital avatars. If VR takes off, as many of us believe it will, we're heading for a worldwide metaverse in which users will spend countless hours interacting with one another through virtual versions of themselves. At first, these will be low-poly avatars, far from the kind that cause discomfort in the Valley. But over time, people will want their avatars to reflect their attributes (whether real or fantasy-driven), meaning we'll either have to confront the Valley over and over again on our travels, or finally cross it.

Mike Seymour, who works with me on the Wikihuman project, is especially invested in this subject and is making progress every day. You might have seen his #MEETMIKE exhibition at SIGGRAPH, which allowed him to conduct interviews with people in a digital space, in real time, using arguably the most realistic digital avatar produced to date. It was exciting to watch.

And if you are like me, that's the biggest takeaway. We are living in an exciting time. Yes, there are things to be cautious of. And just like the internet, there are things we will have to self-regulate. But ultimately, the technological leaps we are making are going to completely change the world. It won't happen for years, but it's coming. And it's on all of us to make sure we do it right. I believe we can.

Get your ticket to Vertex 2018 now

For more insight into the future of CG, don't miss Chris Nichols' keynote presentation at Vertex, our debut event for the CG community. Book your ticket now at vertexconf.com, where you can find out more about the other amazing speakers, workshops, recruitment fair, networking event, expo and more.

Rob Redman is the editor of ImagineFX magazines and former editor of 3D World magazine. Rob has a background in animation, visual effects, and photography.