Sign up to Creative Bloq's daily newsletter, which brings you the latest news and inspiration from the worlds of art, design and technology.


AI art tutorials are a good place to start if you're looking to experiment with the best AI art generators, or if you've tried using them but aren't getting the results you hoped for. AI art remains controversial, but it's now everywhere on social media and it seems inevitable that it will play at least some role in various creative areas, from experimentation by established digital artists to the creation of social media memes.

AI image generators themselves have advanced rapidly in the past couple of years, but many people find that their first experiments don't look like the images they've seen on social media. That's partly because the best results often take a long process of trial and error, honing text prompts along the way, but it also helps to know how to get the most out of each model.

Below, we've collected the best AI art tutorials we've found, including Adobe Firefly, Midjourney, Flux, DALL-E 3 and Stable Diffusion tutorials and more. They range from general guides to how each model works and how to use its UI to more specific tutorials on things like making AI QR codes and optical illusions. You can use the quick links to skip to each section. We've also included FAQs on how AI image generators work.

The best AI art tutorials

We've divided our pick of the best AI art tutorials into sections for Adobe Firefly, Midjourney, DALL-E, Stable Diffusion, Flux and Ideogram, followed by other AI art tutorials. Use the quick links to jump to the section you want or scroll down to browse. If you're looking to combine AI image generation with other creative tools, we also have roundups of the best Photoshop tutorials, Illustrator tutorials and After Effects tutorials.

Adobe Firefly tutorials

01. Adobe Firefly V3 3D Tutorial

The graphic designer Will Paterson was blown away by the results he got using Adobe Firefly V3 to create 3D shapes and letters. In this video, he shares the process, from creating an initial image in Firefly to bringing an AI-created image back into Firefly to influence the creation of a new one. He also touches on using AI upscaling to increase the resolution of images before taking them into Photoshop, where they can be used in design assets or to create mockups much more easily.

02. A first look at Adobe Firefly

If there was any remaining doubt that text-to-image AI art was here to stay, the launch of Adobe Firefly dispelled it. The giant of creative apps entering the space is sure to make the tech more mainstream in all kinds of fields. Still in beta, Adobe Firefly is among the easiest AI image generators to use thanks to its user-friendly UI, and Adobe promises that its model is trained only on work by artists who have given permission.

The UI guides the user more, providing preset styles to choose from, although this means you don't get the same level of control through prompting that you have with generators like Stable Diffusion. At launch, it was also the only AI art generator that could generate text. In the tutorial above, web designer Payton Clark Smith demonstrates how both the image generator and the Firefly text effects tools work.


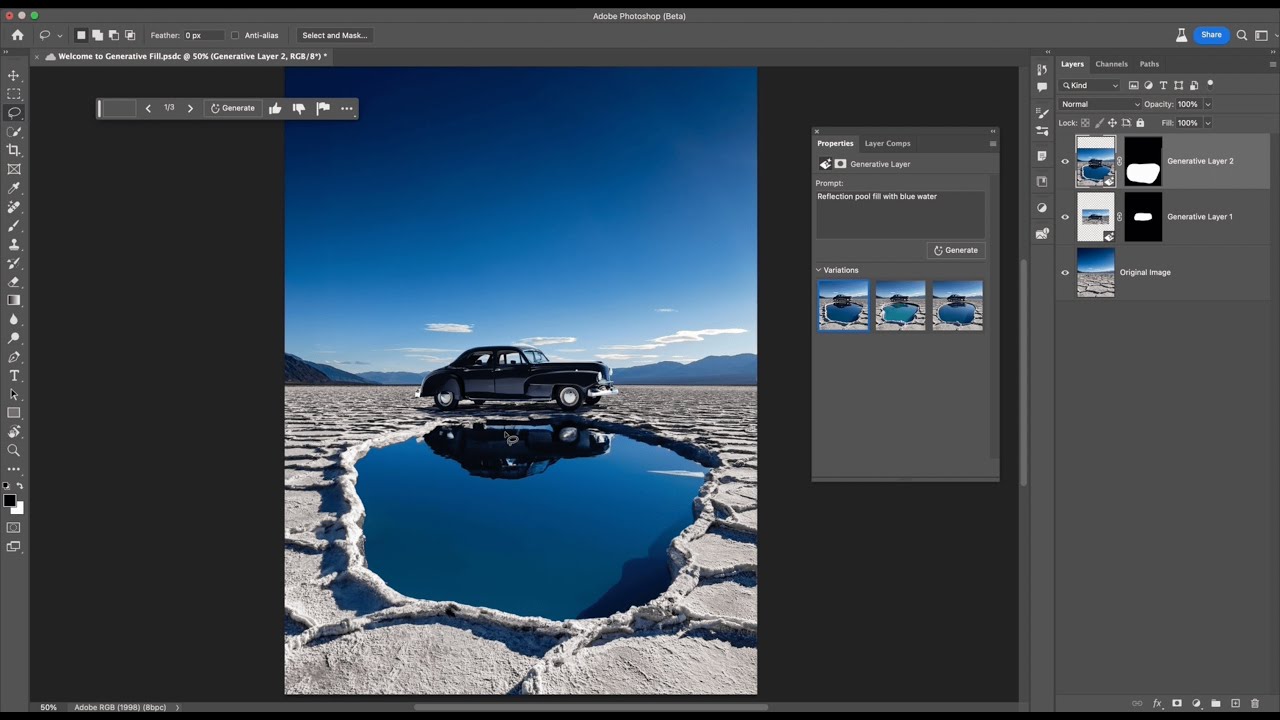

03. Adobe Firefly Generative Fill tutorial for Photoshop

In May 2023, Adobe brought Adobe Firefly's generative AI capabilities to Photoshop in the form of the new Generative Fill tool. This lets you use text prompts to generate imagery on non-destructive layers directly in Photoshop. You can also use the tool to select and transform elements in an existing image, and you can build up layers as you would with any other elements in Photoshop.

04. Recolor Vectors in Adobe Firefly

In this Adobe Firefly tutorial, Andrew Goodman walks through Recolor Vectors, one of the newer additions to Adobe's generative AI tools. The simple process lets users upload an SVG file of their work and use Firefly's AI generator to change the colours, either with their own prompts or with the example prompts provided.

05. Adobe Firefly Text Effects in Adobe Express

The process for creating AI Text Effects in Adobe Express is pretty intuitive, but if you want a quick overview of the process and the various options for changing parameters, this walkthrough from Claudio Zavala Jr covers everything clearly and succinctly.

The best Midjourney tutorials

01. Ultimate Midjourney guide: beginner to advanced

Updated in July 2024 to cover the Midjourney website, this comprehensive Midjourney AI art tutorial from Futurepedia is a thorough guide to one of the most popular AI image generators. The 57-minute deep dive demonstrates all of Midjourney's parameters with examples and covers the syntax for successful prompting, tips on using style references, achieving consistent characters, personalisation, character repaint, creating 360 images and more.

02. Midjourney V6.1 tutorial

If you're already familiar with Midjourney and just want a quick summary of what's new in Midjourney V6.1, Wade McMaster provides a quick breakdown in the video above.

Midjourney V6, which was released in December 2023, provided yet another massive upgrade in the photorealism of image generations, with incredible detail. It also has a more advanced understanding of prompts and much better handling of in-image text. In this Midjourney video tutorial, Theoretically Media provides a deep dive into that update.

03. Midjourney Alpha tutorial

A big change for Midjourney has been the launch of its own dedicated website, meaning that the social platform Discord is no longer the only way to access the model (at least if you've generated enough images to gain access to the alpha site in the initial launch). The move to a dedicated site sees the introduction of new features and a more intuitive UI. Theoretically Media runs through some of the main features.

04. Midjourney V6 advanced guide for cinematic photography

For a deeper dive into Midjourney V6, this tutorial focuses specifically on the creation of cinematic photorealistic images, something that the new model excels at. CyberJungle explores prompt engineering, keywords, camera angles, motion, fashion, film stocks, lighting techniques and more.

05. Building the perfect prompt for Midjourney

If you have more time on your hands, this lengthy deep dive into Midjourney (actually the second of two parts) is well worth digesting. Analog Dreams describes it as a masterclass, and this is one occasion where that isn't an overstatement. Clocking in at over 50 minutes, it goes deeper into promptcrafting, with examples of how to flesh out wording with styles and inspirations as well as parameters.

06. How to use separators in Midjourney prompts

Still sticking with prompts, this Midjourney tutorial focuses specifically on the different separators that you can use in them. Thaeyne shows the impact that punctuation choice (colon, vertical bar, parentheses, backslash, dashes and so on) can have on the results, with 60 clear examples.

07. Midjourney Blend tutorial

Analog Dreams also has a dedicated AI art tutorial on one of Midjourney's newest features, Blend, which can be used to combine up to five images. The tutorial shows examples of what the tool can do, what kind of images work best, what its limitations are and how to enable Remix in order to edit blended images using prompts.

08. Why you need to use seed in Midjourney

Seed is perhaps one of the least understood parameters in image creation with generative AI. You might have noticed that repeating the same prompt in an AI art generator can produce wildly different results each time. That's because each generation starts from random noise, and the seed is the number that determines that starting point. By specifying a seed number as a parameter, you can generate images similar to one you've already generated, which removes some of that 'randomness'. In this quick AI art tutorial, Glibatree shows how it works and how you can use it to learn how different words affect your prompt, so you don't have to start from scratch with each new image generation.
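
To make the idea concrete, here's a purely illustrative Python sketch. It is not how Midjourney works internally. The hypothetical `initial_noise` helper stands in for the random noise an image generator starts from, and it shows that reusing a seed reproduces exactly the same starting point, while a different seed gives a different one. In Midjourney itself, you set the seed by adding the `--seed` parameter to the end of your prompt.

```python
import random


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Hypothetical helper: return `size` Gaussian noise values for a seed.

    Real generators draw a whole noise tensor, but the principle is the same:
    the seed fully determines the random starting point of a generation.
    """
    rng = random.Random(seed)  # private generator, so global state is untouched
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


if __name__ == "__main__":
    first = initial_noise(seed=1234)
    again = initial_noise(seed=1234)
    other = initial_noise(seed=5678)
    print(first == again)  # same seed, identical starting noise
    print(first == other)  # different seed, different starting noise
```

Because the prompt and the starting noise together determine the result, keeping the seed fixed while changing one word is a fair way to see what that word actually does.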

09. How to transform sketches into masterpieces in Midjourney

AI art doesn't have to mean leaving the machine to do all of the work; you can also use AI image generators to transform your own drawings, using prompts to develop them into particular styles. In this AI art tutorial, Samson Vowles shows how he uses Midjourney to transform rough sketches by uploading his drawings, matching the aspect ratio, adding prompts and then further building on the results.

10. Midjourney tips for beginners

If you're new to Midjourney, Future Tech Pilot has plenty of tips that could help you get better results from your first experiments with the AI art generator. The video above covers everything from permutations to the use of the --sref style reference parameter, inpainting, and how to generate different styles of imagery in Midjourney.
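
Permutation prompts let you write options in curly braces, such as `a {red, blue} bird`, and Midjourney then runs one job for each combination. The Python sketch below is a simplified, hypothetical re-creation of that expansion that helps you preview how many jobs a prompt will produce. It does not handle nested braces or escaped commas, and it is not Midjourney's own code.

```python
import re

# Matches one non-nested {option, option, ...} group.
GROUP = re.compile(r"\{([^{}]*)\}")


def expand_permutations(prompt: str) -> list[str]:
    """Expand every {a, b, ...} group into all combinations of its options.

    Simplified sketch: the first group is expanded, then the function recurses
    on each resulting prompt until no groups remain.
    """
    match = GROUP.search(prompt)
    if match is None:
        return [prompt]
    head, tail = prompt[:match.start()], prompt[match.end():]
    results = []
    for option in match.group(1).split(","):
        results.extend(expand_permutations(head + option.strip() + tail))
    return results


if __name__ == "__main__":
    for p in expand_permutations("a {red, blue} bird --ar {1:1, 16:9}"):
        print(p)
```

Two groups with two options each give four prompts, which is worth keeping in mind because each one is a separate job.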

11. How to photobash in Midjourney

It's common practice to generate different images in Midjourney and then combine them in Photoshop, but this tutorial from Theoretically Media takes a different approach. It shows how some very rough photobashing in an image editing program can be polished in Midjourney (at least to an extent), which gives you another way to achieve the image you want if prompts alone aren't getting you there. You could photobash using your own images, images generated by AI or images from one of the best stock photo libraries.

The best DALL-E tutorials

01. DALL-E 3 tutorial

The release of DALL-E 3 in late 2023 saw OpenAI catch up with Midjourney again in terms of photorealism and prompt handling. It's also one of the easiest AI image generators to get started with, since it's a little more intuitive than Midjourney or Stable Diffusion. There's no need to run any code, communicate with a bot or sign up for a social media platform. This DALL-E 3 tutorial from AI Cents makes getting started even easier, breaking down the process in a way that's very easy to follow, from prompt basics to editing images and the ChatGPT integration.

02. How to make DALL-E 3 better

Not getting the results you hoped for? In this DALL-E 3 tutorial, AI Andy provides some suggestions for getting better results. These include using the ChatGPT integration for a conversational approach, editing images and adjusting parameters. Andy also provides pointers on how to achieve consistent-looking characters with the AI image generator.

03. DALL-E 2 tutorial

DALL-E 2 is still available, and it's more economical than DALL-E 3 (or even free). It doesn't require ChatGPT, and it has a range of editing tools. In this AI image generator tutorial, Promo Ambitions walks through the features.

04. DALL-E 2 inpainting/editing demo

Using DALL-E may be easy, but getting decent results with reference images is a different story. "Inpainting" is the term DALL-E 2 uses for editing your own images, or previously generated images, with its generative AI. You can upload an image and use prompts to manipulate it and add new elements. As we see in the demo, the results can be extremely hit-and-miss, but Bakz T. Future suggests a couple of ideas for troubleshooting.
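
Conceptually, inpainting regenerates only the area you mark and leaves the rest of the picture untouched. The Python sketch below illustrates just that final compositing step on a tiny grayscale "image" made of nested lists. The `generated` input stands in for new pixels from a model, and the `composite_inpaint` function is hypothetical. It is not DALL-E 2's implementation: real models also use the surrounding pixels as context when they fill the masked area.

```python
Image = list[list[int]]
Mask = list[list[bool]]


def composite_inpaint(original: Image, generated: Image, mask: Mask) -> Image:
    """Keep original pixels where the mask is False; use generated ones where True."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("all three inputs must have the same number of rows")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("all three inputs must have the same number of columns")
        result.append([g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)])
    return result


if __name__ == "__main__":
    photo = [[10, 10, 10], [10, 10, 10]]
    model_output = [[99, 99, 99], [99, 99, 99]]  # hypothetical generated pixels
    erased = [[False, True, False], [False, True, False]]  # the area you brushed out
    print(composite_inpaint(photo, model_output, erased))
```

This is why the size and placement of the erased area matter so much in practice: everything outside it is kept exactly as it was.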

05. DALL-E 2 real-time outpainting tutorial

While 'inpainting' refers to editing inside the frame of an image, 'outpainting' refers to editing beyond its borders, effectively expanding, or 'uncropping', an image. It works in a similar way to inpainting, but there are a few things to bear in mind about how to upload your starting image. The nice thing about this DALL-E 2 tutorial from ArtistsJourney is that it's filmed in real time, so we see the whole process (there's no 'now draw the rest of the owl').
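
One way to picture outpainting is to place the original image on a larger canvas and mark only the new border as the area to generate. The minimal Python sketch below does exactly that with nested lists. The `expand_canvas` function and its even padding on all four sides are illustrative assumptions. DALL-E 2's editor instead lets you position a generation frame that overlaps part of the existing image.

```python
Image = list[list[int]]
Mask = list[list[bool]]


def expand_canvas(image: Image, pad: int, fill: int = 0) -> tuple[Image, Mask]:
    """Pad an image on every side and return (canvas, mask).

    The mask is True for the new border pixels a model would be asked to
    fill in, and False where the original image is kept.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    new_width = width + 2 * pad
    canvas = [[fill] * new_width for _ in range(pad)]
    mask = [[True] * new_width for _ in range(pad)]
    for row in image:
        canvas.append([fill] * pad + list(row) + [fill] * pad)
        mask.append([True] * pad + [False] * width + [True] * pad)
    canvas.extend([fill] * new_width for _ in range(pad))
    mask.extend([True] * new_width for _ in range(pad))
    return canvas, mask


if __name__ == "__main__":
    canvas, mask = expand_canvas([[5, 6], [7, 8]], pad=1)
    for row in mask:
        print(row)
```

Once the canvas and mask exist, outpainting is the same masked generation as inpainting, which is why the two features behave so similarly.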

The best Stable Diffusion tutorials

01. How to install Stable Diffusion in Windows in 3 minutes

One of the hardest things about Stable Diffusion for those not familiar with the tech is getting started, at least if you want to use it directly rather than via a third party's implementation of the model. There are online versions of Stable Diffusion, including Stability AI's own DreamStudio, but since it's open source, you can also use Stable Diffusion for free, with a little bit of work to get set up. This Stable Diffusion tutorial from Royal Skies quickly and succinctly summarises the process for installing the model on Windows without a word wasted (you'll probably need to pause it a few times).

02. How to install Stable Diffusion on Mac

Using a Mac? No problem. Analog Dreams has a Stable Diffusion tutorial showing a straightforward option for installing it on macOS by downloading a single file. You'll need a Mac with an M1 chip or later. 16GB of RAM is recommended for running the program, but 8GB seems to cope.

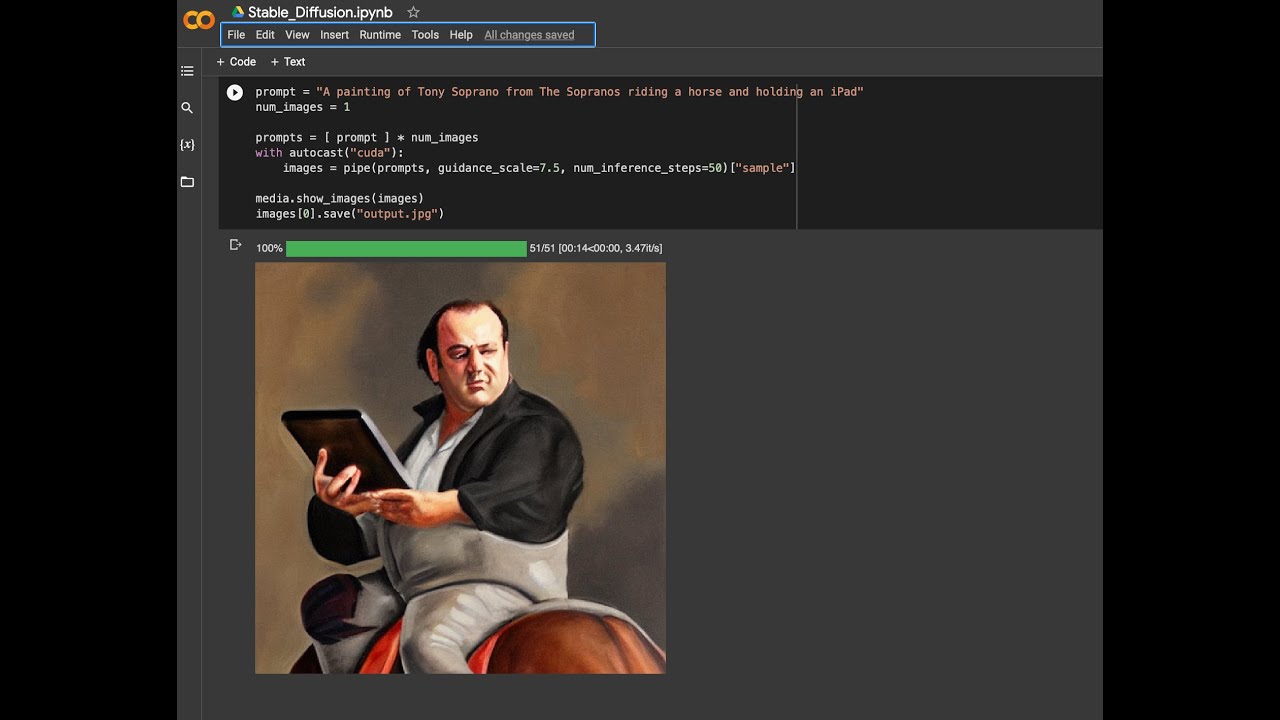

03. How to use Google Colab to run Stable Diffusion

Another way to run Stable Diffusion, with no major technical requirements, is in your browser via Google Colab. This AI art tutorial from The Digital Dilettante shows how to run it, what it looks like and how to use it. It might look a little intimidating if you're not used to looking at code, but you don't need to know how to code, and this is a free way to use the open-source model. You'll need a Google account and a Hugging Face account.

04. Run Automatic1111 Stable Diffusion on Google Colab

If you want to use Stable Diffusion in Google Colab with a friendlier user interface, here's another option to try. It's quick, and the tutorial also walks through the Automatic1111 UI and the options available for image generation. This is an accessible way to start with Stable Diffusion without a local installation or a powerful computer.

05. Stable Diffusion prompt guide

This Stable Diffusion tutorial from AI fan Nerdy Rodent offers a good primer on how to use prompts to generate images with the AI model. We see which words work (and which really don't), as well as how things like word order and punctuation can affect the resulting images.
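
Here's one concrete example of punctuation mattering. In the Automatic1111 web UI, writing words in parentheses with a number, as in `(red scarf:1.3)`, changes how much attention the model pays to them. Plain parentheses and square brackets also raise and lower emphasis. The Python sketch below is a simplified, hypothetical parser that handles only the explicit `(text:weight)` form, to show how a prompt breaks down into weighted chunks. It is not Automatic1111's own parser.

```python
import re

# Matches an explicit weight such as "(red scarf:1.3)".
WEIGHTED = re.compile(r"\(([^():]+):\s*([0-9]*\.?[0-9]+)\)")


def parse_weights(prompt: str) -> list[tuple[str, float]]:
    """Split a prompt into (text, weight) chunks; unweighted text gets 1.0."""
    chunks = []
    position = 0
    for match in WEIGHTED.finditer(prompt):
        before = prompt[position:match.start()].strip(" ,")
        if before:
            chunks.append((before, 1.0))
        chunks.append((match.group(1).strip(), float(match.group(2))))
        position = match.end()
    rest = prompt[position:].strip(" ,")
    if rest:
        chunks.append((rest, 1.0))
    return chunks


if __name__ == "__main__":
    print(parse_weights("portrait of a woman, (red scarf:1.3), soft light"))
```

Weights above 1 emphasise a phrase and weights below 1 play it down. Small steps such as 1.1 to 1.3 are a sensible place to start.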

06. Stable Diffusion settings explained

Know how to write a prompt but wondering what all the different Stable Diffusion settings mean? In the Stable Diffusion tutorial above, the ever-energetic and direct Royal Skies talks us through them all, from sampling steps to batch count, CFG scale and seed. He also has individual tutorials on several of the settings.
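
CFG stands for classifier-free guidance. At each sampling step, the model makes two noise predictions, one with your prompt and one without it. The CFG scale then sets how far the final prediction is pushed towards the prompted one. The Python sketch below shows that combination, with plain lists standing in for the model's prediction tensors. The function name is our own, and in practice the calculation happens inside the sampler at every step.

```python
def apply_cfg(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance: uncond + scale * (cond - uncond).

    scale = 1 returns the prompt-conditioned prediction unchanged; larger
    values follow the prompt more strongly (and can overshoot at extremes).
    """
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    without_prompt = [0.0, 1.0]  # stand-in for the unconditional prediction
    with_prompt = [1.0, 1.0]     # stand-in for the prompt-conditioned prediction
    print(apply_cfg(without_prompt, with_prompt, scale=7.0))
```

This explains the usual advice about the CFG setting: low values give the model more freedom, while very high values exaggerate the prompt's influence and can produce harsh, oversaturated images.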

07. How to make AI videos with Stable Diffusion

In this AI art tutorial, self-confessed tech nerd Matt Wolfe explores how animations can be made using Stable Diffusion Automatic1111 and the Deforum add-on. It's a lengthy deep dive, and the interview format is a little unusual for a tutorial. Still, if you're interested in creating moving images with Stable Diffusion, this video provides a good overview of the tabs and settings in Deforum, including how options in the Keyframes tab can be used to change angles and zoom. You'll need to be running Stable Diffusion locally or on a cloud server (the tutorial is based on running it in RunDiffusion).
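
In Deforum, motion settings such as zoom are written as schedules of keyframes in the form `frame:(value)`, for example `0:(1.0), 30:(1.05)`, and the add-on fills in the frames between them. The Python sketch below parses that format and works out the value for any frame. It assumes straight-line (linear) interpolation between keyframes and handles plain numbers only. Deforum schedules can also contain formulas, which these hypothetical helpers ignore.

```python
import bisect
import re

# Matches one "frame:(value)" keyframe, e.g. "30:(1.05)".
KEYFRAME = re.compile(r"(\d+)\s*:\s*\(\s*(-?[0-9]*\.?[0-9]+)\s*\)")


def parse_schedule(schedule: str) -> dict[int, float]:
    """Parse a string such as "0:(1.0), 30:(1.05)" into {frame: value}."""
    return {int(frame): float(value) for frame, value in KEYFRAME.findall(schedule)}


def value_at(keyframes: dict[int, float], frame: int) -> float:
    """Linearly interpolate between keyframes; hold the end values outside them."""
    frames = sorted(keyframes)
    if not frames:
        raise ValueError("schedule has no keyframes")
    if frame <= frames[0]:
        return keyframes[frames[0]]
    if frame >= frames[-1]:
        return keyframes[frames[-1]]
    idx = bisect.bisect_right(frames, frame)  # frames[idx - 1] <= frame < frames[idx]
    left, right = frames[idx - 1], frames[idx]
    t = (frame - left) / (right - left)
    return keyframes[left] + t * (keyframes[right] - keyframes[left])


if __name__ == "__main__":
    zoom = parse_schedule("0:(1.0), 30:(1.05), 60:(1.0)")
    print([round(value_at(zoom, f), 4) for f in (0, 15, 30, 45, 60)])
```

Keyframes are what let a camera move ease in and out rather than changing abruptly, so a few well-placed keyframes often go further than many.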

08. Inject yourself into the AI and make any image with your face

Think you can do better than Donald Trump’s NFT trading cards? This Stable Diffusion tutorial shows how you can put your face into any scene. There are easy-to-use Stable Diffusion-based apps that can do this, but they cost money and often limit you to certain styles. You can do the same thing with more freedom, for free, if you're running Stable Diffusion yourself. This guided AI art tutorial is quick, to the point and easy to follow.

Flux tutorials

01. How to train a Flux Lora

Several Flux AI images went viral back in August due to their incredible realism. But they weren't created using Flux alone: early experimenters running the model on their own devices paired it with low-rank adaptations (loras), a fine-tuning technique originally developed for large language models.

Loras add extra guidance that steers the model towards a particular style. In the case of the viral photorealistic images, the user had employed XLabs' lora, but users can also train their own loras to get the look they want. Nerdy Rodent wanted to use Flux to generate images in a Japanese woodblock art style but found the results looked too much like photos. His solution was to train his own lora, and in the video above he shows how to do it. He also shows how to use Flux loras in the open-source interface ComfyUI.
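
The "low-rank" part of the name describes how a lora changes a model. Instead of retraining a huge weight matrix, it trains two much smaller matrices whose product is added to the original weights. The pure-Python sketch below shows that update for a single weight matrix, following the standard LoRA formulation W + (alpha / r) · B · A. The function and argument names are our own. Tools such as ComfyUI apply this kind of update across many layers for you, usually with an adjustable strength.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(weight: Matrix, down: Matrix, up: Matrix, alpha: float) -> Matrix:
    """Return weight + (alpha / r) * (up @ down).

    `up` is d x r and `down` is r x k, so their product matches the d x k shape
    of `weight`, yet only r * (d + k) numbers had to be trained.
    """
    rank = len(down)  # r, the (small) rank of the update
    delta = matmul(up, down)
    scale = alpha / rank
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for one layer of a model
    print(apply_lora(base, down=[[3.0, 4.0]], up=[[1.0], [2.0]], alpha=1.0))
```

For a real layer with thousands of rows and columns, a rank of 16 or so means training a tiny fraction of the parameters. That is why loras are small files you can download, swap and combine.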

Ideogram tutorial

Another AI image generator to burst onto the scene in 2024 is Ideogram 2. Users have been particularly impressed with how accurately it generates text compared to many other AI image generators. In the Ideogram tutorial above, Theoretically Media explores the model, its presets and, of course, its main highlight: how it generates text.

Other AI art tutorials

01. How to use AI art for product photography

AI image generators can create some stunning imagery, but what are the practical uses? Anyone proclaiming the death of photography is getting a little ahead of themselves, because an AI model can't accurately depict a specific product it has never seen. However, many people are finding ways to combine AI art with photography and incorporate it into their workflows. In the AI art tutorial above, the Austrian food and product photographer Oliver Fox demonstrates how he uses Midjourney to create backgrounds and props that can then be combined with photographs in post-production to create impressive product photography.

02. How to upscale AI art

One of the problems with AI art is its size. Most AI image generators only render quite a small image, which might be fine for use on social media, but it doesn't have the resolution necessary for use at a larger scale or for print. There are ways to deal with that, however. In this AI art tutorial, Analog Dreams shows how to upscale AI art. He uses Topaz Labs' Gigapixel AI to upscale his images, but there are also other software programs out there.
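
The arithmetic behind "too small for print" is simple: the pixels needed equal the print size in inches times the pixels per inch (ppi), and 300ppi is a common rule of thumb for high-quality print. The short Python sketch below, a hypothetical helper of our own, works out how many times larger an image must be to reach a given print size.

```python
def upscale_factor(width_px: int, height_px: int,
                   print_width_in: float, print_height_in: float,
                   ppi: int = 300) -> float:
    """How many times larger an image must be to print at a given size.

    Pixels needed = inches x pixels per inch. Returns 1.0 if the image is
    already large enough.
    """
    needed_width = print_width_in * ppi
    needed_height = print_height_in * ppi
    return max(needed_width / width_px, needed_height / height_px, 1.0)


if __name__ == "__main__":
    # A 1024 x 1024 image printed at 10 x 10 inches needs 3000 x 3000 pixels.
    print(upscale_factor(1024, 1024, 10, 10))
```

For a 10 x 10-inch print at 300ppi, a 1,024-pixel square image needs roughly a 2.93x enlargement, so in practice you'd pick the next whole-number setting (3x or 4x) in an upscaler.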

03. How to prepare AI art for sale

This AI art tutorial from digital artist Vladimir Chopine considers the market for AI art and how to sell it. Part of this again involves upscaling images to make them large enough to use, but Chopine also looks at making finishing touches in Photoshop and offers some pointers on things to consider when looking to sell AI art, including where to sell it and how to present it so that it stands out.

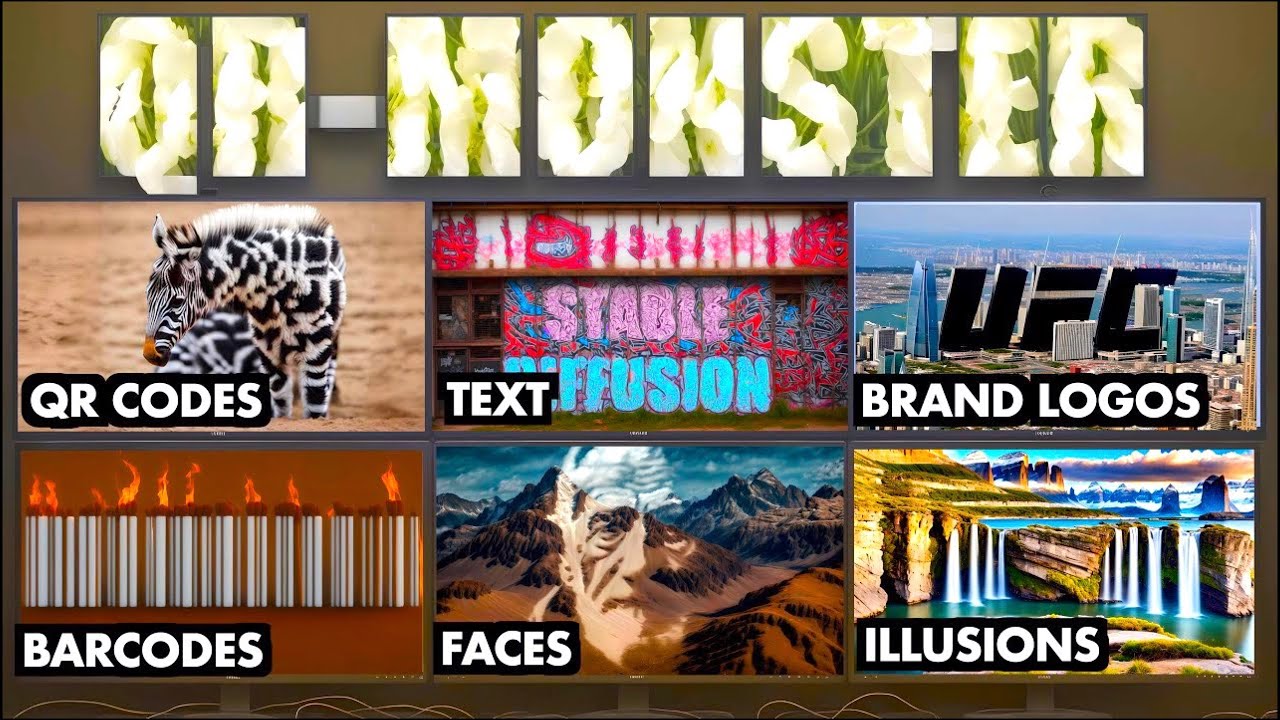

04. How to use AI to make QR codes and optical illusions

One of the latest trends in AI art on social media has been to use the ControlNet technique to make AI-generated QR codes and optical illusions. ControlNet works with Stable Diffusion and allows users to introduce specific inputs, be they images, text or patterns like QR codes, that will then appear in the AI-generated image. This makes it possible to create working QR codes that look like photorealistic images, for example. In the video above, AI Voice Tutor demonstrates a workflow for this that uses QR Monster.

05. Create Cinematic AI Videos in Runway Gen 2

The next frontier in AI image generation is AI video, and Runway is one of the players leading the way. In this tutorial, Curious Refuge provides some tips on interpolating and upscaling to get optimal results.

06. Google Imagen 2 overview

Another of the most powerful AI image generators is Google Imagen 2. Access is still restricted, but in this overview, All Your Tech AI talks us through how the latest version compares to its predecessor, some of the technicalities, and its Fluid Style Conditioning, inpainting and outpainting features.

What is AI art?

At its broadest definition, AI art can refer to pieces of digital art created in various ways either by or with the assistance of an artificial intelligence. However, it's currently mainly used to describe images created using the latest text-to-image diffusion models such as Midjourney, DALL-E 2 and Stable Diffusion. Images may be created entirely by the AI model or partly by the AI model and partly by a human, who may build upon the AI's work or take elements generated by an AI and combine them in their own work using other programs.

How is AI art made?

There are various types of AI image generators, but the current explosion of AI art is the result of text-to-image diffusion models. These are deep-learning models that generate digital images from natural-language descriptions: you type in what you want to see, and the AI model creates an image of it. During training, diffusion models learn to reverse a process that gradually adds noise to images until nothing recognisable is left. To create a new image, they start from pure noise and remove it step by step, guided by your text prompt.
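
The noising half of that process is simple enough to write down. In the widely used DDPM formulation, the image at step t is a mix of the original and Gaussian noise: x_t = sqrt(alpha_bar_t) · x_0 + sqrt(1 − alpha_bar_t) · noise, where alpha_bar_t shrinks towards zero as t grows. The Python sketch below assumes the linear noise schedule from the original DDPM setup (beta rising from 0.0001 to 0.02 over 1,000 steps) and uses a list of numbers in place of an image. The model's actual job, learning to predict and remove that noise, is not shown.

```python
import math
import random


def alpha_bars(steps: int = 1000, beta_start: float = 1e-4,
               beta_end: float = 0.02) -> list[float]:
    """Cumulative 'signal kept' factors for a linear noise schedule."""
    if steps < 2:
        raise ValueError("need at least two steps")
    bars, running = [], 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / (steps - 1)
        running *= 1.0 - beta  # each step keeps a little less of the original
        bars.append(running)
    return bars


def add_noise(x0: list[float], t: int, bars: list[float],
              rng: random.Random) -> list[float]:
    """Jump straight to step t of the forward (noising) process."""
    keep = math.sqrt(bars[t])         # share of the original that survives
    noise = math.sqrt(1.0 - bars[t])  # share of Gaussian noise mixed in
    return [keep * x + noise * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    bars = alpha_bars()
    rng = random.Random(0)
    pixels = [0.8, -0.2, 0.5]
    for t in (0, 250, 999):
        print(t, round(bars[t], 5), [round(v, 3) for v in add_noise(pixels, t, bars, rng)])
```

By the last step almost none of the original remains, which is why generation can start from pure random noise and still end up with a coherent image once the trained model reverses the process.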

How do AI image generators work?

Most of the latest generation of AI art generators are diffusion-based models that convert text to imagery. You write a text prompt describing the image you want to create and set any parameters, and the model then creates what it thinks your description should look like. Some generators, such as Midjourney, produce four initial images by default, so you can fine-tune the one you like best before exporting it.

Depending on the AI image generator, you may also be able to use an existing image as a reference for the image the AI generates. You may also be able to change parts of a generated image using new prompts, a process known as inpainting, or expand the image beyond its borders, which is known as outpainting. Some AI image generators also support specific instructions for keeping characters or styles consistent across generations.

How is AI trained to make art?

AI image generators are trained using datasets comprising images and captions in order to make connections between images and descriptions of objects, people, places and styles. The latest models have often been trained using billions of images scraped from the web.

Is AI art really art?

This is a philosophical question that has been much debated, and there are strong opinions on either side. Some argue that AI art cannot be real art because it's created by a machine that doesn't understand what it's doing but merely reproduces variations of existing pieces of art. Proponents take a different view, arguing that the AI is controlled and directed by a person and, like any creative tool, can realise that person's creative vision. There's also an argument, drawing on Plato's theory of ideas, that AI art isn't copying an image of, say, a table, but learning that there's an ideal version of a table. However, we've seen examples of AI image generators turning out almost exact copies of original works.

Is AI art legal?

To date, we're not aware of any law passed against using AI art generators, or of any law requiring art created with AI image generators to be clearly labelled as such. Artists have launched legal action against the companies behind some of the most well-known models, arguing that they infringed artists' rights by training their AI tools on images scraped from the web without consent. That legal action targets the companies rather than the users of the image generators.

Who owns AI art?

OpenAI says that DALL-E 2 users own the images they create and have the right to reprint them, sell them and use them on merchandise, but copyright law would seem to contradict the part about ownership (see below). Other companies state that any image generated with their generators is in the public domain.

Can AI art be copyrighted?

After controversially granting copyright to the graphic novel Zarya of the Dawn, the US Copyright Office has since clarified that AI-generated images cannot be copyrighted because they are “not the product of human authorship.”

Guidance updated in the US Federal Register in March 2023 states that: “Based on the Office's understanding of the generative AI technologies currently available, users do not exercise ultimate creative control over how such systems interpret prompts and generate material. Instead, these prompts function more like instructions to a commissioned artist—they identify what the prompter wishes to have depicted, but the machine determines how those instructions are implemented in its output.”

What about work that combines the two, say, an original photograph combined with an AI-generated background? In this case, copyright can protect the parts that have been created or modified by a human, but not the entire work.

Can artists make money with AI art?

In theory, artists can use AI art the same way they would use any art, which includes selling either original files or copies. The questions around copyright mean that artists may struggle to stop people from making copies of any work produced using an AI image generator, although there is always the option of turning work into an NFT in order to prove that it’s the original (see our article on how to make an NFT).

Do AI art generators make money?

Yes, most AI art generators make money in some way for the companies that developed them. Some AI image generators have free plans, with a subscription being required to unlock more advanced features. Others grant a limited number of free credits after which users need to buy more.

For more tutorials, see our roundups of the best Photoshop tutorials and the best Premiere Pro tutorials.

Joe is a regular freelance journalist and editor at Creative Bloq. He writes news, features and buying guides and keeps track of the best equipment and software for creatives, from video editing programs to monitors and accessories. A veteran news writer and photographer, he now works as a project manager at the London and Buenos Aires-based design, production and branding agency Hermana Creatives. There he manages a team of designers, photographers and video editors who specialise in producing visual content and design assets for the hospitality sector. He also dances Argentine tango.