The rapid rise of AI image generators and editors has raised wide-ranging concerns, from copyright to the impact on creative jobs. But even outside the creative sector, members of the public may start to worry about what could happen now that anyone can find their photos online and potentially doctor them using AI.

Watermarking offers little protection against manipulation now that AI watermark removers exist. But as AI image generators proliferate, so do potential countermeasures. The latest comes from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), which has announced a tool called PhotoGuard (see our pick of the best AI art generators to learn more about the expanding tech).

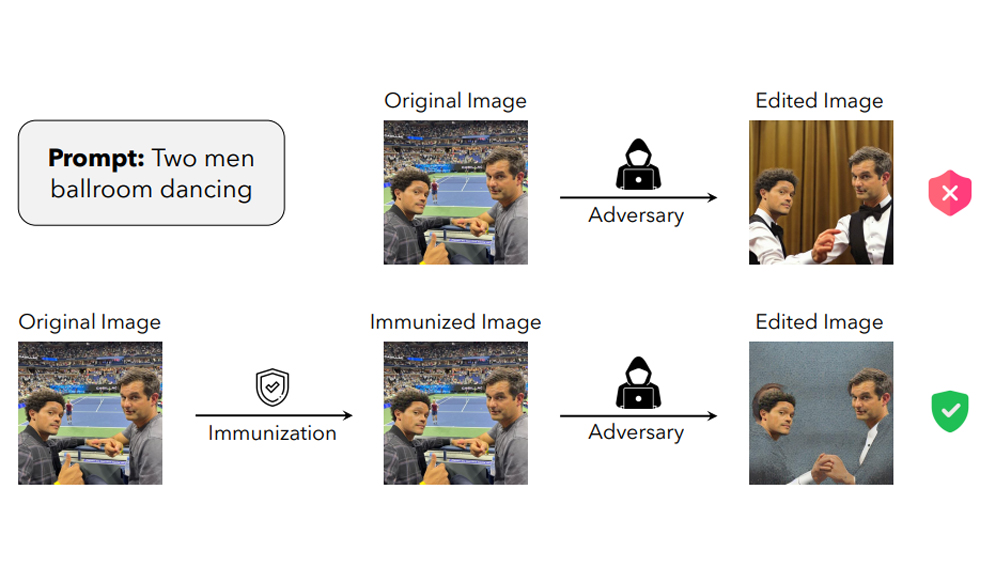

PhotoGuard appears to work in a similar way to Glaze, which we've covered before. An initial "encoder" process subtly alters an image by making tiny changes to its pixels that interfere with AI models' ability to understand what the image shows. The changes are invisible to the human eye but are picked up by AI models, disrupting the model's latent representation of the image (the compressed mathematical description of the image that the model actually works with). In effect, these tiny alterations "immunise" an image by preventing an AI from understanding what it is looking at.
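For readers curious about the mechanics, here is a minimal sketch of the general idea behind this kind of encoder attack, using projected gradient descent, a standard way of crafting small adversarial perturbations. It is not PhotoGuard's code. The real tool targets the encoder of a large image-generation model, whereas here a tiny hypothetical linear `encode` function stands in for that encoder, and the decoy target, the `epsilon` budget and the step size are all illustrative assumptions.

```python
import random

def encode(image, weights):
    # Hypothetical toy encoder: a fixed linear map from pixel values to a short latent vector.
    return [sum(w * p for w, p in zip(row, image)) for row in weights]

def immunise(image, weights, target_latent, epsilon=0.03, step=0.005, iterations=200):
    # Projected gradient descent with an L-infinity budget: nudge the pixels so that
    # encode(image + delta) moves towards target_latent, while no pixel changes by more
    # than epsilon (the "invisible" budget) and all values stay within [0, 1].
    delta = [0.0] * len(image)
    for _ in range(iterations):
        perturbed = [p + d for p, d in zip(image, delta)]
        residual = [z - t for z, t in zip(encode(perturbed, weights), target_latent)]
        # Gradient of 0.5 * ||W(x + delta) - t||^2 with respect to delta is W^T * residual.
        grad = [sum(row[i] * r for row, r in zip(weights, residual)) for i in range(len(image))]
        for i, g in enumerate(grad):
            sign = (g > 0) - (g < 0)
            d = delta[i] - step * sign                  # step against the gradient's sign
            d = max(-epsilon, min(epsilon, d))          # project back into the epsilon box
            d = max(-image[i], min(1.0 - image[i], d))  # keep the pixel within [0, 1]
            delta[i] = d
    return [p + d for p, d in zip(image, delta)]

def distance(a, b):
    # Euclidean distance between two vectors.
    return sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5

if __name__ == "__main__":
    random.seed(0)
    weights = [[random.uniform(-1, 1) for _ in range(16)] for _ in range(4)]
    photo = [random.uniform(0.1, 0.9) for _ in range(16)]  # a 16-pixel "photo"
    decoy = [random.uniform(0.1, 0.9) for _ in range(16)]  # hypothetical decoy image
    target = encode(decoy, weights)
    protected = immunise(photo, weights, target)
    print("max pixel change:", max(abs(a - b) for a, b in zip(protected, photo)))
    print("latent distance to decoy before:", distance(encode(photo, weights), target))
    print("latent distance to decoy after:", distance(encode(protected, weights), target))
```

No pixel moves by more than `epsilon`, yet the image's latent representation is pulled towards the decoy's. In a real system the encoder is a neural network, so the gradient would come from automatic differentiation rather than the hand-derived formula used here.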

A second, more advanced "diffusion" method camouflages an image as something else in the eyes of the AI by optimising the perturbations it applies so that the image resembles a chosen target. When the AI then tries to edit the image, the edits are effectively applied to the "fake" target image instead, producing output that looks unrealistic.

As we've noted before, however, this isn't a permanent solution. The process could be reverse-engineered, allowing the development of AI models immune to the tool's interference.

MIT doctoral student Hadi Salman, the lead author of the PhotoGuard research paper, said: "While I am glad to contribute towards this solution, much work is needed to make this protection practical. Companies that develop these models need to invest in engineering robust immunizations against the possible threats posed by these AI tools."

He called for a collaborative approach involving model developers, social media platforms and policymakers to defend against unauthorised image manipulation. "Working on this pressing issue is of paramount importance today," he said. PhotoGuard's code is available on GitHub. See our pick of the best AI art tutorials to learn more about how AI tools can be used constructively.

Joe is a regular freelance journalist and editor at Creative Bloq. He writes news, features and buying guides and keeps track of the best equipment and software for creatives, from video editing programs to monitors and accessories. A veteran news writer and photographer, he now works as a project manager at the London and Buenos Aires-based design, production and branding agency Hermana Creatives. There he manages a team of designers, photographers and video editors who specialise in producing visual content and design assets for the hospitality sector. He also dances Argentine tango.