Adobe has built its reputation in artificial intelligence on a simple but powerful promise: its Firefly AI models are "commercially safe" because they're trained exclusively on licensed content. It's a compelling pitch for businesses worried about copyright lawsuits, and Adobe has marketed this heavily as its key differentiator in the crowded AI market.

But this week's announcement includes a move that appears to undermine that promise.

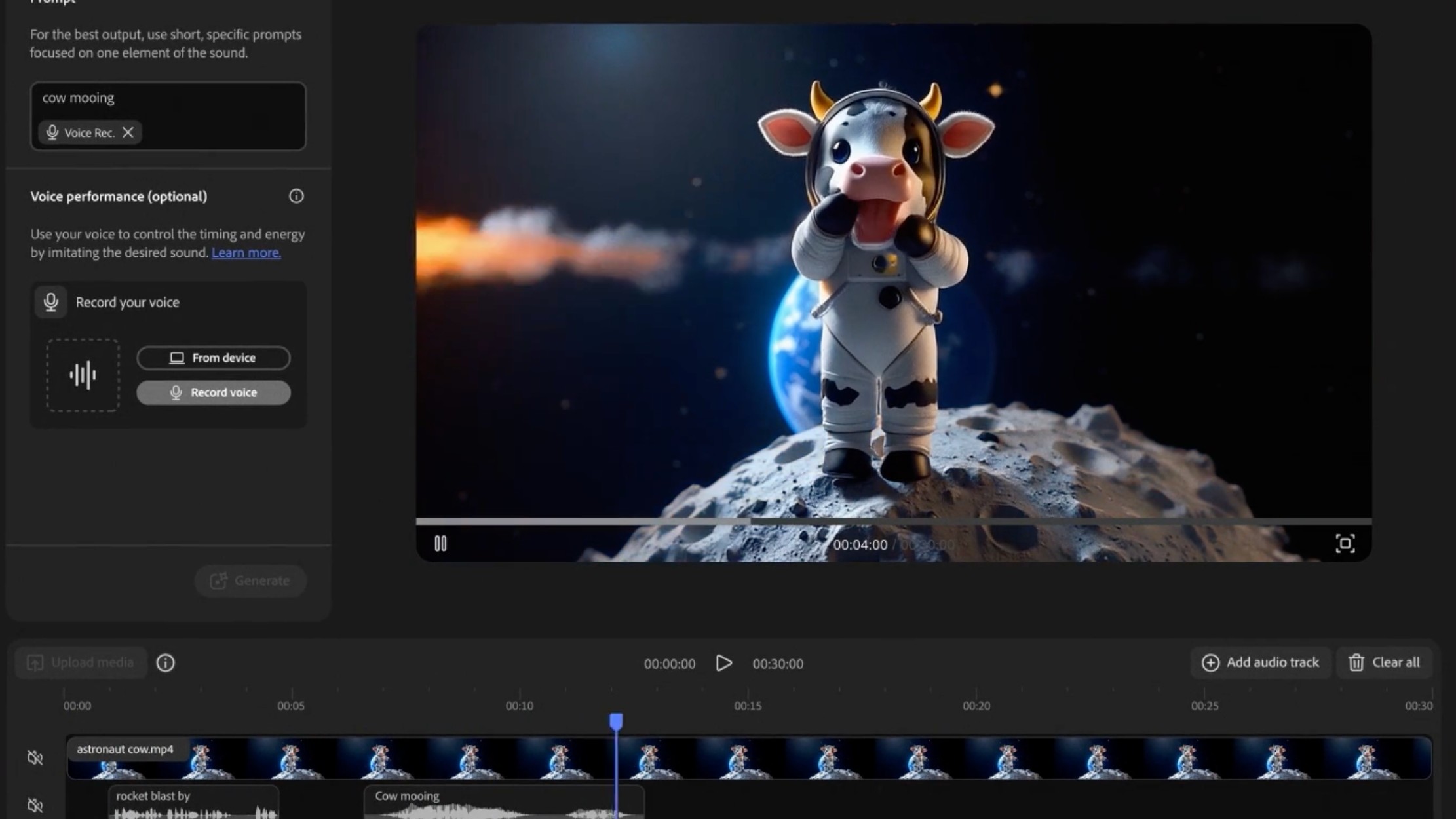

Adobe is now integrating third-party AI platforms, including Runway's Gen-4 Video and Google's Veo3, directly into the Firefly app. Yet these external models weren't built with Adobe's strict licensing standards, creating an apparent contradiction in their "commercially safe" messaging.

What does commercially safe mean?

This isn't just a theoretical concern. If you're a marketing agency creating content for a major client, you want assurance that your AI-generated videos won't trigger a copyright lawsuit. Adobe's promise gave businesses that confidence... or at least, it appeared to.

Now, though, Adobe is offering what it calls "partner models" within the Firefly app. This means customers can choose between Adobe's own Firefly Video Model and third-party options like Runway's Gen-4 Video or Google's Veo3 with Audio. More partnerships are coming soon, including models from Topaz Labs, Moonvalley, Luma AI and Pika.

What exactly does Adobe mean by "commercially safe"? Well, unlike most AI companies, which scrape vast amounts of internet content to train their models, Adobe says it only uses images, videos and audio it has explicit permission to use. This includes stock photos from Adobe Stock, public domain content, and material that's been properly licensed.

But here's the issue: these partner companies haven't made the same training commitments as Adobe. Runway, for instance, has been deliberately vague about their training data, and Google's approach to AI training has involved scraping enormous amounts of web content. These models were built using different standards and potentially different sources of copyrighted material.

When Adobe processes content through its own Firefly models, it can stand behind its licensing claims because it controls the entire pipeline. But when a user selects a third-party model through the Firefly app, Adobe becomes merely a middleman. It can't guarantee the copyright safety of content it didn't create using data it didn't curate.

Adobe's response

When we contacted Adobe, a spokesperson was keen to stress that "Adobe’s Firefly family of creative models, spanning image, video, vector, audio, and 3D, has been designed from the ground up to be commercially safe, with models trained only on content we have the rights to use."

But crucially, in Adobe's mind at least, these 'Firefly models' are distinct from the Firefly app, which is where the third-party services are being integrated. (I was told these integrations are designed to "give customers the freedom to explore a wider range of distinct aesthetic styles and model personalities.")

The spokesperson went on to emphasise several safeguards Adobe has implemented for partner model integration. Most notably, they guarantee that "no matter which model a creator chooses to use within Adobe products, the content they generate or upload is never used to train generative AI models."

Additionally, the spokesperson explained how Adobe automatically attaches Content Credentials to all AI-generated outputs in the Firefly app, which "clearly indicate whether content was created using our commercially safe Firefly model or a partner model".

All of which is very interesting. But none of which, to my mind, addresses the concern that if you're generating content via the Firefly app, it's not necessarily going to be commercially safe. And while Adobe thinks the distinction between 'the Firefly app' and 'the Firefly models' is clear, I'm not convinced it'll be obvious to most people.

It does make business sense

Don't get me wrong; I think Adobe's decision makes business sense, even if it compromises its core message. The AI video generation market is moving incredibly quickly, and Adobe's own models sometimes lag behind competitors in quality and features. By integrating third-party tools, it can offer users the latest capabilities without waiting for its own technology to catch up.

As for "commercial safety" of the Firefly models vs the Firefly app as a whole, Adobe's spokesperson believes that users can see the nuance. "This is how we’re seeing customers who want commercially safe content use the Firefly app," they explained. "Partner models are leveraged during the ideation phase to explore creative directions, but when it’s time to move into production, they turn to Adobe’s commercially safe Firefly models."

I truly hope this is indeed happening, and that no customer is accidentally putting themselves at legal risk while using the Firefly app. But I have to be honest, I've found this all quite confusing, and I doubt I'm alone. So I still think Adobe has its work cut out to ensure everyone using the Firefly app remains "commercially safe".

Tom May is an award-winning journalist specialising in art, design, photography and technology. His latest book, The 50 Greatest Designers (Arcturus Publishing), was published this June. He's also author of Great TED Talks: Creativity (Pavilion Books). Tom was previously editor of Professional Photography magazine, associate editor at Creative Bloq, and deputy editor at net magazine.
