Just when we're starting to come to terms with the power of the latest AI image generators, along comes another advance. On the heels of DALL-E comes Point-E, an AI generator for 3D models with a similar modus operandi.

AI image generators took massive leaps forwards in the past year, allowing anyone to create sometimes stunning imagery from a text prompt. For now they can only create still 2D images, but OpenAI, the company behind DALL-E 2, one of the most popular image generators, has just revealed its latest research into an AI-powered 3D modelling tool... although the results look fairly basic (see how to use DALL-E 2 to get up to speed on OpenAI's image generator).

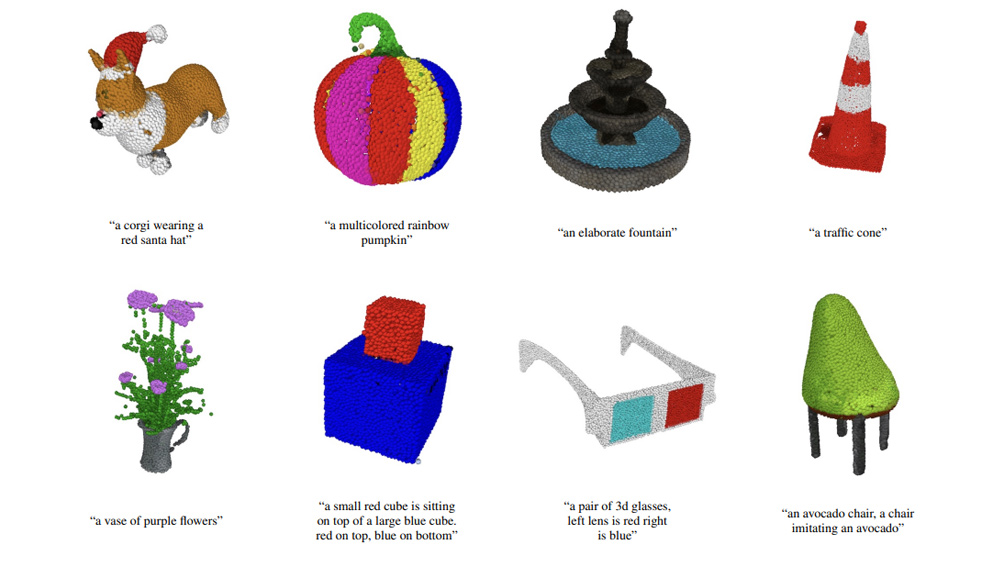

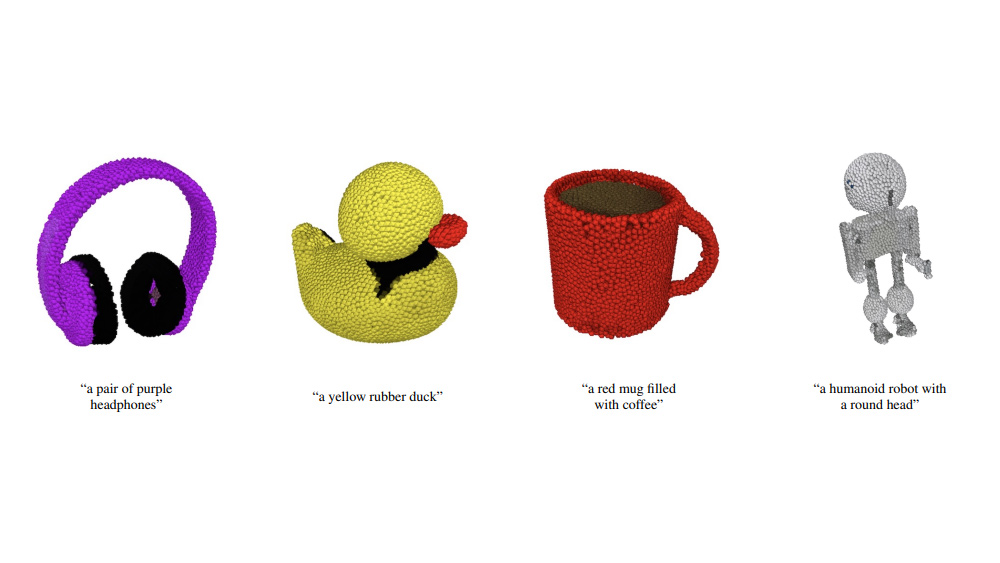

After DALL-E comes Point-E, a model that looks set to bring revolutionary text-to-image tech to 3D modelling. OpenAI says the tool, which has been trained on millions of 3D models, can generate 3D point clouds from simple text prompts. The catch? The resolution is fairly poor.

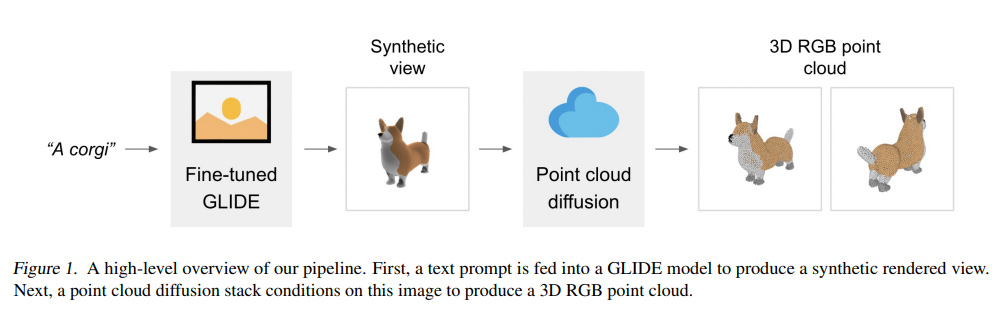

The research paper, authored by a team led by Alex Nichol, explains that, unlike other methods, Point-E's text-to-image model "leverages a large corpus of (text, image) pairs, allowing it to follow diverse and complex prompts, while our image-to-3D model is trained on a smaller dataset of (image, 3D) pairs."

It says: "To produce a 3D object from a text prompt, we first sample an image using the text-to-image model, and then sample a 3D object conditioned on the sampled image." In other words, Point-E first generates a single synthetic view of the object, then feeds that image through a series of diffusion models to create a 3D point cloud with RGB colour: first a coarse 1,024-point cloud, and then a finer 4,096-point cloud.
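For readers who want a feel for how the stages fit together, here is a toy Python outline of the data flow. It is not OpenAI's code: `text_to_image`, `sample_coarse_cloud` and `upsample_cloud` are hypothetical stand-ins that return random placeholders where Point-E would run its diffusion models. Only the order of the stages and the 1,024- and 4,096-point sizes come from the paper's description; the way the stand-in upsampler adds points is an assumption made purely for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Point:
    # Each point in the cloud has a position (x, y, z) and a colour (r, g, b).
    x: float
    y: float
    z: float
    r: float
    g: float
    b: float


def text_to_image(prompt: str) -> dict:
    # Hypothetical stand-in for stage 1, the text-to-image diffusion model.
    # Returns a placeholder "synthetic view" rather than real pixels.
    return {"prompt": prompt, "view": "synthetic rendering"}


def sample_coarse_cloud(image: dict, n_points: int = 1024, seed: int = 0) -> list[Point]:
    # Hypothetical stand-in for stage 2, the image-conditioned base model.
    # Random coordinates in [-1, 1] and random colours in [0, 1].
    rng = random.Random(seed)
    return [
        Point(rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1),
              rng.random(), rng.random(), rng.random())
        for _ in range(n_points)
    ]


def upsample_cloud(image: dict, coarse: list[Point], n_total: int = 4096, seed: int = 1) -> list[Point]:
    # Hypothetical stand-in for stage 3, the upsampler: keeps the coarse
    # points and adds jittered copies of them until n_total points exist.
    rng = random.Random(seed)
    fine = list(coarse)
    while len(fine) < n_total:
        p = rng.choice(coarse)
        fine.append(Point(p.x + rng.gauss(0, 0.01), p.y + rng.gauss(0, 0.01),
                          p.z + rng.gauss(0, 0.01), p.r, p.g, p.b))
    return fine


def text_to_point_cloud(prompt: str) -> list[Point]:
    # Text -> synthetic view -> coarse cloud -> finer cloud.
    image = text_to_image(prompt)
    coarse = sample_coarse_cloud(image)
    return upsample_cloud(image, coarse)


cloud = text_to_point_cloud("a red motorcycle")
print(len(cloud))  # 4096
```

The point of the sketch is the cascade itself: the 3D stages never see the text prompt directly, only the generated image, which is why the paper can train the image-to-3D model on a much smaller (image, 3D) dataset.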

The example results in the research paper may look basic compared with the images that DALL-E 2 can produce, and compared with the output of existing 3D systems. But generating 3D objects is a hugely resource-hungry process: programs like Google's DreamFusion require hours of processing on multiple GPUs.

OpenAI acknowledges that its method produces lower-quality results, but says it generates samples in a fraction of the time (seconds rather than hours) and needs only a single GPU, making 3D modelling more accessible. You can already try it out for yourself, because OpenAI has shared the source code on GitHub.


Joe is a regular freelance journalist and editor at Creative Bloq. He writes news, features and buying guides and keeps track of the best equipment and software for creatives, from video editing programs to monitors and accessories. A veteran news writer and photographer, he now works as a project manager at the London and Buenos Aires-based design, production and branding agency Hermana Creatives. There he manages a team of designers, photographers and video editors who specialise in producing visual content and design assets for the hospitality sector. He also dances Argentine tango.