Sign up to Creative Bloq's daily newsletter, which brings you the latest news and inspiration from the worlds of art, design and technology.

When Adobe announced a Premiere Pro update earlier this week, I initially assumed it would involve text-to-video generative AI. After all, Adobe teased AI video generation in Premiere Pro earlier in the year, and most of its recent updates across Creative Cloud have focused heavily on AI. Instead, we got a new colour management system and UI improvements.

But, perhaps sensing that an update on its AI video plans was due, the software giant has now, rather quietly, revealed a first glimpse of its Firefly AI video model in action. And while AI video raises plenty of concerns, Adobe's approach looks like it could be a game changer for video editors who need to fill gaps or make up for missing footage.

In a blog post, Adobe says Firefly AI video will come to Premiere Pro in beta this year. It says it has been working "with the video editing community" to advance the model and that, based on that feedback, it is now developing workflows that use the model to "help editors ideate and explore their creative vision, fill gaps in their timeline and add new elements to existing footage".

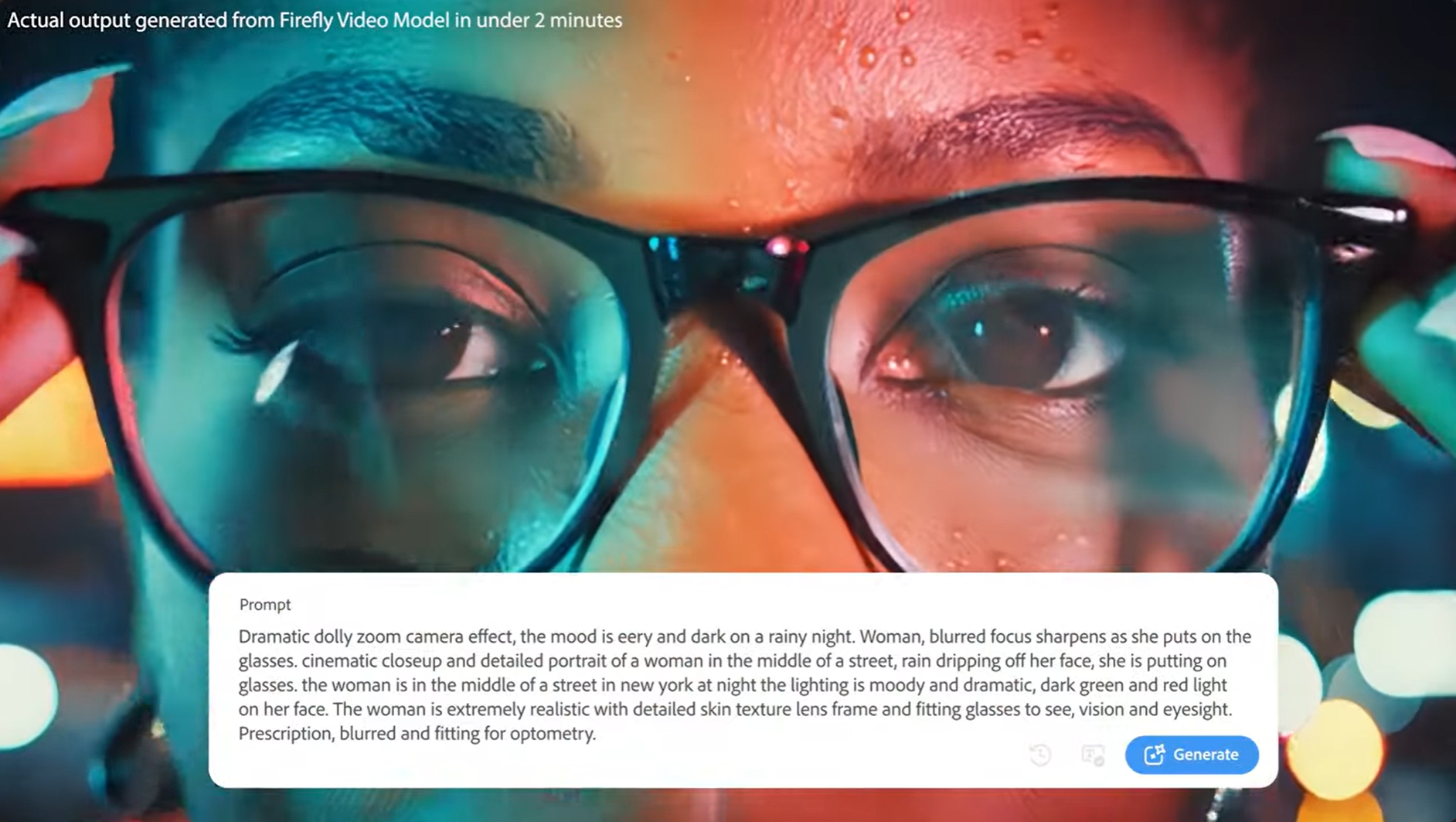

The AI tools will aim to "take the tedium out of post-production" and give editors more time to explore ideas. We'll be able to use text prompts in Firefly Text-to-Video, as well as reference images, to generate B-roll footage. The demonstration shows that the Firefly Video Model will support camera controls, like angle, motion and zoom, to create the desired perspective for the generated video.

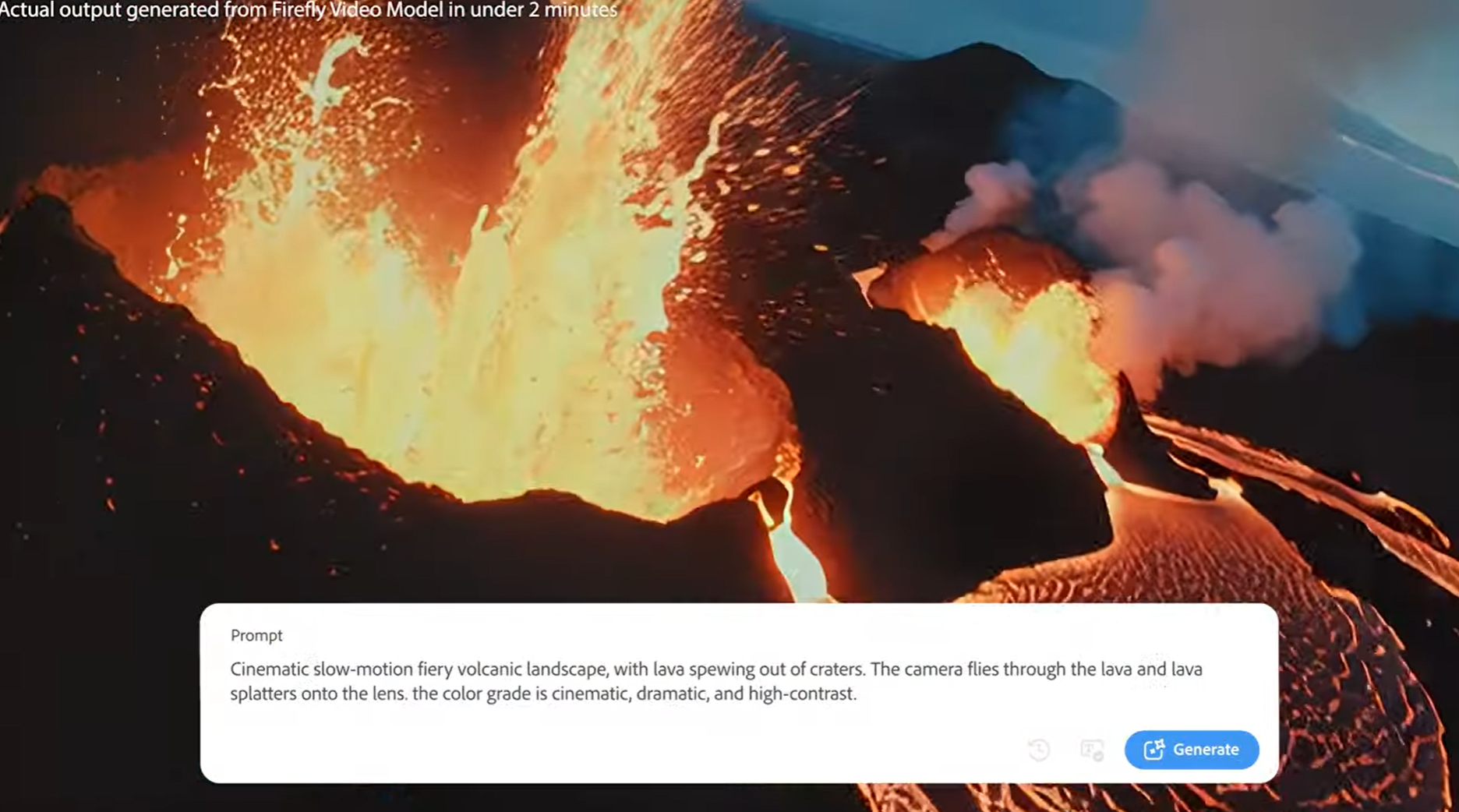

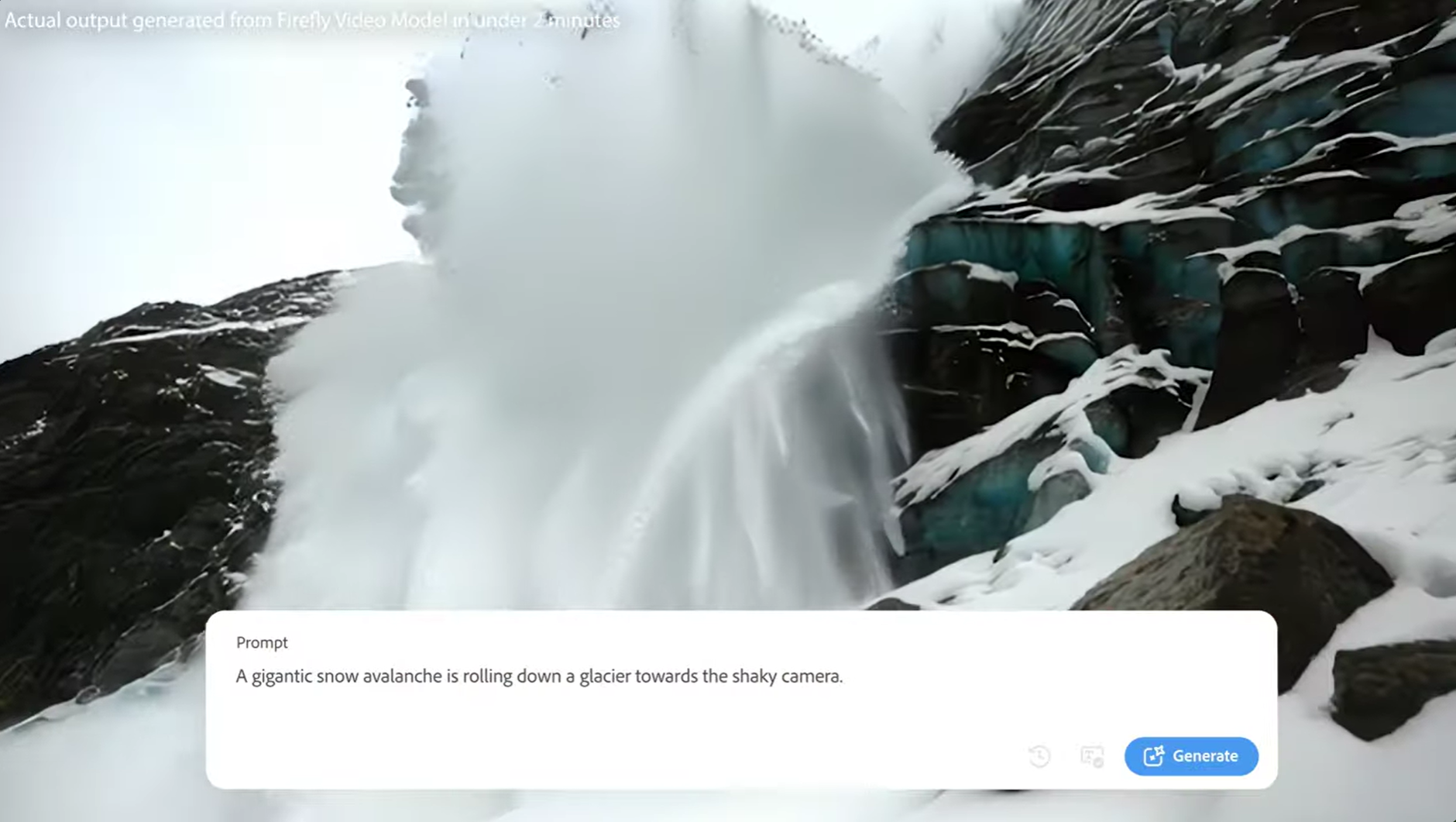

How the Firefly Video Model compares to the likes of Sora and Runway remains to be seen, but Adobe says it does particularly well at generating videos of the natural world, including landscapes, plants and animals. As with many of the best AI image generators, the more detailed the prompt, the more convincing the results tend to be.

The focus so far seems very much on ideation (including in claymation) and on helping production teams that might be missing a key establishing shot to set the scene, or complementary footage to fill a space in a timeline, rather than on using AI to generate entire films or sequences. That could be the healthiest and most useful approach for Adobe users.

The model will also be able to create atmospheric elements like fire, smoke, dust particles and water against a black or green background, for layering over existing content using blend modes or keying in Premiere Pro and After Effects. It will also support Image-to-Video, adding motion to stills. Later in the year, Premiere Pro (beta) will get Generative Extend, allowing clips to be extended to cover gaps in footage, smooth out transitions or hold on shots longer, which sounds like it could make a big difference for editors.

Joe is a regular freelance journalist and editor at Creative Bloq. He writes news, features and buying guides and keeps track of the best equipment and software for creatives, from video editing programs to monitors and accessories. A veteran news writer and photographer, he now works as a project manager at the London and Buenos Aires-based design, production and branding agency Hermana Creatives. There he manages a team of designers, photographers and video editors who specialise in producing visual content and design assets for the hospitality sector. He also dances Argentine tango.