Five smartphone features you never thought of using in your apps

Don't wait for the next big thing: today's phones are already full of sensors developers can use, argues Keith Butters in his guide to creating lateral-thinking mobile apps

The modern mobile space is ripe for innovation. Startups are popping up every day, many of them jumping on the social/local/mobile bandwagon. And sure, plenty of them are doing a very good job of it. But for me, the more interesting innovations will come from applications that look at existing mobile sensors and hardware features with new eyes, instead of rehashing the twice-baked features already present in the application layer.

So stop brainstorming how to 'put a map in there' and open yourself up to the opportunities present in the device you carry with you all day, every day. Here are five killer examples.

1. Location: more than a pin on a map

In iOS, the Core Location framework provides more than latitude and longitude. It also gives you altitude and speed. There are obvious uses for those two properties, as in fitness-tracking apps like RunKeeper. By combining altitude, velocity and type of exercise, RunKeeper tracks the strenuousness of your physical activity. You can then monitor your exercise habits and progress with interactive charts to get a fuller picture of your health. (Yeah, running uphill is much more strenuous.)
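RunKeeper's actual effort model isn't described here, but a rough sketch shows how altitude and speed from consecutive location fixes can become the kind of numbers a fitness app might chart. It's written in Python for brevity. The `Fix` type is a hypothetical stand-in for the `timestamp`, `altitude` (metres) and `speed` (metres per second, negative when invalid) fields of a `CLLocation`. The sketch also assumes constant speed between fixes and treats distance travelled as the horizontal run.

```python
from dataclasses import dataclass


@dataclass
class Fix:
    """Hypothetical stand-in for the CLLocation fields this sketch needs."""
    timestamp: float  # seconds since some reference point
    altitude: float   # metres above sea level
    speed: float      # metres per second; Core Location reports a negative value when invalid


def effort_metrics(prev: Fix, curr: Fix) -> dict:
    """Estimate grade and climb rate between two consecutive fixes."""
    dt = curr.timestamp - prev.timestamp
    if dt <= 0 or curr.speed < 0:
        raise ValueError("need increasing timestamps and a valid speed")
    distance_m = curr.speed * dt              # assumes constant speed between fixes
    climb_m = curr.altitude - prev.altitude   # negative when descending
    grade = climb_m / distance_m if distance_m > 0 else 0.0
    return {
        "grade_percent": grade * 100,
        "climb_rate_m_per_min": climb_m / dt * 60,
    }


# Running at 3 m/s for 10 s while gaining 3 m of altitude: a 10% grade.
print(effort_metrics(Fix(0, 100, 3), Fix(10, 103, 3)))
```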

Why stop there, though? I remember failing miserably to cook a family dinner in Colorado because I did not account for elevation. Could a mobile recipe app detect my altitude and adjust cooking times? What if a hiking app could let me know how far from the treeline I am, or send me a notification with weather details before I attempt to climb to the summit?
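The cooking problem has a well-understood physical cause: water boils at a lower temperature as air pressure drops with altitude. A minimal sketch can estimate that boiling point from the altitude a phone reports. It assumes the International Standard Atmosphere pressure formula and the Clausius-Clapeyron relation with a constant heat of vaporisation. How a recipe app would then turn the result into adjusted cooking times is left open. No particular adjustment rule is implied.

```python
import math

SEA_LEVEL_PRESSURE_PA = 101_325.0
BOILING_POINT_SEA_LEVEL_K = 373.15
HEAT_OF_VAPORISATION_J_PER_MOL = 40_660.0  # water near 100 °C, treated as constant
GAS_CONSTANT_J_PER_MOL_K = 8.314


def pressure_at_altitude_pa(altitude_m: float) -> float:
    """International Standard Atmosphere approximation for the troposphere."""
    return SEA_LEVEL_PRESSURE_PA * (1 - 2.25577e-5 * altitude_m) ** 5.25588


def boiling_point_c(altitude_m: float) -> float:
    """Estimate water's boiling point with the Clausius-Clapeyron relation:
    1/T = 1/T0 - (R / dH) * ln(P / P0)."""
    ratio = pressure_at_altitude_pa(altitude_m) / SEA_LEVEL_PRESSURE_PA
    inv_t = (1 / BOILING_POINT_SEA_LEVEL_K
             - GAS_CONSTANT_J_PER_MOL_K / HEAT_OF_VAPORISATION_J_PER_MOL * math.log(ratio))
    return 1 / inv_t - 273.15


# Roughly Denver's elevation.
print(round(boiling_point_c(1609), 1))
```

At about 1,600 m this estimate lands around 94.5 °C rather than 100 °C. That gap is why a pot of pasta behaves differently in Colorado.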

Fitness apps use your speed to give insight into your running pace and splits. But when you think about speed combined with position from a broader perspective, there are plenty more possibilities. Can my application know if I'm on a train approaching my final destination, and start contacting cabs for me? Could my phone realise that I am driving, and turn on an 'answering machine' to respond to my texts and keep track of who tried to contact me? Could my phone see that I am in the middle of the ocean traveling at 15 knots, but that I am very near the shelf break, and warn me? (Given response speed, probably not the latter...) Look up what a CLLocation object is in iOS – and dream of more than a pin on a map.
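The "am I nearly there?" question comes down to distance over speed. The rough sketch below uses the haversine great-circle distance and the device's reported speed to estimate minutes to arrival. `should_book_cab`, `probably_driving` and their thresholds are hypothetical. A straight-line ETA will also underestimate journeys on winding routes. Speed alone can't tell a car from a train, so a real app would need more context, such as known rail lines.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def minutes_to_arrival(lat, lon, speed_mps, dest_lat, dest_lon):
    """Straight-line ETA in minutes, or None if the speed is invalid (negative) or zero."""
    if speed_mps <= 0:
        return None
    return haversine_m(lat, lon, dest_lat, dest_lon) / speed_mps / 60


def should_book_cab(eta_minutes, lead_time_minutes=10.0):
    """Hypothetical trigger: request a cab once the ETA falls within the lead time."""
    return eta_minutes is not None and eta_minutes <= lead_time_minutes


def probably_driving(recent_speeds_mps, threshold_mps=7.0, min_samples=3):
    """Hypothetical heuristic: a sustained average above ~25 km/h suggests a vehicle.
    Negative speeds (invalid readings) are ignored."""
    valid = [s for s in recent_speeds_mps if s >= 0]
    return len(valid) >= min_samples and sum(valid) / len(valid) > threshold_mps


# Travelling at 20 m/s, about 5.6 km from the destination: roughly 4.6 minutes out.
eta = minutes_to_arrival(0.0, 0.0, 20.0, 0.0, 0.05)
print(eta, should_book_cab(eta))
```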

2. The compass knows more than North

Another interesting thing to think about is the unconventional ways you could use a person's heading or orientation. It’s awesome that developers are helping all of us find the nearest subway station, but what else could we use this data for? After all, you can effectively determine where someone might be looking. This could be amazing when paired with photo apps.

Some apps (Instagram, for example) already use the Foursquare API to tag a location to the photo you upload. But what if the device knew that you're taking a picture at 6am, facing east? Could it recommend a sunrise tag? What if it knew your location, your heading and the time, and could tell you that you just took a picture of a Brooklyn Bridge sunset?
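One way to sketch the sunrise idea is to compare the compass heading with due east or west and then check the local time. On iOS, `CLHeading` offers `trueHeading` in degrees from geographic north. The hour windows and 45° tolerance below are hypothetical placeholders. A serious version would compute the actual sunrise and sunset times, and the sun's azimuth, for the photo's location and date.

```python
from datetime import datetime


def angular_difference(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two compass bearings, in degrees (0 to 180)."""
    diff = (a_deg - b_deg) % 360
    return min(diff, 360 - diff)


def suggest_sky_tag(heading_deg: float, taken_at: datetime, tolerance_deg: float = 45.0):
    """Hypothetical rule: roughly east in the early morning suggests 'sunrise',
    roughly west in the evening suggests 'sunset'. The fixed hour windows are
    placeholders for real sunrise/sunset times at the photo's location and date."""
    hour = taken_at.hour
    if 5 <= hour <= 8 and angular_difference(heading_deg, 90.0) <= tolerance_deg:
        return "sunrise"
    if 17 <= hour <= 21 and angular_difference(heading_deg, 270.0) <= tolerance_deg:
        return "sunset"
    return None


print(suggest_sky_tag(95.0, datetime(2024, 6, 1, 6, 0)))
```

The modulo arithmetic in `angular_difference` matters. Without it, headings of 350° and 10° would look 340° apart instead of 20°.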

We can go even further than that. You could combine location, velocity and direction to see if someone is looking at, or is near, a storefront. Even if the phone were in your pocket, you could use previous velocity measurements (along with the accelerometer) to 'calibrate' the phone’s heading and deliver notices on sales, offers, promotions and events. Or what about a concert app that gave you the name of each song as it was played?
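Whether someone is "looking at" a storefront can be approximated by comparing their heading with the bearing from their position to the store. This sketch uses the standard initial-bearing (forward azimuth) formula together with the haversine distance. The 50 m range and ±30° field of view are hypothetical thresholds. The store's coordinates would come from whatever places database the app uses.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def distance_m(lat1, lon1, lat2, lon2):
    """Haversine great-circle distance in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Forward azimuth from point 1 to point 2, in degrees clockwise from true north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    x = math.sin(dlmb) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    return math.degrees(math.atan2(x, y)) % 360


def is_facing(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
              max_distance_m=50.0, half_fov_deg=30.0):
    """Hypothetical check: the point of interest is close by and within
    half_fov_deg either side of the user's heading."""
    if distance_m(user_lat, user_lon, poi_lat, poi_lon) > max_distance_m:
        return False
    bearing = initial_bearing_deg(user_lat, user_lon, poi_lat, poi_lon)
    diff = (bearing - heading_deg) % 360
    return min(diff, 360 - diff) <= half_fov_deg


# A hypothetical shop roughly 43 m east of the user, who is facing 80 degrees.
print(is_facing(40.0, -74.0, 80.0, 40.0, -73.9995))
```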

3. That little vibrating motor in your phone

I think there’s something amazing about tactile feedback. I thought a lot about this back when the first Force Feedback joysticks came out, and once again when mobile game developers started using 'vibrate' to enhance the experience of an in-game car crash. In a more practical context, I’ve also read about people using vibration for a more seamless implementation of turn-by-turn directions for GPS maps.

It’s a fascinating concept. Imagine being in an unfamiliar city, telling your maps app which museum you want to go to, and then being able to put your phone back into your pocket. One vibration means 'turn left' and two mean 'turn right'. Suddenly, you can enjoy the walk instead of burying your nose in your device and bumping into people (and as a side benefit, look way less like a tourist). The app could also be adapted for elderly users or for people with hearing or visual impairments.
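The one-buzz/two-buzz scheme is easy to express as a timing pattern. This sketch emits alternating off/on durations in milliseconds, starting with an "off" delay. That is the shape Android's `VibrationEffect.createWaveform` accepts. The pulse and gap lengths are hypothetical, and iOS would need a different API (Core Haptics, for example) to play the pattern.

```python
PULSE_MS = 200  # hypothetical length of one buzz
GAP_MS = 150    # hypothetical pause between buzzes

# The article's scheme: one vibration means "turn left", two mean "turn right".
TURN_PULSES = {"left": 1, "right": 2}


def pulse_pattern(pulses: int, pulse_ms: int = PULSE_MS, gap_ms: int = GAP_MS) -> list[int]:
    """Alternating off/on durations in milliseconds, starting with an 'off' entry."""
    pattern: list[int] = []
    for i in range(pulses):
        pattern.append(0 if i == 0 else gap_ms)  # wait before this buzz
        pattern.append(pulse_ms)                  # buzz
    return pattern


def turn_pattern(direction: str) -> list[int]:
    """Look up the pattern for a manoeuvre; raises KeyError for unknown directions."""
    return pulse_pattern(TURN_PULSES[direction])


print(turn_pattern("left"))
print(turn_pattern("right"))
```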

Similarly, what about a reimagining of the old 911 page from forever ago? Could a particular vibration pattern, perhaps the old standard SOS in Morse code, tell you that an SMS message was '911 important'?
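SOS in Morse code (three short, three long, three short) maps naturally onto the same kind of off/on timing list. This sketch follows the standard Morse timing ratios: a dash is three units long, one unit separates symbols within a letter, and three units separate letters. The 100 ms unit is a hypothetical choice, and the lookup table only covers the letters SOS needs.

```python
MORSE = {"S": "...", "O": "---"}  # deliberately partial table: only what SOS needs


def morse_vibration(text: str, unit_ms: int = 100) -> list[int]:
    """Encode letters as an off/on timing list (starting with 'off') using Morse ratios:
    dot = 1 unit, dash = 3 units, 1 unit between symbols, 3 units between letters."""
    pattern: list[int] = []
    for letter_index, letter in enumerate(text.upper()):
        for symbol_index, symbol in enumerate(MORSE[letter]):
            if symbol_index > 0:
                gap = unit_ms          # pause between symbols of the same letter
            elif letter_index > 0:
                gap = 3 * unit_ms      # pause between letters
            else:
                gap = 0                # start vibrating immediately
            pattern += [gap, unit_ms if symbol == "." else 3 * unit_ms]
    return pattern


print(morse_vibration("SOS"))
```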

4. Accelerometer, gyroscope... and much more?

The most common uses of the iPhone's accelerometer and gyroscope are orienting your display so it’s readable, acting as a game controller (like a steering wheel in a driving game) and powering augmented reality implementations like acrossair's New York Nearest Subway.

But in what other situations could they be used? For one thing, you could use them to measure the height of waves when you’re out on the ocean in a boat. Instead of using guesswork to determine you’re in four-foot swells, you could let your phone do it automatically. Or, what about using your mobile device as a personal flight data recorder? What if a phone detects a sudden shift in acceleration followed by a period of no activity? It could be that the owner has suffered some form of accident. While it probably wouldn’t be a good idea to call 911 right away, an app like that could be a part of an overall monitoring system, perhaps for hikers who like roughing it in the wild alone, or for skiers braving treacherous slopes.
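The "flight data recorder" idea is essentially the impact-then-stillness heuristic used in fall detection. This minimal sketch assumes accelerometer samples in units of g that include gravity, as Core Motion reports them. It flags a spike above a threshold that is followed by a window in which total acceleration stays close to 1 g, meaning the phone is lying still. The 3 g spike, 10-second window and 0.1 g tolerance are hypothetical, unvalidated values.

```python
import math


def magnitude_g(sample):
    """Total acceleration of an (x, y, z) sample, in g."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def find_impact_then_stillness(samples, rate_hz, impact_g=3.0,
                               still_seconds=10.0, still_tolerance_g=0.1):
    """Return the index of the first impact that is followed by a full window of
    stillness, or None. Samples are (x, y, z) accelerometer readings in g,
    including gravity, so a phone lying still reads close to 1 g in total.
    All thresholds are hypothetical placeholders."""
    window = int(still_seconds * rate_hz)
    mags = [magnitude_g(s) for s in samples]
    for i, m in enumerate(mags):
        if m < impact_g:
            continue
        after = mags[i + 1 : i + 1 + window]
        if len(after) == window and all(abs(a - 1.0) <= still_tolerance_g for a in after):
            return i
    return None


# Simulated 10 Hz trace: walking, one hard jolt, then the phone lies motionless.
walking = [(0.3, 0.2, 1.1)] * 30
impact = [(2.5, 2.0, 1.5)]
still = [(0.0, 0.0, 1.0)] * 120
print(find_impact_then_stillness(walking + impact + still, rate_hz=10))
```

Requiring the full stillness window before returning is the design choice that keeps a dropped-then-picked-up phone from raising an alarm.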

5. A camera is for more than taking photos

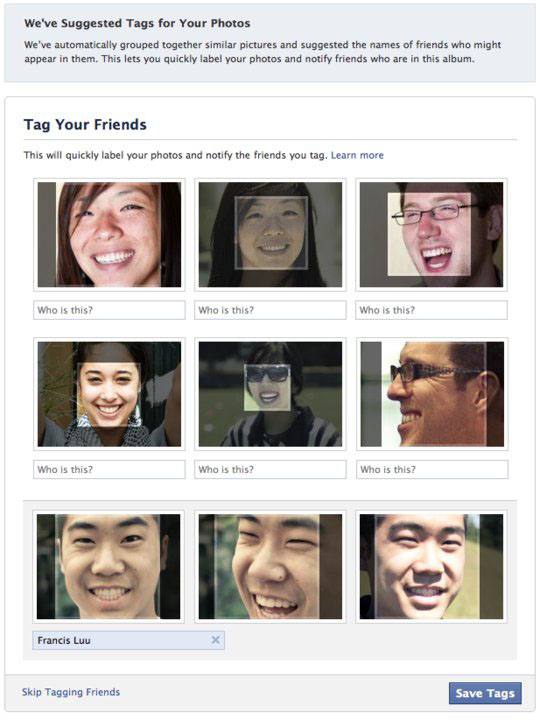

A lot of new image-analysis technology is making its way into the mobile space. Cameras now come with facial-recognition software. Google and Facebook have invested in image-analysis software for their social platforms. Soon we’ll be able to point our phone cameras at people and see their name, social network profile and virtual business card. The days of sitting down at a dinner party and forgetting the name of the person across from you will be gone.

But what else? There’s certainly a lot of potential here for marketers. Auto-tagging photos with the names of the products in them could be huge. (There are lots of pictures of me out there holding a can of Dr Pepper. And no, I’m not affiliated, nor am I an investor.) Brands will be able to extract value there, as will social platforms. When algorithms determine what we see in our social feed, they can be manipulated by brands. So maybe my feed will be full of images of my friends drinking some other soda. Or maybe I’ll be served public service advertising telling me to stop drinking soda (and I really should). And of course, in the mobile space, these things can all happen in real time.

Getting creative with the tools we've got

If hardware development stopped today, we would still have 25 years of amazing things to create, based on the technology that’s already available. Fortunately, the future of hardware is bright, but I would urge people to push the devices in our pockets to their limits, rather than waiting for the next feature to come along. We should be responding to life and creating applications that enrich it, not responding to new technology merely because it is new. Let’s be creative. There are more possibilities now than ever before.

The Creative Bloq team is made up of a group of art and design enthusiasts, and has changed and evolved since Creative Bloq began back in 2012. The current website team consists of eight full-time members of staff: Editor Georgia Coggan, Deputy Editor Rosie Hilder, Ecommerce Editor Beren Neale, Senior News Editor Daniel Piper, Editor, Digital Art and 3D Ian Dean, Tech Reviews Editor Erlingur Einarsson, Ecommerce Writer Beth Nicholls and Staff Writer Natalie Fear, as well as a roster of freelancers from around the world. The ImagineFX magazine team also pitch in, ensuring that content from leading digital art publication ImagineFX is represented on Creative Bloq.