How to make your sites load faster

Your website’s visitors care whether or not it loads quickly. Tom Gullen explains how to make sites render faster, and why you should be doing this.

- Knowledge needed: Intermediate CSS and JavaScript, basic HTML5

- Requires: A website to speed up

- Project Time: Highly dependent on website

This article first appeared in issue 231 of .net magazine

Speed should be important to every website. It’s a well-known fact that Google uses site speed as a ranking metric for search results. This tells us that visitors prefer fast websites – no surprise there!

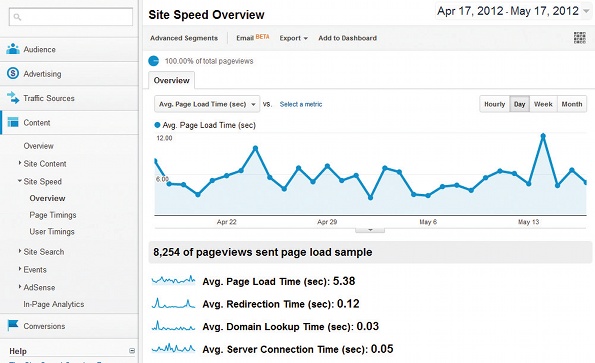

Jakob Nielsen wrote in 1993 about the three limits of response times; although the research is old by internet standards, our psychology hasn’t changed much in the intervening 19 years. He states that if a system responds in under 0.1 seconds it will be perceived as instantaneous, while responses faster than one second enable the user’s thought flow to remain uninterrupted. Having a web page load in 0.1 seconds is probably impossible; around 0.34 seconds represents Google UK’s best load time so this serves as a more realistic (albeit ambitious) benchmark. A page load somewhere in the region of 0.34 to 1 second is achievable and important.

01. The price of slowing down

These sorts of targets have real world implications for your website and business. Google’s Marissa Mayer spoke in 2006 about an experiment in which the number of results returned by the search engine was increased to 30. This slowed down the page load time by around 500ms, with a 20% drop in traffic being attributed to this. Amazon, meanwhile, artificially delayed the page load in 100ms increments and found that “even very small delays would result in substantial and costly drops in revenue”.

Other adverse associations linked with slow websites include lessened credibility, lower perceived quality and the site being seen as less interesting and attractive. Increased user frustration and increased blood pressure are two other effects we probably have all experienced at some point! But how can we make sure our websites load speedily enough to avoid these issues?

One of the first things to look at is the size of your HTML code. This is probably one of the most overlooked areas, perhaps because people assume it’s no longer so relevant with modern broadband connections. Some content management systems are fairly liberal with the amount they churn out – one reason why it can be better to handcraft your own sites.

As a guideline, you should easily be able to fit most pages in under 50KB of HTML code, and if you’re under 20KB then you’re doing very well. There are obviously exceptions, but this is a fairly good rule of thumb.

It’s also important to bear in mind that people are browsing full websites more frequently on mobile devices now. Speed differences between sites viewed from a mobile are often more noticeable, owing to them having slower transfer rates than wired connections. Two competing websites with a 100KB size difference per page can mean more than one second load time difference on some slow mobile networks – well into the ‘interrupted thought flow’ region specified by Jakob Nielsen. The trimmer, faster website is going to be a lot less frustrating to browse, giving a distinct competitive edge over fatter websites and going a long way towards encouraging repeat visits.
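The arithmetic behind that one-second figure can be sketched as follows. The 0.8 Mbit/s throughput is an assumed value for a slow mobile connection, not a measurement from this article, and `transferSeconds` is a hypothetical helper name.

```javascript
// Estimate how long a payload takes to transfer at a given throughput.
// Ignores latency and connection setup, so treat the result as a lower bound.
function transferSeconds(sizeKB, throughputMbps) {
  const bits = sizeKB * 1024 * 8;             // kilobytes -> bits
  const bitsPerSecond = throughputMbps * 1e6; // megabits/s -> bits/s
  return bits / bitsPerSecond;
}

// Assumed throughput for a slow mobile network (hypothetical figure).
const SLOW_MOBILE_MBPS = 0.8;

const extra = transferSeconds(100, SLOW_MOBILE_MBPS);
console.log(`An extra 100KB costs about ${extra.toFixed(2)}s`);
```

At that assumed rate the extra 100KB alone takes just over a second, before any latency is counted.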

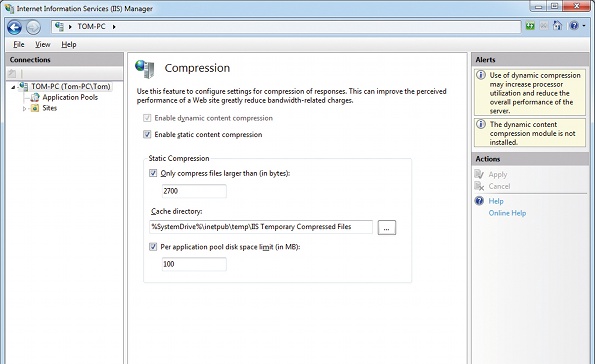

One important feature of most web servers is the ability to serve HTML in a compressed format. Because HTML by nature contains a lot of repeating data, it is a perfect candidate for compression. For example, one homepage’s 18.1KB of HTML is reduced to 6.3KB when served in compressed format. That’s a 65 per cent saving! Compression algorithms become more efficient the larger the body of text they have to work with, so you will see larger savings with larger HTML pages. A 138.1KB page on a popular forum is reduced to 25.7KB when served compressed, a saving of over 80 per cent, which can significantly improve total transfer times of resources.
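To get a feel for these savings, the following minimal sketch measures gzip compression on a piece of repetitive markup using Node’s built-in `zlib` module. The sample markup and the `gzipSavingPercent` helper are made up for illustration; your own pages will give different figures.

```javascript
const zlib = require("zlib");

// Return the original size, compressed size and percentage saved by gzip.
function gzipSavingPercent(text) {
  const original = Buffer.byteLength(text, "utf8");
  const compressed = zlib.gzipSync(text).length;
  return { original, compressed, saving: (1 - compressed / original) * 100 };
}

// Hypothetical markup: a list with many structurally identical rows.
const rows = Array.from({ length: 200 }, (_, i) =>
  `<li class="item"><a href="/post/${i}">Post number ${i}</a></li>`);
const html = `<ul>${rows.join("\n")}</ul>`;

const result = gzipSavingPercent(html);
console.log(`${result.original} bytes -> ${result.compressed} bytes ` +
  `(${result.saving.toFixed(1)}% saved)`);
```

Running the same function over a saved copy of one of your own pages is a quick way to estimate what enabling compression on the server would save.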

There are virtually no negatives to serving HTML in this form; everyone should be enabling it for all their HTML content. Some web servers have different settings for compressing static and dynamically generated content, so it’s worth ensuring you’re serving compressed content for both if possible.

02. Content delivery networks

Content delivery networks (known as CDNs) can also significantly improve load times for your website. CDNs are a collection of servers distributed across the globe that all hold copies of your content. When a user requests an image from your website that’s hosted on a CDN, the server in the CDN geographically closest to the user will be used to serve the image.

There are a lot of CDN services available. Some of these are very costly but advertise that they will offer better performance than cheaper CDNs. Free CDN services have also started cropping up, and may be worth experimenting with to see if they can improve performance on your website.

One important consideration when using a CDN is to set it up correctly so you don’t lose any SEO value. Depending on the nature of your website, you may be receiving a lot of traffic from images hosted on your domain, and moving them to an external domain might adversely affect that traffic. Amazon’s S3 and CloudFront services enable you to point a subdomain of your own at them, which is a highly desirable feature in a CDN.

Serving content from a different domain (such as a CDN), or from a subdomain of your own domain that doesn’t set cookies, has another key benefit. When a cookie is set on a domain, the browser sends the cookie data with every request for a resource on that same domain. More often than not, cookie data is not required for static content such as images, CSS or JavaScript files. Web users’ upload rates are often much slower than their download rates, so this extra data can in some cases cause a significant slowdown in page load times. When you use a different domain name to serve your static content, browsers will not send this unnecessary cookie data, because cookies are only sent to the domain they were set for. This can speed up the request times significantly for each resource.

Cookies can also take up most of an HTTP request. Around 1,500 bytes is the most commonly used single-packet limit on large networks, so if you keep your HTTP requests under this limit the entire request should be sent in one packet. This can improve page load times. Google recommends that your cookies should be less than 400 bytes in size, which goes a long way towards keeping your website’s HTTP requests under the one-packet, 1,500-byte limit.
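As a rough way to check a request against these two budgets, the sketch below builds the text of a simple GET request and measures it. The helper names, the header values and the example cookie are all hypothetical, and the check ignores the TCP/IP headers that also take up part of each packet, so the real limit is somewhat tighter.

```javascript
// Single-packet limit and cookie budget taken from the text above.
const PACKET_LIMIT_BYTES = 1500;
const COOKIE_BUDGET_BYTES = 400;

// Build the raw text of a simple GET request and measure it in bytes.
function requestSizeBytes(path, headers) {
  const lines = [`GET ${path} HTTP/1.1`];
  for (const [name, value] of Object.entries(headers)) {
    lines.push(`${name}: ${value}`);
  }
  // Header lines end with CRLF, and a blank line ends the header block.
  const raw = lines.join("\r\n") + "\r\n\r\n";
  return Buffer.byteLength(raw, "utf8");
}

// Report whether the cookie and the whole request stay within budget.
function checkRequest(path, headers) {
  const cookieBytes = Buffer.byteLength(headers.Cookie || "", "utf8");
  const totalBytes = requestSizeBytes(path, headers);
  return {
    totalBytes,
    cookieBytes,
    cookieWithinBudget: cookieBytes <= COOKIE_BUDGET_BYTES,
    fitsInOnePacket: totalBytes <= PACKET_LIMIT_BYTES,
  };
}

// Example: an oversized (made-up) cookie pushes the request past one packet.
const report = checkRequest("/images/logo.png", {
  Host: "www.example.com",
  "User-Agent": "ExampleBrowser/1.0",
  Cookie: "session=" + "x".repeat(1600),
});
console.log(report);
```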

03. Further techniques

There are other, easier-to-implement techniques that can offer great benefits to your site’s speed. One is to put your JavaScript files at the end of your HTML document, just before the closing body tag, because a script blocks the rendering of everything after it while it is fetched and executed. A related constraint is that browsers limit how many resources they can download in parallel from the same host.

The original HTTP/1.1 specification, written in 1999, recommends that browsers should only download up to two resources in parallel from each hostname. Modern browsers, however, have a default limit of around six. If your web page has more than six external resources (such as images, JavaScript or CSS files), you may get better performance by serving them from multiple domains (such as a subdomain on your main domain name, or a CDN) so that the browser does not hit its limit on parallel downloads.
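One way to spread resources across hostnames is to derive the host from the resource path, so that a given file is always requested from the same place and stays cached. This is only a sketch: the `static1` and `static2` hostnames are placeholders, and `hashPath` and `shardUrl` are illustrative names rather than part of any library.

```javascript
// Hypothetical shard hostnames; substitute subdomains you actually control.
const SHARDS = ["static1.example.com", "static2.example.com"];

// Simple deterministic string hash (not cryptographic).
function hashPath(path) {
  let hash = 0;
  for (let i = 0; i < path.length; i++) {
    hash = (hash * 31 + path.charCodeAt(i)) >>> 0; // keep as unsigned 32-bit
  }
  return hash;
}

// Always map the same path to the same host so browser caches stay useful.
function shardUrl(path, shards = SHARDS) {
  const host = shards[hashPath(path) % shards.length];
  return `http://${host}${path}`;
}

console.log(shardUrl("/img/header.png"));
console.log(shardUrl("/css/site.css"));
```

Picking a host at random on each page view would defeat caching, because the same image would appear under a different URL each time.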

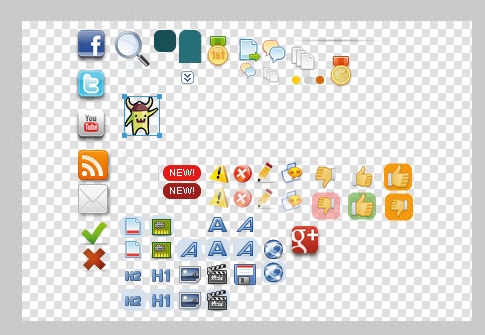

Rather than splitting multiple requests onto different domains, you may consider combining them. Every HTTP request has an overhead associated with it. Dozens of images such as icons on your website served as separate resources will create a lot of wasteful overhead and cause a slowdown on your website, often a significant one. By combining your images into one image known as a ‘sprite sheet’ you can reduce the number of requests required. To display the image you define it in CSS by setting an element’s width and height to that of the image you want to display, then setting the background to the sprite sheet. By using the background-position property we can move the background sprite sheet into position so it appears on your website as the intended image.
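The positioning described above can be sketched as a small helper that generates the CSS rule for one icon. It assumes a sprite sheet laid out as a regular grid of equally sized icons, numbered left to right and top to bottom; `spriteRule`, the selector and the file path are made up for illustration.

```javascript
// Build a CSS rule that shows one icon from a grid-based sprite sheet.
// Assumes all icons are square, the same size, and packed row by row.
function spriteRule(selector, sheetUrl, iconSize, columns, index) {
  const col = index % columns;
  const row = Math.floor(index / columns);
  // Negative offsets shift the sheet left and up so the wanted icon shows.
  const x = col === 0 ? "0" : `-${col * iconSize}px`;
  const y = row === 0 ? "0" : `-${row * iconSize}px`;
  return [
    `${selector} {`,
    `  width: ${iconSize}px;`,
    `  height: ${iconSize}px;`,
    `  background: url("${sheetUrl}") no-repeat;`,
    `  background-position: ${x} ${y};`,
    `}`,
  ].join("\n");
}

// Sixth icon (index 5) on a sheet that is four 16px icons wide.
console.log(spriteRule(".icon-mail", "/img/icons.png", 16, 4, 5));
```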

Sprite sheets also offer other benefits. If you’re using mouseover images, storing them on the same sprite sheet means that when the mouseover is initiated there is no delay because the mouseover image has already been loaded in the sprite sheet! This can significantly improve the user’s perceived loading time and create a much more responsive feeling website.

Specifying the dimensions of any other images in <img /> tags is also an important factor in improving your web page’s perceived loading time. It’s common for developers not to set width and height explicitly for images on pages. This can cause the page’s size to expand in jumps as each image (partially) loads, making things feel sluggish. If explicit dimensions are set, the browser can reserve space for the image as it loads, stopping the page size changing and sometimes significantly improving the user’s perceived loading time.

So what else can we do to improve this? Prefetching is one such feature available in HTML5. Prefetching enables loading of pages and resources before the user has actually requested them. Its support is currently limited to Firefox and Chrome (with an alternative syntax). However, its ease of implementation and usefulness in improving the perceived loading time of your web page is so great that it’s something to consider implementing.

```html
<!-- Firefox prefetching -->
<link rel="prefetch" href="http://www.example.com/page2.html">

<!-- Chrome prerender -->
<link rel="prerender" href="http://www.example.com/page2.html">

<!-- Both in one line -->
<link rel="prefetch prerender" href="http://www.example.com/page2.html">
```

There is a behavioural difference between prefetching and prerendering. Mozilla’s prefetch will load the top-level resource for a given URL, commonly the HTML page itself, and that’s where the loading stops. Google’s prerender loads child resources as well, and in Google’s words “does all of the work necessary to show the page to the user, without actually showing it until the user clicks”.

04. Prefetching and prerendering considerations

But using this feature also comes with important considerations. If you prerender or prefetch too many assets or pages, the user’s entire browsing experience may suffer, and any server-side statistics you keep can become heavily skewed. If the user doesn’t click the preloaded resource and exits your website, your stats tracker may count the visit as two page views rather than the actual one. This can be misleading for important metrics such as bounce rates.

Chrome’s prerender has another caveat developers need to be aware of: the prerendered page will execute JavaScript. The prerender will load the page almost exactly as if the link had been clicked by the user. No special HTTP headers are sent by Chrome with a prerender; however, the Page Visibility API enables you to tell whether the page is being prerendered. This is crucially important for any third-party scripts that you’re using, such as advertising scripts and statistics trackers (Google Analytics already makes use of the Page Visibility API, so you don’t have to worry about that). Failing to handle these scripts properly with the Page Visibility API again runs the risk of skewing important metrics.
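A minimal sketch of that check might look like the following. It assumes the browser reports a `visibilityState` of `"prerender"` while prerendering and fires `visibilitychange` once the page is shown; older browsers may expose these under a vendor prefix, and others may never report `prerender`. The `track` callback is a hypothetical stand-in for your own statistics code.

```javascript
// Decide whether a page view should be recorded yet.
// `doc` is any object with a `visibilityState` property, such as `document`.
function shouldCountPageView(doc) {
  return doc.visibilityState !== "prerender";
}

// Record the view now, or wait until the prerendered page is actually shown.
function trackWhenVisible(doc, track) {
  if (shouldCountPageView(doc)) {
    track();
    return;
  }
  const onChange = () => {
    if (shouldCountPageView(doc)) {
      doc.removeEventListener("visibilitychange", onChange);
      track();
    }
  };
  doc.addEventListener("visibilitychange", onChange);
}

// Only wire this up when running in a browser.
if (typeof document !== "undefined") {
  trackWhenVisible(document, () => console.log("page view counted"));
}
```

If the user never clicks through, the callback never runs, so the prerendered page is not counted as a view.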

Using prefetch and prerender on paginated content is probably a safe and useful implementation – for example on a tutorials web page that is split into multiple sections. Especially on content like tutorials it’s probably important to keep within Nielsen’s ‘uninterrupted thought flow’ boundaries.

Google Analytics can also give valuable clues as to which pages you may want to prerender/prefetch. Using its In-Page Analytics you can determine which link on your homepage is most likely to be clicked. In some cases with highly defined calls to action this percentage might be extremely high – which makes it an excellent candidate for preloading.
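Once you have click percentages for the links on a page, choosing what to preload can be as simple as the sketch below. The 50 per cent threshold, the sample figures and the function names are assumptions for illustration; the data itself would come from your own analytics.

```javascript
// Pick the link most worth preloading, or null if none is popular enough.
// `clickShares` maps URL -> fraction of visitors who clicked it (0 to 1).
function pickPreloadCandidate(clickShares, minShare = 0.5) {
  let best = null;
  for (const [url, share] of Object.entries(clickShares)) {
    if (share >= minShare && (best === null || share > best.share)) {
      best = { url, share };
    }
  }
  return best ? best.url : null;
}

// Build the combined prefetch/prerender hint shown earlier in the article.
function preloadTag(url) {
  return `<link rel="prefetch prerender" href="${url}">`;
}

// Made-up click shares for a homepage with one strong call to action.
const homepageClicks = { "/signup": 0.72, "/about": 0.08, "/blog": 0.11 };
const candidate = pickPreloadCandidate(homepageClicks);
if (candidate) console.log(preloadTag(candidate));
```

Returning `null` when no link clears the threshold keeps you from preloading pages that most visitors will never open.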

Both prefetching and prerendering work cross-domain – an unusually liberal stance for browsers, which are usually extremely strict on cross-domain access. However, this probably works in Google’s and Mozilla’s favour because they are able to create a faster browsing experience for their users in several ways, offering a significant competitive edge over other browsers that don’t yet support such features.

Prefetching and especially prerendering are powerful tools that can significantly improve the perceived load times of web pages. But it’s important to understand how they work so your users’ browsing experience is not directly and negatively affected.

05. Ajax content loading

Another way to improve loading times is to use Ajax to load content as opposed to loading the entire page again. This is more efficient because it only loads the changes, not the boilerplate surrounding the content each time.

The problem with a lot of Ajax loading is that it can feel like an unnatural browsing experience. If it is not executed properly, the back and forward buttons won’t work as the user expects, and actions such as bookmarking or refreshing the page also behave in unexpected ways. When designing websites it’s advisable not to interfere with low-level behaviours such as these, because doing so is very disconcerting and unfriendly to users. A prime example is the lengths some websites go to in order to disable right-clicking on their web pages, in a futile attempt to prevent copyright violations. Although Ajax isn’t implemented with the same intention of interfering with the browser as disabling right-clicking is, the effects are similar.

HTML5 goes some way to address these issues with the History API. It is well supported in browsers (apart from Internet Explorer, though support is planned for IE10). Working with the HTML5 History API we can load content with Ajax, while at the same time simulating a ‘normal’ browsing experience for users. When used properly the back, forward and refresh buttons all work as expected. The address bar URL can also be updated, meaning that bookmarking works properly again. If implemented correctly you can strip away a lot of repeated loading of resources, as well as having graceful fallbacks for browsers with JavaScript disabled.
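The core of this pattern can be sketched in a few lines. The example below is a simplified outline rather than a complete implementation: `createAjaxNavigator`, the `loadContent` callback and the `data-ajax` attribute are hypothetical names, and the actual Ajax request and page update are left as a placeholder.

```javascript
// Create navigation handlers around a History-like object.
// `historyApi` needs pushState(); `loadContent` is a hypothetical function
// that fetches a URL with Ajax and swaps the result into the page.
function createAjaxNavigator(historyApi, loadContent) {
  return {
    // Call when the user clicks an internal link.
    go(url) {
      loadContent(url);
      historyApi.pushState({ url }, "", url); // also updates the address bar
    },
    // Call from the popstate event (back and forward buttons).
    onPopState(event) {
      if (event.state && event.state.url) {
        loadContent(event.state.url);
      }
    },
  };
}

// Browser wiring; skipped where the History API is missing, so links
// fall back to normal full page loads.
if (typeof window !== "undefined" && window.history && window.history.pushState) {
  const nav = createAjaxNavigator(window.history, (url) => {
    console.log("would load", url); // replace with a real Ajax request
  });
  window.addEventListener("popstate", (event) => nav.onPopState(event));
  document.addEventListener("click", (event) => {
    const link = event.target.closest("a[data-ajax]"); // hypothetical opt-in attribute
    if (!link) return;
    event.preventDefault();
    nav.go(link.getAttribute("href"));
  });
}
```

Because the links remain ordinary `href` links, browsers without JavaScript or without the History API simply load the pages in the usual way.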

There is a big downside however: depending on the complexity and function of the site you are trying to build, implementing Ajax content loading with the History API in a way that is invisible to the user is difficult. If the site uses server-side scripting as well, you may also find yourself writing things twice: once in JavaScript and again on the server – which can lead to maintenance problems and inconsistencies. It can be difficult and time consuming to perfect, but if it does work as intended you can significantly reduce actual as well as perceived load times for the user.

When attempting to improve the speed of your site you may run into some unsolvable problems. As mentioned at the start of this article it’s no secret that Google uses page speed as a ranking metric. This should be a significant motivation to improve your site’s speed. However, you may notice that when you use resources such as Google Webmaster Tools’ page speed reports they will report slower load times than you would expect.

The cause can be third-party scripts such as Facebook Like buttons or Tweet buttons. These can often have wait times in the region of hundreds of milliseconds, which can drag your entire website load time down significantly. But this isn’t an argument to remove these scripts – it’s probably more important to have the social media buttons on your website. These buttons usually occupy relatively small spaces on your page, so will not significantly affect the visitor’s perceived loading time – which is what we should primarily be catering for when making speed optimisations.

Tom Gullen is a founder of Scirra Ltd, a startup that builds game creation tools: www.scirra.com/
