How Framestore redesigned RoboCop for the 21st century

Andy Walker describes how Framestore brought the iconic sci-fi character back to the big screen.

RoboCop arrived on Framestore's doorstep as a very well-developed pre-production.

Director José Padilha had been working with the studio, MGM, VFX supervisor Jamie Price, Legacy Effects, Mr X and a great concept team to produce a large body of physical props, animation tests and artwork ready for the imminent shoot in Toronto, Canada.

There were concepts for almost everything and highly detailed 3D concept models for a lot of things. It was our job to pick up the baton as the main vendor on the show and get everything post-production-ready and bring the concepts to the big screen.

Key task

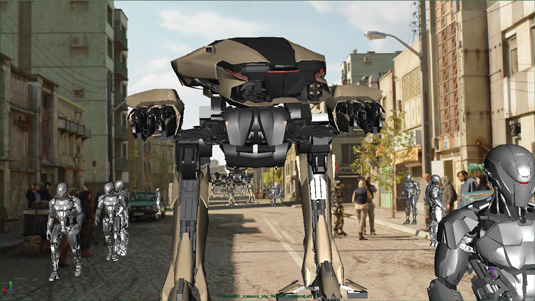

Framestore's key task was to embellish the practical suit from Legacy Effects and bring the iconic main character to life. Meanwhile, the much-loved ED-209 had received a new design, alongside a new EM-208 robot, and both needed to be created fully in CG.

Several locations also required post work, including turning a backlot in Toronto into a sprawling future Tehran.

Our VFX supervisor Rob Duncan strategised the whole project and supervised the shoot, while as CG supervisor I worked with the Framestore pipeline and R&D departments to standardise tools and workflows hot on the heels of Gravity. Simultaneously we were opening up a Montreal facility, which was to take on a large chunk of the work.

01. Putting the man in the machine

Initially all eyes were on RoboCop himself. Live-action photography would dress Joel Kinnaman and his two stunt doubles in two variants of the suit built by Legacy, which needed a nip and tuck to create negative space and a CG mechanical abdomen, alongside various digital doubles for the action shots.

It quickly became apparent that rather than track the whole body to replace the abdomen and limb linkages, it would be quicker and more successful to track just the head and spend our time creating a convincing CG body and/or head, even in close-up shots.

It was a revelation that the uncanny valley is a lot less deep when you only see a chin.

Every shot ended up being its own mix of techniques; we wonder if you could spot which.

02. Creating an awkward number of robots

We knew we would need a lot of robots in some shots, around 160 in the heaviest shot.

For a CG supervisor, that's an awkward number: just big enough to make your pipeline crew question the use of the character pipeline, yet too small and too art-directed to justify the overhead of a crowd pipeline.

Testing the character pipeline to destruction was the chosen way forwards. With a few tweaks to loaders and animation-scene-wrangling tools, all was running smoothly.

Stunt doubles were shot for reference and mocap was considered, but it was replaced by mechanised keyframe cycles and animation to get just the right robotic feel.

Instancing and level of detail were next on the agenda, but these items evaporated after Arnold crunched through 160 high-resolution robots without blinking twice.

It's amazing how quickly CG raytraced rendering has matured. You can throw huge complexity at Arnold and, with the right care in the setup, it will simply chew through it.

03. Turning Toronto into Tehran

I suppose it's understandable to not take the production to Tehran, but watching the first rushes of a few hundred feet of a Toronto backlot and then googling some photos of Tehran streets, you wonder if you should hop on a plane and do some second unit work.

The first question was: where is the line between CG and digital matte painting? Perhaps we could top up the live-action street with CG, then a few 2.5D angles beyond?

Early rushes of swooping cameras flying well past the end of the live-action set meant we needed a fully CG street. With one CG street, you may as well reuse the assets to make a few hundred blocks. The environment team ran with it and it became apparent Arnold is perfect for sunny architectural scenes.

Again it chewed through the geo; I'm sure render times actually got faster from early tests with cubes to final geo (albeit with instancing). Layered up with some mattes and integration work, we had a convincing Tehran that saved everyone a long, expensive trip.

Words: Andy Walker

Andy Walker is a CG supervisor at Framestore. He joined in 2001 as an animator and FX artist. This article originally appeared in 3D World issue 183.
