9 tips for designing voice user interfaces (VUI)

Essential considerations when designing UI for voice rather than screen.


Voice user interfaces (VUIs) present their own challenges for UI designers, but they can improve experiences for users. The artificial intelligence involved in voice technology is now advanced enough to make VUIs the most efficient way to perform many common tasks, and it's getting better all the time.

The technology behind voice services like Amazon Alexa, Google Assistant and Microsoft Cortana can now understand many different phrasings of the same request, variations in syntax and even different accents. And because the heavy processing happens in the cloud, they can draw on vast amounts of data in a flash.

To learn more about UI design in general, sign up for our online UX design course: UX Design Foundations. In the meantime, here are our top tips for designing voice interfaces, some of which come from the tech school CareerFoundry's comprehensive Voice User Interface Design course, created in collaboration with Amazon Alexa.

01. Remember that users don’t speak the way they type

Our first tip when designing voice user interfaces is to remember that people don't speak the way they write (nor do they speak to VUIs the way they would speak to a person). This affects how a VUI should interpret requests, because spoken queries are phrased differently from typed ones.

When we type in a search engine, we often abbreviate, omitting certain parts of speech. For example, if we want to find a good sushi restaurant in Berlin, we might type ‘best sushi Berlin’ or ‘top sushi restaurant Berlin.’ In contrast, in speech with a friend, we might say something like, ‘Do you know any good sushi restaurants in Berlin?’ or ‘What’s your favourite sushi restaurant in Berlin?’

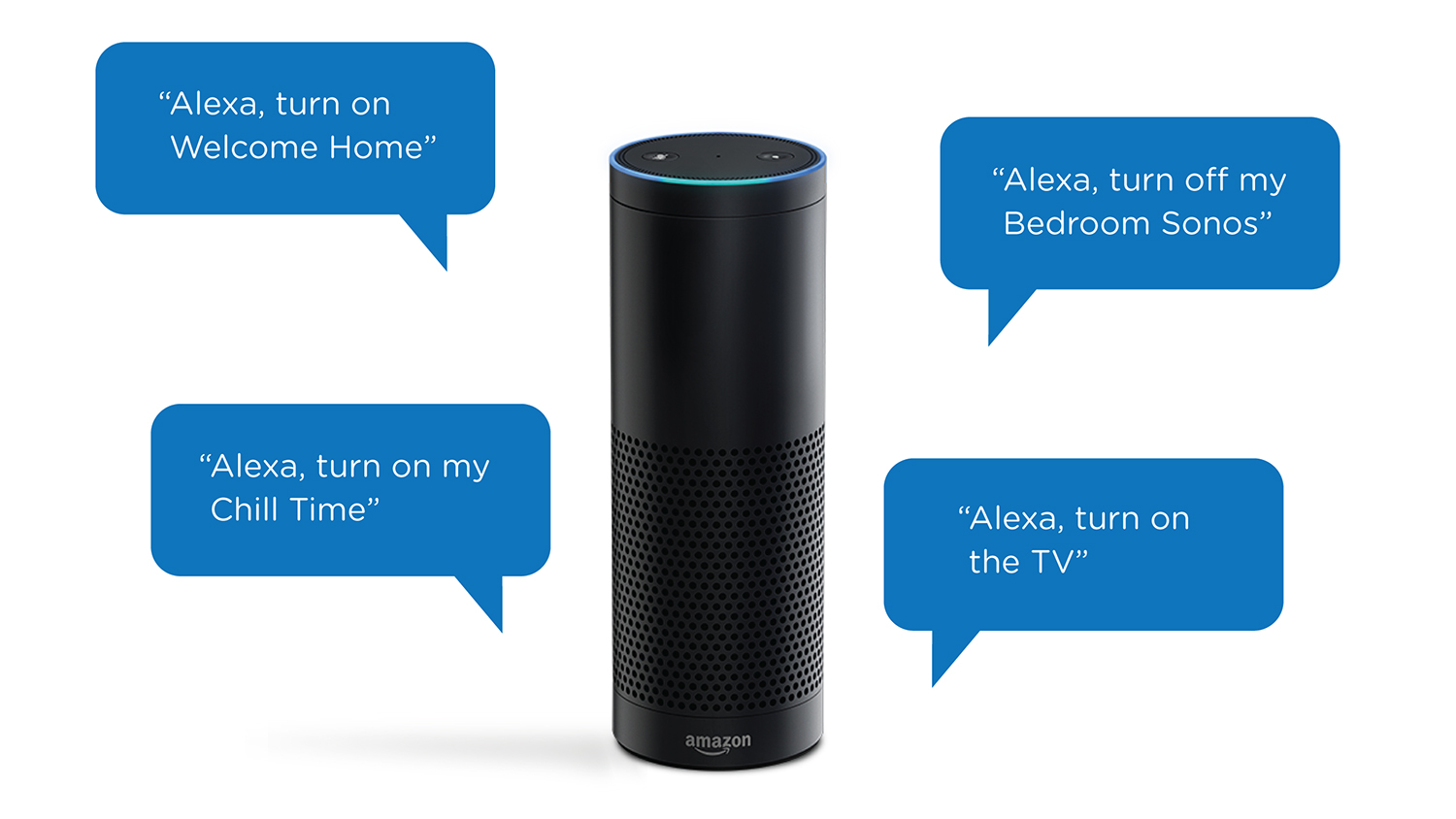

People have already developed a distinctive way of speaking to voice user interfaces. We know we’re talking to an artificially intelligent machine, so we don’t speak to it the way we would to a person, but nor do we use the abbreviated style we type. Instead of saying ‘Alexa, what’s your favourite sushi restaurant?’, we're more likely to say, ‘Alexa, find me a good sushi restaurant in Berlin’ or ‘Alexa, where should I eat sushi?’

The pattern here is that we tend to speak to voice user interfaces by giving orders or asking direct questions. We speak to them with natural speech, but not as we would to a friend. The voice service assumes the persona of a helpful assistant.


Over time, this may change. Who knows, maybe Alexa will respond, ‘Say please’, to make sure we don’t lose sight of our good manners. On the other hand, we might end up in a future where we talk to our partners with the same directness as we do our devices.
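To see why varied phrasing matters in practice, it helps to know that voice platforms such as the Alexa Skills Kit have designers supply several sample utterances for each intent (the task the user wants done). The Python sketch below is a deliberately naive illustration of that idea, not how any real assistant works: the intent names, sample phrases and filler-word list are all hypothetical, and simple word overlap stands in for the trained language models real services use.

```python
import re
from typing import Dict, List, Optional, Set

# Hypothetical intents with sample utterances. Real platforms train a
# language model on lists like these; this sketch only counts shared words.
SAMPLE_UTTERANCES: Dict[str, List[str]] = {
    "FindRestaurant": [
        "find me a good sushi restaurant in berlin",
        "where should i eat sushi",
        "what is the best sushi restaurant near me",
    ],
    "ReorderItem": [
        "reorder coffee",
        "order coffee again",
    ],
}

# Words that carry little meaning for matching (an assumed, tiny list).
FILLER: Set[str] = {"a", "an", "the", "me", "i", "in", "is", "should",
                    "what", "where", "alexa"}


def content_words(text: str) -> Set[str]:
    """Lower-case the text, split it into words and drop filler words."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if w not in FILLER}


def match_intent(utterance: str) -> Optional[str]:
    """Return the intent whose samples share the most content words, if any."""
    spoken = content_words(utterance)
    best_intent, best_score = None, 0
    for intent, samples in SAMPLE_UTTERANCES.items():
        for sample in samples:
            score = len(spoken & content_words(sample))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent


# A typed-style query and two spoken-style requests.
for phrase in ["best sushi Berlin",
               "Alexa, where should I eat sushi?",
               "Alexa, find me a good sushi restaurant in Berlin"]:
    print(phrase, "->", match_intent(phrase))
```

Collecting both typed-style and spoken-style samples is what lets ‘best sushi Berlin’ and ‘Alexa, where should I eat sushi?’ resolve to the same intent in this toy model.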

02. Personalisation is vital

Voice-operated devices are already showing a clear tendency towards personalisation. This is all part of creating a quick, efficient experience. A great way to do this is for a device to remember preferences so you don’t have to input information every time you use it.

For example, if you commute with Deutsche Bahn, a personalised voice assistant can check your train times to work without you having to name the departure and arrival stations each day, because the device remembers your route.

Similarly, you probably don’t want to dictate your address and postcode to Amazon every time you order (‘8TK’? ‘80K’?). However, once you’ve placed an order online, Amazon remembers your delivery address and simply asks you to confirm it when you place repeat orders by voice.
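The remember-then-confirm pattern from the Deutsche Bahn and Amazon examples can be sketched in a few lines of Python. This is a minimal illustration under assumed names: `UserProfile`, `prompt_for` and the prompt wording are hypothetical and are not taken from any real assistant's API.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class UserProfile:
    """Hypothetical per-user memory of details given in earlier sessions."""
    remembered: Dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        """Store a detail (e.g. a commute route) once, for reuse later."""
        self.remembered[key] = value


def prompt_for(profile: UserProfile, key: str, open_question: str) -> str:
    """Choose what the assistant says when it needs a detail.

    A remembered value turns a long spoken answer into a yes/no confirmation;
    otherwise the assistant falls back to asking the open question.
    """
    value = profile.remembered.get(key)
    if value is not None:
        return f"Shall I use {value}?"
    return open_question


profile = UserProfile()
print(prompt_for(profile, "commute", "Which stations are you travelling between?"))
profile.remember("commute", "your usual route to work")
print(prompt_for(profile, "commute", "Which stations are you travelling between?"))
```

The design choice is that remembered data never skips the user entirely: it shortens the exchange to a quick confirmation, which keeps the user in control of repeat orders.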

03. We need new conventions to show system status

When you’re waiting for a webpage to load, not receiving feedback is incredibly frustrating, mainly because you don't know whether it has crashed or is just taking its time. Status feedback is an important part of good user experience: if a site has to search a large database to find what you need, it should keep the user informed of its progress.

When you’re talking to a voice user interface, you’ll also want reassurance that it’s switched on and listening or performing a given action. However, you don’t necessarily want it to talk over you and disrupt your flow. This is where other indicators like lights or sound effects, which don’t disrupt your speech, can serve a valuable purpose.

Have you ever been on the phone and suddenly wondered whether the person on the other end is still there? Thankfully, we often use subtle audible cues to let the other person know we’re still on the line when they’re in the middle of a long anecdote.

Similarly, voice services can find subtle ways of letting us know they’re switched on and at our service. Amazon Alexa devices reassure their owners that they’re listening with flashing lights and non-disruptive sound effects. Maybe in the future, we’ll be so used to them being failsafe that we won’t need any reassurance.
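One way to reason about status feedback is as a small state machine, where each state maps to a non-verbal cue and speech is reserved for unusually long waits. In this Python sketch the states, cue descriptions, allowed transitions and the three-second threshold are all assumptions loosely based on the lights and sounds described above, not any device's actual behaviour.

```python
from enum import Enum
from typing import Dict, Optional, Set


class State(Enum):
    IDLE = "idle"
    LISTENING = "listening"
    PROCESSING = "processing"
    RESPONDING = "responding"


# Hypothetical non-verbal cues; each device defines its own lights and sounds.
CUES: Dict[State, Optional[str]] = {
    State.IDLE: None,  # no cue, so the device stays out of the way
    State.LISTENING: "light on + short chime",
    State.PROCESSING: "pulsing light",
    State.RESPONDING: "steady light while speaking",
}

# Which state changes are allowed (an assumed, simplified model).
ALLOWED: Dict[State, Set[State]] = {
    State.IDLE: {State.LISTENING},
    State.LISTENING: {State.PROCESSING, State.IDLE},
    State.PROCESSING: {State.RESPONDING, State.IDLE},
    State.RESPONDING: {State.IDLE, State.LISTENING},
}

SLOW_AFTER_SECONDS = 3.0  # assumed threshold before a spoken update


def status_cue(state: State, seconds_in_state: float) -> Optional[str]:
    """Return the cue to show; speak only when processing runs long."""
    if state is State.PROCESSING and seconds_in_state >= SLOW_AFTER_SECONDS:
        return "say: 'Still working on that.'"
    return CUES[state]


def move(current: State, new: State) -> State:
    """Change state, rejecting transitions the model does not allow."""
    if new not in ALLOWED[current]:
        raise ValueError(f"cannot go from {current.value} to {new.value}")
    return new


state = move(State.IDLE, State.LISTENING)
print(status_cue(state, 0.0))
```

Keeping cues non-verbal by default means the device can signal that it is listening without talking over the user.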

04. Adapt for flat navigation

When designing the UX for a website, the site navigation is crucial. You consider which actions users perform most often and which options should be available on the homepage. How many click-throughs does it take a user to perform a simple task?

When users interact with the web using voice, they’re likely to bypass many intermediary stages and go straight to the information they need. For example, a user who wants to order from Amazon will not say, 'Alexa, go to Amazon.com, then go to my account, then view my history, then find coffee, then place the order again.' They’ll simply go straight to the final step: ‘Alexa, re-order coffee.’ They don't want to have to go through stages to get there.
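In code terms, flat navigation means mapping a single utterance straight to the final action. The hypothetical Python sketch below resolves ‘re-order coffee’ by searching an assumed order history for the most recent match; the data structure and function name are illustrative only, and a real service would confirm before placing the order.

```python
from typing import Dict, List, Optional

# Hypothetical order history, oldest first.
ORDER_HISTORY: List[Dict[str, object]] = [
    {"item": "coffee beans", "qty": 1},
    {"item": "oat milk", "qty": 2},
    {"item": "coffee beans", "qty": 2},
]


def reorder(spoken_item: str,
            history: List[Dict[str, object]]) -> Optional[Dict[str, object]]:
    """Resolve 're-order <item>' straight to the most recent matching order.

    The account, history and search steps a screen user would click through
    collapse into one lookup; the caller should still confirm before buying.
    """
    query = spoken_item.lower()
    for order in reversed(history):  # newest first
        if query in str(order["item"]).lower():
            return dict(order)  # a copy, so the history is not modified
    return None


print(reorder("coffee", ORDER_HISTORY))
```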

05. Talking should come naturally

Users don’t want to memorise hundreds of commands to perform specific tasks. The whole point of voice user interfaces is to leverage our most natural communication style and apply this to computers, not to create something new that takes time to learn.

Graphical interfaces have a few conventions we have come to understand. For example, if you can’t find what you’re looking for, it’s probably hiding in the hamburger menu. Voice may develop a few established conventions too, but in general the aim is to make voice interactions so intuitive that anyone could pick up a device and start using it.

This will be an exciting task for designers and programmers: understanding the natural cues in conversations and teaching computers to understand us and seamlessly provide an answer or perform a task.

The database of utterances that machines can understand is growing daily, and it’s very possible that we’ll reach a point where machines are better at deciphering our drunken slurs than our friends are.

06. Accessibility has implications for voice user interfaces

As any UI designer will tell you, one of the most important things to consider in UI is digital accessibility. Fonts, colours and graphics aren't just questions of aesthetics; they're about making sure everyone can access the content. For example, is low contrast making your content illegible for people with visual impairments?

Considerations around accessibility are important in voice interactions, but they take a different form. Voice interactions rely on two things working successfully: the device understanding the person talking, and the person understanding the device.

This means designers should plan for speech impediments (not just regional accents), hearing impairments, and any other factors that could influence communication, such as cognitive disorders. See our designer's guide to digital accessibility for more on this topic.
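Planning for speech and hearing differences often comes down to what happens when the device doesn't understand. The Python sketch below shows one possible escalation strategy, assuming the speech recogniser returns a confidence score between 0 and 1: rather than acting on a low-confidence guess, it asks again, then offers an example phrasing, then points to a non-voice alternative. The threshold, prompt wording and function name are hypothetical and would need tuning through testing with real users.

```python
from typing import List

# Hypothetical wording and limits; tune them with real user testing.
CONFIDENCE_THRESHOLD = 0.6
REPROMPTS: List[str] = [
    "Sorry, I didn't catch that. Could you say it again?",
    "You can say something like 'set a timer for ten minutes'.",
]
FALLBACK = "I'm still having trouble. I've sent some options to your phone instead."


def next_prompt(transcript: str, confidence: float, failed_attempts: int) -> str:
    """Decide how to reply after speech recognition.

    failed_attempts counts earlier misunderstandings in this exchange.
    Low-confidence results trigger a reprompt instead of a guess, and the
    final step offers a non-voice route so users aren't stuck in a loop.
    """
    if confidence >= CONFIDENCE_THRESHOLD:
        return f"OK, {transcript}."
    if failed_attempts < len(REPROMPTS):
        return REPROMPTS[failed_attempts]
    return FALLBACK


print(next_prompt("set a timer", 0.9, 0))
print(next_prompt("", 0.2, 2))
```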

07. Avoid bias

We’re used to getting hundreds of results when we search on Google; however, when we’re interacting via voice, we often want the device to intelligently pick the best answer for us rather than reel off a long list of sometimes contradictory search results.

This could get complicated quickly. For example, imagine asking your voice service to tell you what the best headphones on the market are. The information is subjective and could easily lend itself to commercial bias.

Search engines will also play a huge role in determining which content voice services pick up, and this will no doubt cause much debate and controversy. It's impossible to curate results without making some judgement calls, but we should try to avoid bias in voice user interfaces wherever we can.

08. Be aware of issues around privacy and fraud

From your children talking to Amazon Alexa and re-ordering tons of chocolate, to people overhearing your confidential information – there are lots of considerations around privacy and security when it comes to voice user interfaces.

An obvious solution is to password-lock devices, but a spoken password is easy for someone to overhear. In the future, shared devices will likely recognise users by their voice and personalise their experience accordingly. In the meantime, we have to think carefully and accept that there are some use cases where voice just isn’t appropriate.
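The two safeguards discussed here, recognising who is speaking and keeping some requests off the voice channel entirely, can be expressed as a simple policy check. In this Python sketch, the speaker IDs, intent names and refusal wording are hypothetical, and `speaker_id` stands in for the output of a voice-recognition step that is assumed rather than implemented.

```python
from typing import Optional, Set

# Hypothetical policy: who may buy by voice, and which requests should
# never be answered out loud on a shared device.
RECOGNISED_PURCHASERS: Set[str] = {"parent_a", "parent_b"}
SCREEN_ONLY_INTENTS: Set[str] = {"ReadBankBalance", "ReadMedicalResults"}


def gate_request(intent: str, speaker_id: Optional[str]) -> str:
    """Return 'allow' or a spoken refusal for a request on a shared device.

    speaker_id is whatever an assumed voice-recognition step returned,
    or None if the speaker was not recognised.
    """
    if intent in SCREEN_ONLY_INTENTS:
        # Voice is the wrong channel here: the answer could be overheard.
        return "I've sent that to your phone so it stays private."
    if intent == "PlaceOrder" and speaker_id not in RECOGNISED_PURCHASERS:
        # Stops, say, a child's voice from placing an order.
        return "Sorry, only recognised account holders can place orders."
    return "allow"


print(gate_request("PlaceOrder", "child"))
```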

09. Embrace voice interactions

As voice interaction evolves and machines become smarter, there will no doubt be more considerations. Voice is potentially the next huge paradigm shift in technology, and with machine learning advancing at its current rate, it’s quite possible that over the next few years machines will become better than humans at deciphering human speech.

If you’re working in technology, you’d be silly to ignore voice user interfaces. There are lots of exciting initiatives out there, and Amazon, Cisco, IBM and Slack are just some of the big companies investing heavily in voice startups. If you’re keen to learn more about designing for voice, check out CareerFoundry’s 8-week Voice User Interface Design with Amazon Alexa online course.

