Secure your toolset and stop hack attacks

With tools like Rails, PHP, YAML, GitHub and PostgreSQL suffering security failures, Tim Perry explains how to protect yourself.

Developing secure software is about more than writing secure code. It's relatively easy to keep unsafe input out of your SQL, hash and salt your passwords, and limit users' permissions. It's much harder to ensure every part of your infrastructure and tooling has done the same.

Your code is only a small part of the 'attack surface' (the accessible parts of the system that an attacker might exploit) of your application, and it's the part of the system an attacker will have least knowledge of.

Meanwhile, huge databases of exploits for common components already exist, and attackers can use them to quickly sweep swathes of the internet for unlucky vulnerable sites from which to steal valuable secrets. Let's take a look at some of the security failures that have hit popular tools recently, and see how you can protect yourself from such flaws in the future.

Rails

We'll start with the biggest name: Ruby on Rails. Rails is a web framework, running atop Ruby, which makes it very easy to quickly build web applications.

In February 2013, Rails 3.2.12 was released, fixing one of a series of critical security vulnerabilities. This vulnerability was in the commonly-used serialize attribute. This tells Active Record (the Rails ORM) to serialise or deserialise a property to or from a YAML string when persisting to the database. In a system that allows users to add blog posts, marking each post with a list of tags, a vulnerable class might look like this:

    class Post < ActiveRecord::Base
      serialize :tags
    end

This simple class represents a blog post with a tags property that should be serialised in YAML format when the post is saved.

Unfortunately for this example, if tags is already a string of YAML, then it's not further serialised, and is saved raw to the database. When it's loaded, it then appears to be previously safely-serialised YAML, and Rails happily tries to deserialise it. Thus, if a web application accepts user input anywhere to a serialisable field, users can provide arbitrary YAML for deserialisation.

This bug sounds innocuous, but is actually quite nasty. YAML is a powerful data format, and can request that Ruby classes be instantiated and configured as part of parsing. Simple, well-publicised YAML documents exist which run arbitrary code when parsed with the standard Ruby libraries, and using one in this case makes it surprisingly easy for an attacker to take over your server.
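
The same class of bug exists outside Ruby. As a rough analogy only (Python's pickle module stands in for Ruby's YAML loader here, so this is not the Rails code path), the sketch below shows a parser calling a function that is named inside the data it is parsing. The Payload class is hypothetical, and the harmless expression "6 * 7" stands in for whatever an attacker would really want to run.

```python
import pickle


class Payload:
    """Hypothetical attacker-side helper, used only to build the bytes."""

    def __reduce__(self):
        # pickle records "call eval with this string" in the serialised data.
        return (eval, ("6 * 7",))


# What the attacker submits: plain bytes. Loading them does not need Payload.
untrusted_bytes = pickle.dumps(Payload())

# Merely parsing the bytes runs the embedded expression.
result = pickle.loads(untrusted_bytes)
print(result)  # prints 42
```

The point is that the expression was evaluated as a side effect of loading data, which is why formats with this much power should never be fed user input.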

This wasn't the first time a YAML bug had hit Rails. Two other similarly catastrophic Rails issues also exploded just a few weeks prior to this, where a default Rails install could be made to parse user-provided YAML and thereby give total control of the server to attackers.

This painful series of issues required repeated, urgent upgrades to almost every Rails server deployment on the net, leaving Rails developers not best pleased.

Look for anything suspicious

Bugs like this are scary: reasonable code made vulnerable by problems in the tools used. The eventual fix for the Rails 3.2.12 issue was to reject any attempts to ever set serialisable properties to raw YAML: there's generally no good reason to intentionally serialise YAML twice.

This highlights a critical point for security in general: ensure you're sanitising user input as much as possible, and reject anything even slightly dubious immediately.

It's also worth noting that your data formats may be more than just simple structures for data, and naïve uses of the wrong ones make for easy avenues of attack. This holds true for YAML, but also for popular formats like XML (for example, consider the Billion Laughs attack). For the easy, safe option, opt for JSON.
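
As a minimal sketch of both points (strict validation and a plain data format), here is how the tags field from the earlier example might be accepted as JSON in Python. The function name parse_tags and the two limits are assumptions chosen for illustration, not part of Rails or any library.

```python
import json

MAX_TAGS = 10        # assumed limit, chosen for illustration
MAX_TAG_LENGTH = 30  # assumed limit, chosen for illustration


def parse_tags(raw: str) -> list:
    """Parse user-supplied tags, rejecting anything but a short list of short strings."""
    try:
        data = json.loads(raw)  # JSON yields only plain data types, never objects
    except json.JSONDecodeError as exc:
        raise ValueError("tags must be valid JSON") from exc
    if not isinstance(data, list) or len(data) > MAX_TAGS:
        raise ValueError("tags must be a list of at most %d items" % MAX_TAGS)
    for tag in data:
        if not isinstance(tag, str) or not 0 < len(tag) <= MAX_TAG_LENGTH:
            raise ValueError("each tag must be a short, non-empty string")
    return data


print(parse_tags('["ruby", "rails"]'))
```

Anything that is not exactly the expected shape, including a YAML document, is refused before it gets anywhere near the database.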

PHP

PHP is the most popular programming language in the world for websites, powering huge players like Facebook, Wikipedia and WordPress. However, it's experienced more than a few security hiccups along the way.

For version 5.3.7, a tiny change was made to the crypt() function – the recommended function (at the time) for salting and hashing passwords. This introduced a bug: hashing a string using crypt() with an MD5 salt always returned the same value (the raw salt), regardless of the string to be hashed.

This is extraordinarily dangerous. Login code using this to authenticate a user (ignoring salt handling) will typically look something like this:

    $loginOk = (crypt($passwordInput, $salt) == $previouslyCryptedPassword);

With this bug in place, this test is always true, regardless of the password provided. This is because both the previously hashed password and the password hashed by the crypt() call here will just be the $salt.

This breaks all authentication for affected applications. If on any site using PHP 5.3.7 a user is created, logins to that account using any password are all successful. Whoops.

The underlying issue here isn't too interesting: it's a tiny clean-up change to a different string concatenation method, done just wrong enough. More interestingly, this bug was actually reported before the release went out – and on top of that PHP has unit tests for this, which would have detected the bug immediately. Sadly, nobody ran them before publishing the release.

Build in safe development practices

There are many morals to this story, but it's particularly striking to consider how important project development practices were in this security issue.

If the unit tests had been run automatically and attention given to failures, or if bugs reported in release candidates had been properly considered before release, then the bug simply wouldn’t have been included in the update. Following best practices like this will help us avoid more of these problems, both for library maintainers and for library users.

Having your own set of integration tests, testing security-critical processes including the unhappy path, enables you to quickly spot regressions like this before you upgrade in your live environment. It's also worth considering the security practices of your potential tools when evaluating them.
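
A minimal sketch of such an unhappy-path check, written in Python for illustration. The PBKDF2 hasher is an assumed stand-in for whatever your platform provides, and broken_hash deliberately mimics the kind of failure described above (the password is ignored and only the salt comes back).

```python
import hashlib
import hmac


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a hash with PBKDF2 (an assumed choice for this sketch)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def broken_hash(password: str, salt: bytes) -> bytes:
    """Simulates the regression: the password is ignored entirely."""
    return salt


def accepts_right_password(hasher) -> bool:
    """Happy path: the same password must match its stored hash."""
    salt = b"fixed-salt-for-test"
    return hmac.compare_digest(hasher("correct horse", salt),
                               hasher("correct horse", salt))


def rejects_wrong_password(hasher) -> bool:
    """Unhappy path: a different password must NOT match the stored hash."""
    salt = b"fixed-salt-for-test"
    return not hmac.compare_digest(hasher("correct horse", salt),
                                   hasher("wrong password", salt))


for hasher in (hash_password, broken_hash):
    print(hasher.__name__,
          accepts_right_password(hasher),
          rejects_wrong_password(hasher))
```

Note that the broken hasher sails through the happy-path check. Only the test that logs in with the wrong password exposes it, which is why the unhappy path matters.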

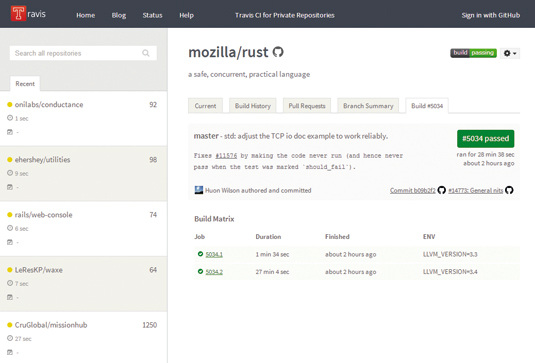

Publicly visible (and passing) build statuses are a good sign, and consideration of testing and design are great too. It's also particularly worth looking for projects with well-publicised security reporting mechanisms, preferably with published encryption keys, to allow exploit reporters to encrypt the reported details for the maintainer's eyes only.

PostgreSQL

PostgreSQL is an open source database that’s existed since the 80s, and is now probably the most powerful SQL database available in the open source community. Like PHP, though, it's had troubles.

In its most spectacular recent vulnerability, it was discovered that if you connect to a Postgres server and request a database which has a name that begins with a dash, then the name is interpreted instead as a command-line argument on the server, even if you fail to authenticate.

This is very exploitable, using arguments like -r filename, which logs output to a file on the server. To exploit this with the standard psql client, you connect with:

    psql --host=example.com --dbname="-r /var/lib/postgresql/data/db"

With that, example.com's log output is appended to the contents of its database, corrupting it and totally breaking the site.

In fact it's possible to control the logged output too, and by directing the logging to an executable file likely to be run later, and appending code of your choice, you can run arbitrary code on the server (eventually, when the executable is later run).
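
The same mistake, an untrusted value being read as a command-line option, is easy to make in your own code. The Python sketch below is a defensive check under stated assumptions: backup-tool and its --database flag are hypothetical names invented for illustration, and this is not how PostgreSQL itself was patched.

```python
from typing import List


def build_backup_command(dbname: str) -> List[str]:
    """Build the argument list for a hypothetical `backup-tool` program."""
    # Refuse empty names and anything that could be mistaken for an option.
    if not dbname or dbname.startswith("-"):
        raise ValueError("refusing suspicious database name: %r" % dbname)
    # A list of arguments (rather than one shell string) also avoids
    # the value being interpreted by a shell.
    return ["backup-tool", "--database", dbname]


print(build_backup_command("blog_production"))
```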

Nonetheless, this shouldn't have been an immediate problem, as infrastructure like this shouldn’t be accessible to external entities in the first place. Patching quickly would have been important to protect against attackers that had gained access to internal networks – but that should be a rare occurrence in itself.

It was, however, a serious challenge for many cloud services. Infrastructure and Platform as a Service (IaaS/PaaS) providers, which offer hosted components to build applications upon, often make these services accessible to the wider internet. This exposes them to attacks like this in any individual component. At the time, many services – including Heroku and EnterpriseDB – were attacked, and had to rush out fixes to avoid disaster.

Reduce external access

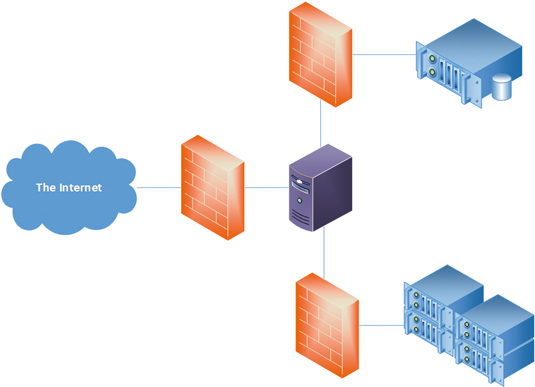

This is a great example of how increasing your external attack surface can threaten the security of your entire system. There's rarely any need to expose your database instances, or other internal components.

Ensuring they're insulated from the world provides substantial protection even when a vulnerability is found. It's worth checking what components you currently allow external access to, and whether these can be reduced.

To control this, use your network and machine firewalls, limit which network interfaces services listen on, and consider totally separating internal-only networks from externally connected ones.
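
As a rough sketch of that kind of audit, the Python below classifies the address each service listens on and flags anything reachable from outside that isn't meant to be. The service inventory and the intended_public set are hypothetical; in practice you would gather the listen addresses from your own configuration.

```python
import ipaddress


def exposure(bind_address: str) -> str:
    """Classify how widely a listen address exposes a service."""
    ip = ipaddress.ip_address(bind_address)
    if ip.is_loopback:
        return "local-only"
    if ip.is_unspecified:  # 0.0.0.0 or ::, meaning every interface
        return "all-interfaces"
    if ip.is_private:
        return "internal-network"
    return "public"


def unexpectedly_exposed(services: dict, intended_public: set) -> list:
    """Return services reachable from outside that were not meant to be."""
    return sorted(
        name for name, address in services.items()
        if exposure(address) in ("all-interfaces", "public")
        and name not in intended_public
    )


# Hypothetical inventory: service name -> address it listens on.
services = {"web": "0.0.0.0", "postgres": "0.0.0.0", "cache": "127.0.0.1"}
print(unexpectedly_exposed(services, intended_public={"web"}))  # prints ['postgres']
```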

GitHub

Lastly, we look to GitHub, the popular Git hosting site. It's occasionally had some security holes of its own, but more interesting was the fallout of the substantial search improvements it rolled out in January 2013.

These improvements enabled visitors to search GitHub code in great detail. Curious users quickly discovered that many repositories included private information, including users' SSH keys (giving write access to their GitHub repositories), various usernames and passwords, and a selection of API keys for services – both free and paid-for.

Even now, searches for Amazon Web Services keys on GitHub turn up nearly 10,000 results. Despite many warnings from Amazon itself, it's easy to find reports of AWS users with crippling bills after accidentally making their keys public like this.

This is a powerful example, but the problem doesn't only affect people using public repositories. Even storing your secrets in a private repository has dangers. Additions to your Git repository stay in your history permanently, and there's enough risk of even a brief exposure of your Git repo (through the next vulnerability in GitHub, your CI server, or anybody's development machine) that it's not worth the massive damage the exposure of your secure data would cause.

Keep secret data isolated

Secret data for your deployed site (API and encryption keys, usernames, passwords) should be stored somewhere separate from your codebase, and securely isolated from everything else possible too. This allows you to limit access to your secrets to the absolute minimum, minimising the number of moving parts you have to trust not to accidentally leak your private data.

Popular solutions to this include storing these secrets as environment variables in your deployment environments, keeping the secrets in Git-ignored local config files, or in encrypted config files that can be committed, while keeping the encryption key safe elsewhere.
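
A minimal sketch of the first option in Python: the application reads its secrets from the environment at start-up and fails loudly if one is missing, so nothing secret ever needs to appear in the codebase. The helper name get_secret and the variable name PAYMENT_API_KEY are assumptions made up for this example.

```python
import os


def get_secret(name: str, environ=None) -> str:
    """Fetch a required secret from the environment, failing loudly if absent."""
    env = os.environ if environ is None else environ
    value = env.get(name)
    if not value:
        # Fail at start-up rather than running with a missing or empty secret.
        raise RuntimeError("missing required secret: %s" % name)
    return value


# In production this would read os.environ; a plain dict is passed here
# so that the example runs anywhere.
example_env = {"PAYMENT_API_KEY": "not-a-real-key"}
api_key = get_secret("PAYMENT_API_KEY", example_env)
print(len(api_key))
```

Printing only the length is deliberate: it's a good habit to keep secret values out of logs as well as out of source control.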

For small projects this can be easily managed with manual processes, and a variety of deployment tools exist that help keep things nice and straightforward as you expand. Take a look at ZooKeeper, Chef Vault, Git-encrypt and dotenv.

Conclusion

Hopefully this has provided some insight into what can go wrong in your tools, and the steps that you can take to help minimise the risk. To reiterate the core points I've covered:

- Don't trust user input, and be extremely careful about everything it's allowed to touch. All sorts of data formats and components have unpredictable complexities that can make your site unexpectedly vulnerable to attack.

- Follow good development practices for security processes, and look for tools that follow these processes too. Thorough testing is particularly worthwhile, and makes it easier to remain confident of your system's basic security as you change it.

- Limit the exposure of your infrastructure and tools to the outside world wherever possible, to minimise your attack surface and dramatically increase the security of your application.

- Trust your own tools' security as little as possible: isolate everything sensitive from the rest of your internal infrastructure to limit the damage when something is eventually exploited.

Finally, in every case I've covered, announcements were made as the issues came to light, and the users that updated quickly were generally safe. At a minimum, subscribe to security notifications for your tools, and ensure you're prepared to quickly upgrade if you have to. Good luck!

Words by: Tim Perry

Tim Perry is an expert in JavaScript, security, Polyglot Persistence and Automated Testing. This article first appeared in net magazine issue 257.
