Another day, another leap in generative AI development from big tech. Meta Reality Labs has announced a research project that takes a step towards making text-to-world generation a reality.

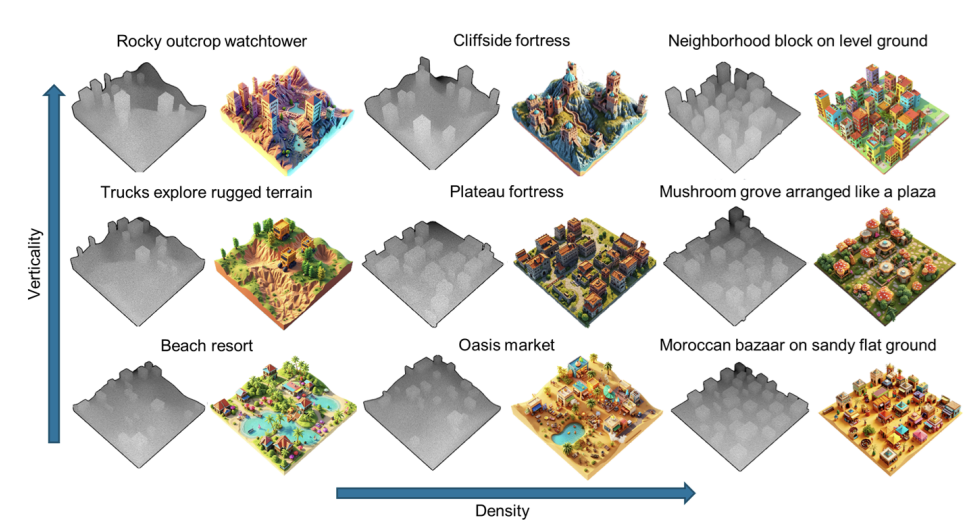

WorldGen is designed to generate stylistically coherent, explorable 3D worlds from just one text prompt. The idea is that these could be used for VR development in engines like Unreal Engine 5 or Unity (see our guide to game development software), although the research paper reveals that there's a long way to go.

Research Update: WorldGen — Text to Immersive 3D Worlds https://t.co/PfeNsWjKtp pic.twitter.com/Ua5DlRC3v2 (November 21, 2025)

According to the WorldGen research paper, Meta's AI model can create scenes spanning up to 50 × 50 metres that users can walk around and explore. They can also be exported for use in real-time engines.

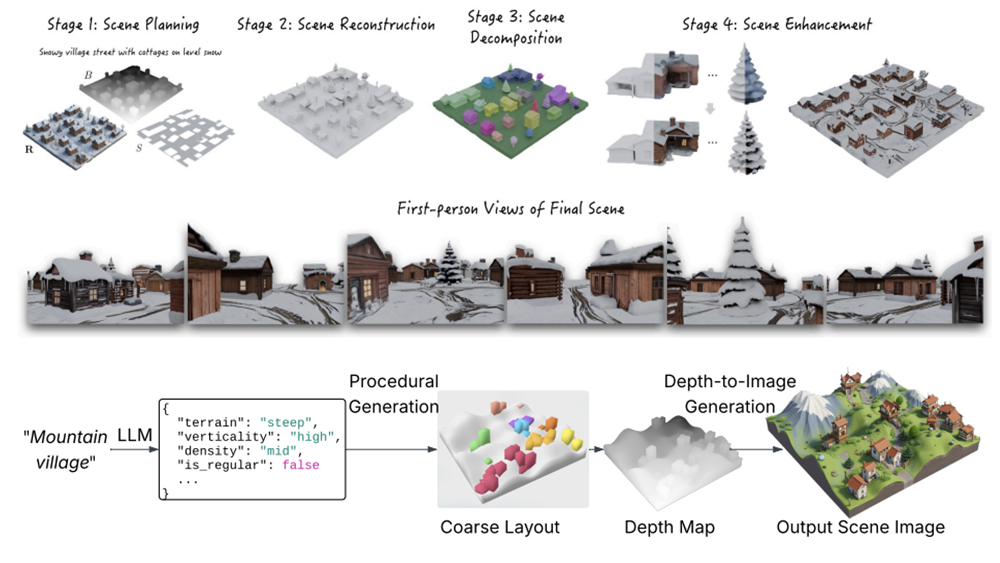

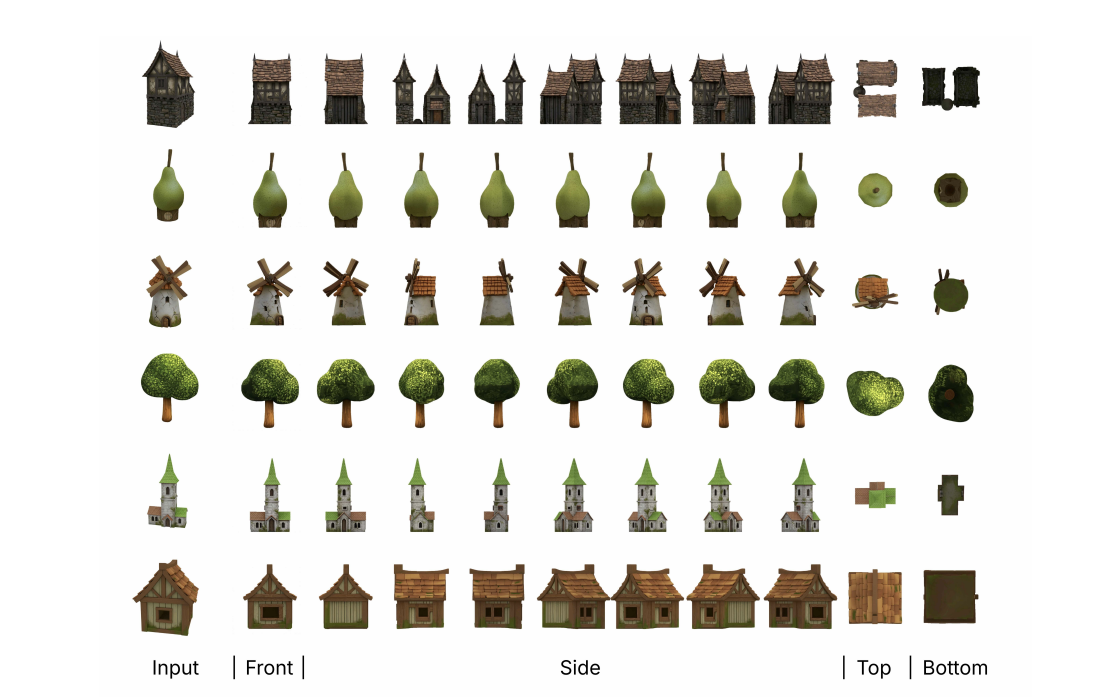

The pipeline combines procedural layout generation, image-based planning, diffusion-driven 3D reconstruction, navmesh extraction, scene decomposition, mesh refinement, and texturing. WorldGen can also break scenes down into objects via an accelerated AutoPartGen process, which is intended to make the environments generated more editable and reusable.

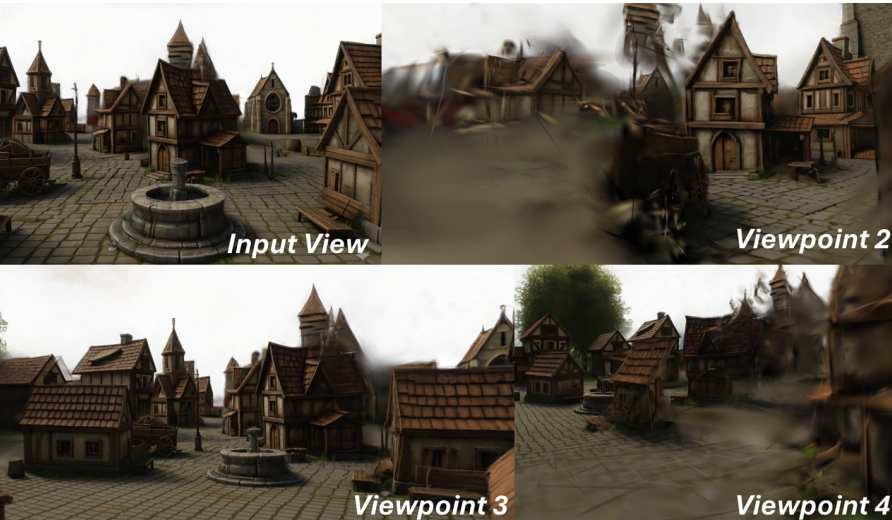

But WorldGen currently has significant limitations. It generates each scene from a single reference view, which limits the scale of the scenes that can be produced.

It doesn't natively support larger open worlds spanning kilometres rather than metres. This would require generating and stitching multiple local regions, which could introduce non-smooth transitions or visual artifacts at the boundaries, the research paper notes.
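To put that gap in perspective, here is a rough back-of-the-envelope sketch of how many 50 × 50 metre regions a square open world would need, and how many shared boundaries would have to be blended. It assumes square, non-overlapping tiles at the maximum reported scene size; the paper does not describe a tiling scheme, and the function name `tiles_needed` is purely illustrative.

```python
import math


def tiles_needed(world_side_m: float, tile_side_m: float = 50.0) -> tuple[int, int]:
    """Return (tile count, internal seam count) for a square world.

    Assumes square, non-overlapping tiles laid out on a regular grid.
    This layout is a hypothetical illustration, not WorldGen's method.
    """
    per_side = math.ceil(world_side_m / tile_side_m)
    tiles = per_side * per_side
    # Each row has (per_side - 1) seams between neighbours, and so does each column.
    seams = 2 * per_side * (per_side - 1)
    return tiles, seams


# A 1 km x 1 km world built from 50 m tiles.
tiles, seams = tiles_needed(1000.0)
print(tiles, seams)  # 400 760
```

Under these assumptions, a single square kilometre would take 400 separate generations and 760 tile-to-tile boundaries, each a place where the transitions the paper warns about could appear.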

The platform can't model multi-layered environments, such as multi-floor dungeons or interior-exterior transitions, either. And it can't reuse textures or geometry, which limits its efficiency and hence its usability for development.

Some devs have noted that they need textures to be shareable between objects. Meta says it's looking at how it could add reusable textures in future versions to make the platform more scalable.

Other developers have suggested that, judging by the examples in the paper, the generations tend to be repetitive, with elements always arranged in a grid pattern.

Joe is a regular freelance journalist and editor at Creative Bloq. He writes news, features and buying guides and keeps track of the best equipment and software for creatives, from video editing programs to monitors and accessories. A veteran news writer and photographer, he now works as a project manager at the London and Buenos Aires-based design, production and branding agency Hermana Creatives. There he manages a team of designers, photographers and video editors who specialise in producing visual content and design assets for the hospitality sector. He also dances Argentine tango.
