The best laptop for animation comes with a powerful CPU, a high-performance graphics card, and plenty of RAM to keep demanding software like Blender, Maya, and After Effects running smoothly. Every model we’ve selected for this list has been put through rigorous industry benchmark tests, so you can be sure they deliver where it counts.

Right now, our top pick is the ASUS ProArt P16, which pairs exceptional processing muscle with a gorgeous OLED display. There are plenty more choices, though, so we’ve included a number of other strong options for specific needs.

Meanwhile, if 3D animation is your main focus, check out our guide to the best laptops for 3D modelling too.

The top 3 laptops for animation

The ASUS ProArt P16 is our top choice today for animators. This Windows laptop is a fast worker, has top-tier graphical performance, and boasts the famous ASUS Dial, which can help when scrubbing through animation timelines or fine-tuning brush sizes.

If you're looking to keep your spend under a grand, the HP Pavilion 16 offers a generous 16-inch screen, strong battery life and decent enough graphical performance for most animation purposes. It ain't fancy, but for the money it'll get the job done.

With the NVIDIA RTX 5090 GPU and Intel Core Ultra 9 275HX processor, the Alienware 16 Area-51 excels at GPU-accelerated rendering, real-time viewport playback, and complex rigging operations in Maya, Blender, Cinema 4D, and Houdini.

The best laptop for animation in full

The best laptop for animation overall

30-second review: The ASUS ProArt P16 (2025) is our top choice today for animators seeking a powerful and versatile laptop. It's even better than the 2024 version, which until very recently (i.e. until before I wrote this sentence) was our top choice for animators seeking a powerful and – well, you get the idea. AI-powered smarts pair with a slick, capable screen and dazzling graphical performance – really, what more could you ask for?

Price: At £2,799.99 in the UK and $2,899.99 in the US, it certainly sits in the upper echelons, but if you're facing a demanding animation workflow or dabbling in 3D, the ASUS ProArt P16 more than justifies itself.

Design: Weighing almost 2kg, the ProArt P16 isn't the ideal choice for on-the-go agility – but that's not really the selling point. It boasts a lovely 16-inch 3K OLED touchscreen display with 100% DCI-P3 colour gamut coverage, ensuring the vibrant and accurate colours that are crucial for animation work. While the resolution has been downgraded from the 2024 version's 4K, the refresh rate has been upped from 60Hz to 120Hz, meaning your animations will look and feel much smoother.

Our reviewer enjoyed using the ProArt P16 (2025)'s ASUS Dial: a physical control that allows for precise adjustments in creative applications. It's particularly good for animation, as it allows you to easily scrub through animation timelines or fine-tune brush sizes. One small annoyance though – the power port has been redesigned to be an ASUS proprietary port, meaning you can't buy generic chargers. Booo.

Performance: This Windows laptop is a fast worker, and its graphical performance is top of the line right now. The AMD Ryzen AI 9 HX 370 CPU pairs with an AMD XDNA NPU with up to 50 TOPS AI performance – so if you've integrated AI tools into your animation workflow, the ProArt P16 will be able to keep up. The graphical performance is frankly ludicrous thanks to the new NVIDIA GeForce RTX 5070, replacing the 4070 on the previous model to deliver what we measured as a 25-28% improvement.

For 2D animation, all this is ample power – more than you really need. With 64GB of RAM and a 2TB SSD (which can be expanded if needed), you'll be well equipped to venture into the world of 3D animation if you want to, or just crunch through 2D animation tasks exceptionally quickly.

Battery: This was never exactly going to be the ProArt P16's forte. With that in mind, our test result of six hours' continuous video playback on a battery charge is pretty good, and considering that this laptop isn't really designed in any way for portability, it's unlikely to be a deal-breaker for many people.

Read more: Asus ProArt P16 (2025) review

"After testing this laptop for a month, it's my new favourite creative powerhouse offering everything an ambitious creative professional could hope for. The touchscreen makes it ideal for artists who want to have hands-on control of their creation, and the colour and brightness is a video editor's dream"

The best budget laptop for animation

30-second review: If you're looking to stay under a grand, the HP Pavilion 16 will tuck in nicely under your budget. It's not the most exciting laptop, but with a large display and Intel Arc graphics, it's viable for budding animators who don't need the most high-end performer. The 32GB RAM keeps things moving at a healthy clip, and we were also impressed by the generous battery life.

Price: Here is where the HP Pavilion 16 really shines – its value for money. You can generally pick one up for as little as $650 or £699, and in the USA we've seen it go as low as $459 with limited-time discounts. For a laptop capable of handling animation work, that's excellent value, and it cements the HP Pavilion 16 as our budget pick.

Design: While it's large, the Pavilion 16 is pleasingly lightweight – coming in under 2kg, it's a viable laptop to take on the go, provided you have a bag big enough. The 1920x1200 display is hardly exceptional in quality, but its 16-inch size gives you generous room to see what you're doing, as well as a comfortable amount of real estate for touch and swipe gestures.

Performance: Lots to recommend here. You get plenty of ports, including two USB-C and two USB-A, as well as an HDMI port and an audio jack. And the 32GB of RAM means the laptop is more than capable of handling the demands of most animation software. It's in graphical performance that you may find an issue; results in our benchmark tests were decent but not exceptional. That's about what you'd expect from a machine with integrated graphics, even if it is Intel Arc.

Battery: No complaints. The battery life of the HP Pavilion 16 held up well in our testing, even factoring in the power drain of that large display, and you shouldn't have trouble coaxing a full day's work out of it without needing a charge.

Learn more in our full HP Pavilion 16 review.

"The HP Pavilion 16 is a lightweight, budget-friendly all-around work laptop. The strong battery life paired with great ergonomics makes it comfortable to use for extensive periods, and features like AI functionality and touchscreen capabilities make it an affordable choice for animation work."

The most powerful laptop for animation

Specifications

Reasons to buy

Reasons to avoid

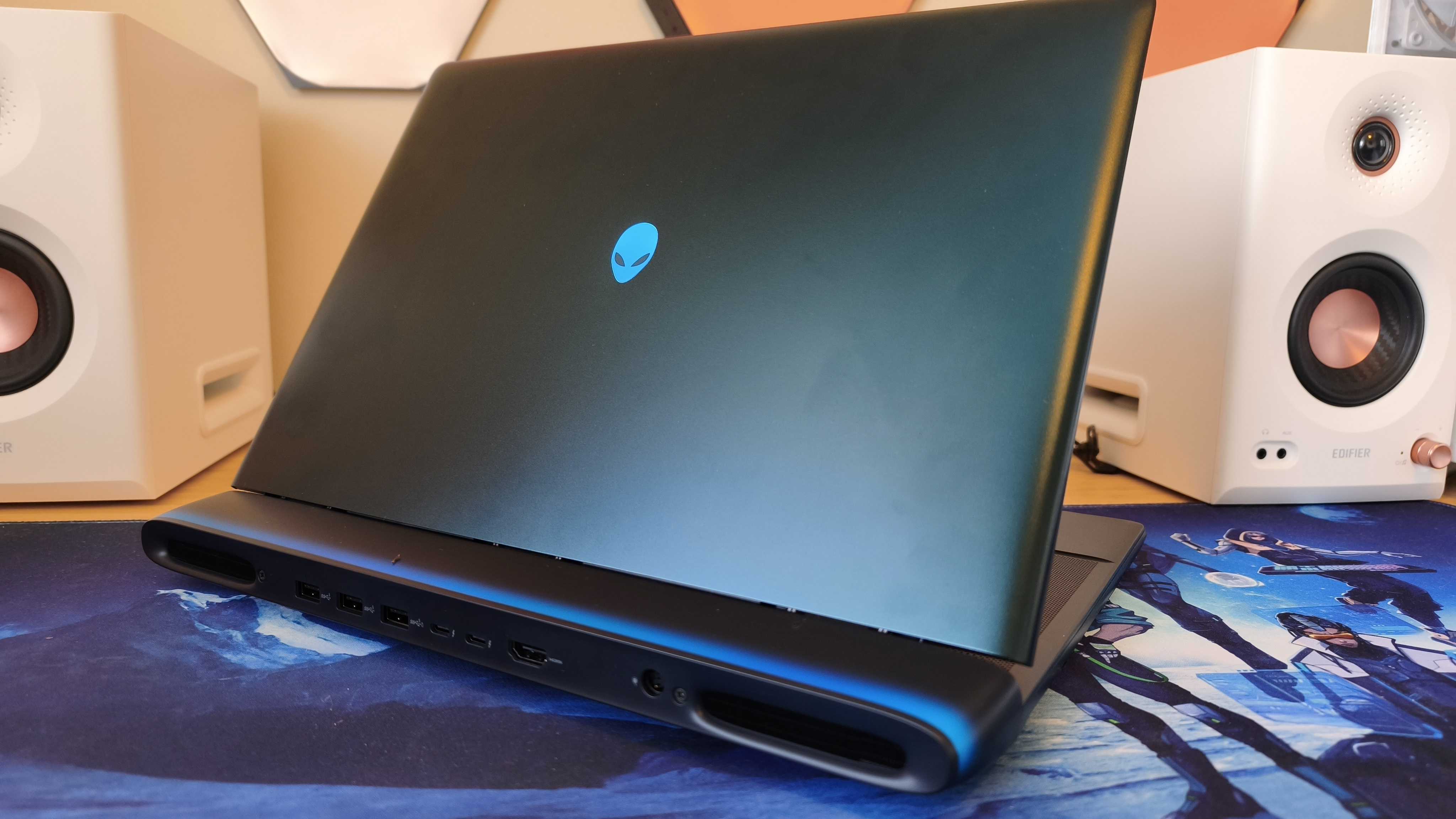

30-second review: The Alienware 16 Area-51 is an animation powerhouse that delivers uncompromising performance for demanding projects. With the flagship NVIDIA RTX 5090 GPU and Intel Core Ultra 9 275HX processor, it excels at GPU-accelerated rendering, real-time viewport playback, and complex rigging operations in Maya, Blender, Cinema 4D, and Houdini. The 16-inch display offers a sharp 2560x1600 resolution at 240Hz, providing silky-smooth scrubbing through timelines and accurate colour representation for final output.

Price: Pricing starts at £1,999.01 / $2,749.99 for the base configuration with an RTX 5070, whilst the flagship RTX 5090 model tested here costs £3,499 / $4,049.99. This represents a considerable investment, but the exceptional performance justifies the price for professional animators working on complex character animations, particle simulations, or tight production deadlines.

Design: The Alienware 16 Area-51 features an anodised aluminium chassis with bold gaming aesthetics, including customisable RGB lighting throughout. Whilst the design may not suit every animation studio's aesthetic, the build quality is robust and the keyboard offers excellent responsiveness for hotkey-intensive workflows. At 3.4kg, this is firmly a desktop replacement rather than a portable solution. The comprehensive port selection includes two Thunderbolt 5 ports, two USB-A ports, HDMI 2.1, and an SD card slot, all positioned on the rear for clean desk organisation when connecting drawing tablets or external displays.

Performance: Equipped with the Intel Core Ultra 9 275HX and NVIDIA RTX 5090, this laptop delivers extraordinary performance across animation workflows. Benchmark testing showed exceptional results in Cinema 4D rendering, with Adobe Photoshop and Premiere Pro performance amongst the best ever tested—essential for compositing and post-production work. The RTX 5090's 24GB VRAM handles complex scenes with multiple high-resolution textures and subdivision surfaces effortlessly, whilst the processor's 24 cores dramatically accelerate physics simulations and final frame rendering. The integrated NPU enhances AI-powered rotoscoping and motion tracking tasks.

Battery: The battery provides approximately 3.5 hours under standard office workloads, which is admittedly limited. For intensive animation sessions involving rendering, simulation baking, or real-time playback of heavy scenes, you'll need to remain connected to mains power. Combined with its substantial weight, this laptop is best suited as a stationary animation workstation.

Learn more in our full Alienware 16 Area-51 review.

"The RTX 5090 is a game-changer for animation work. Complex character rigs with hundreds of controls remain responsive in the viewport, particle simulations calculate at impressive speeds, and GPU rendering in Cycles or Redshift is remarkably swift. The 240Hz display measured 488 nits and 99% DCI-P3 coverage in testing, providing both the smoothness needed for animation playback and the colour accuracy required for final output review."

The most portable laptop for animation

30-second review: The brand-new-for-2024 HP Omen Transcend 14 is a sleek, relatively lightweight bundle of portable, powerful joy. When we tested it, we appreciated that it's another in a new generation of gaming laptops that don't look like gaming laptops; aka the holy grail for creatives.

Price: This level of performance doesn't come cheap; the HP Omen Transcend 14 costs $1,599.99/£1,449 for the base model and $1,979.99/£1,799 for the model we tested in our full review.

Design: The 14-inch OLED screen is a joy, with 500 nits of peak brightness even in HDR mode (that sounds average, but the OLED tech makes it seem brighter). It's got the trendy 16:10 aspect ratio too, all without falling into the classic "gamer aesthetic", which can be off-putting in professional environments. Plus, at just 1.63kg and 1.79cm thick, it's highly portable, especially considering the level of performance it offers.

Performance: The top-spec model is magnificently powerful for the size, price and build, achieving some excellent benchmarks during our testing across creative tasks from 3D to photo editing – all of which you might need in your animation workflow. The AI tech is to thank for that, as it gives a boost to the already capable combination of the Intel Core Ultra 9 processor and NVIDIA RTX 4070 graphics. Multitasking is smooth, owing to its 32GB of RAM, too.

Battery: Despite all that power, the battery life was ample. We got around seven and a half hours with a moderately heavy workflow.

Learn more in our full HP Omen Transcend 14 review.

"The HP Omen Transcend is a next-gen gaming laptop and creative pocket rocket. It comes packed with an Intel Core Ultra processor with GeForce graphics to suit both gamers and creatives."

The best MacBook for animation

30-second review: The MacBook Pro 16-inch (M4 Pro, 2024) is a dream machine for animators, excelling in both 2D and 3D workflows. Whether you’re crafting complex motion graphics or rendering high-resolution frames, this laptop delivers industry-leading performance with style.

Price: It's expensive: the base model starts at £2,499, with higher spec configurations reaching up to £7,349. But when compared to equivalent desktop workstations, it's still worth the money.

Design: Apple’s classic unibody design remains a hallmark of quality. The 16.2-inch Liquid Retina XDR display is a standout, offering vibrant colours, deep contrasts, and up to 1600 nits brightness for HDR content. The slim yet durable chassis makes it a perfect companion for on-the-go animators. Ports include MagSafe charging, Thunderbolt 5, and an SD card slot.

Performance: The M4 Pro/M4 Max chips are tailored for heavy animation workloads. Tests with industry-standard software like Blender, After Effects and Cinema 4D show remarkable fluidity, even during complex simulations and rendering. Unified memory options up to 128GB ensure seamless multitasking.

Battery: A major highlight of our testing was the 22-hour battery life for media playback and 10-12 hours of intensive animation work.

Read more: MacBook Pro 16 (M4 Pro, 2024) review

"This MacBook is powerful enough for any use case. The portability and excellent battery life also make it suitable for working on the move."

The best 2-in-1 laptop for animation

30-second review: It's not necessarily designed for animation first and foremost, but the HP OmniBook Ultra Flip 14 has enough chops to handle 2D work, thanks in part to the latest round of Intel processors. It's a 2-in-1, meaning it also has a tablet mode for those who like having the option to use that kind of configuration, and it has an embedded NPU for next-gen AI features.

Price: The HP OmniBook Ultra Flip 14 is gunning for a high price bracket. It goes for £1,899 (with 32GB RAM) in the UK and $1,599.99 (with 16GB RAM) in the States, and for that money you can get an M4 Mac. The HP does have a few assets of its own, like the 2-in-1 design or its generous 2TB storage, but it's still quite an ask.

Design: The distinctive 'cut-off corners' look of the OmniBook is pretty stylish as far as we're concerned, and it converts between laptop and tablet modes very smoothly. The screen isn't the brightest but it does offer good colour coverage, with our testing bearing out 99% of DCI-P3, 96% of Adobe RGB and the expected 100% of sRGB. There's also a big trackpad and plenty of ports, so all in all, we've no complaints in the design department.

Performance: It's worth saying up front that the GPU is probably the weakest aspect of the OmniBook. This may sound like a kiss of death for animators but it's all relative – the GPU offers decent enough power for 2D animation and makes this a solid choice for anyone starting out, or for anyone whose animation needs aren't too complex or demanding. Elsewhere, the laptop puts in a good shift thanks to its latest-generation Intel chip – its scores in our Geekbench tests put it roughly on par with a MacBook M2 or M3, which is certainly nothing to complain about.

Battery life: You can tell that Intel has been optimising its more recent chips for efficiency rather than raw power, and the OmniBook accordingly achieves a very respectable battery showing. We managed to keep it running for 12 hours before needing a charge, and if you're animating for longer than that, you probably need a work/life balance intervention.

Learn more in our HP OmniBook Ultra Flip 14 review

"This 2-in-1 laptop has entered AI-powered territory while combining the latest Intel processor with a sharp OLED screen. It’s a laptop for everyday use and is more suited to light creative work, rather than being a rendering powerhouse or a dedicated studio machine - and that’s OK. It's immensely portable and convenient for working on the go, and the excellent screen is a winner if you don’t need the big GPU."

The best OLED laptop for animation

30-second review: It's a close call between the Lenovo Yoga Pro 9i gen 9 and our overall top pick, the ASUS ProArt P16. In our review, we called it a 'must-have' for creatives, especially thanks to its beautiful display combined with the AI-boosted CPU, plus the fact that it's a little more affordable than some of its similarly specced competition. It features some useful AI tools, too.

Price: The Lenovo Yoga Pro 9i gen 9 starts at £1,635/$1,699, making it fairly comparable to other laptops of its class and good value overall.

Design: Lightweight and sleek by design, its aluminium chassis and solid hinge ensure that it's durable while still being portable. The keyboard ergonomics are fantastic, but the real hero is its 3.2K HDR 16-inch display, which has 1600 Mini-LED dimming zones that make it ideal for animation. Unlike many modern laptops, the Lenovo Yoga Pro 9i gen 9 also offers a wide array of connectivity ports, including a full SD slot.

Performance: Depending on which configuration you opt for, the Lenovo Yoga Pro 9i gen 9 can get pretty powerful. The model we tested proved plenty capable of handling heavy creative loads, and the AI-boosted CPU certainly helps in this department. Also of use is Lenovo's Creator Zone, which features AI tools included specifically with creatives in mind, from optimising your creative tools to offering generative image creation that can actually learn your personal visual style.

Battery life: Alas, the battery life is the concession made by this otherwise excellent all-rounder. In our gaming test (which emulates creative loads), we got only two hours of battery life. When streaming with a slightly dimmed screen, that rose to eight hours.

Learn more in our Lenovo Yoga Pro 9i gen 9 review.

"This Lenovo Yoga is one of the best MacBook Pro alternatives on the market right now, and a must-have for creative professionals. It pushes the boundaries of performance and design to appeal to creative professionals, gamers or casual users who crave spectacular visuals, since the stunning 3.2K mini LED screen is a joy to work on."

The best larger laptop for animation

30-second review: The MSI Stealth 18 HX AI is a desktop replacement in all but name. With its Intel Core Ultra 9 CPU, RTX 5080 GPU, 64GB RAM and mini-LED 4K-equivalent display, it’s a dream machine for animation, 3D rendering, and video editing. It outpaces most rivals in benchmarks, rivalling even Apple’s M4 Max in creative tasks. But weighing almost 3kg, with noisy fans and modest battery life, it’s built for power, not portability.

Price: Starting at £3,799/$3,999.99, the Stealth 18 HX AI sits firmly in the ultra-premium tier, but performance this strong makes the cost easier to justify for pro-level creative workflows.

Design: At 18 inches, it’s unavoidably huge, though its magnesium-aluminium alloy chassis feels sturdy. MSI has kept the gaming flourishes subtle, with just a glowing dragon logo and RGB keyboard hints. The real star is its brilliant 715-nit mini-LED display with near-perfect colour coverage.

Performance: Our benchmarks show it’s among the fastest laptops tested, excelling in Premiere Pro, 3D rendering, and AI-driven tasks. The 64GB RAM and powerful cooling help it sustain top speeds, though you’ll want it on a desk, not your lap.

Battery: All that power comes at a price. Expect no more than 2–3 hours of light use. For serious creative work, it’s best left plugged in.

Learn more by reading our MSI Stealth 18 HX AI review.

"It’s enormous, can get hot and loud and shouldn’t be used on your lap, but it carries an excellent screen to complement its processing power."

The best dual screen laptop for animation

30-second review: The ASUS Zenbook Duo (2025) transforms animation workflows with its dual 14-inch OLED touchscreens that can be arranged vertically or side-by-side. The included ASUS Pen 2.0 stylus offers exceptional sensitivity with interchangeable tips that simulate different drawing tools, making it ideal for frame-by-frame animation and digital art creation.

Price: Starting at $1,799 / £2,099, this represents a significant investment for animation professionals. The innovative dual-screen design and powerful CPU help justify the cost for 2D animators and those creating motion graphics, though 3D specialists might prefer allocating this budget toward more graphically powerful alternatives.

Design: ASUS has perfected a transformative form factor that adapts to animation needs – use it as a standard laptop for scripting and editing, then unfold to dual-screen mode for animation production. The built-in stand enables multiple viewing angles, while the detachable keyboard creates an unobstructed drawing surface. At 1.65kg, it remains remarkably portable for animation work on the go.

Performance: The Intel Core Ultra 9 285H processor with 32GB LPDDR5X RAM gave us a Geekbench 6 multicore score of 16,052, which suggests it will handle 2D animation workflows seamlessly. However, the integrated Intel Arc graphics may become a bottleneck for complex 3D animation projects, especially when working with detailed textures or particle effects.

Battery life: 2D animators can enjoy over 10 hours of battery life using a single screen for drawing, though running animation previews on both displays will significantly reduce this duration. Studio-based animators should keep the charger handy during intensive work sessions.

Read more: Asus Zenbook Duo OLED (2025) review.

"Asus' gorgeous two-screened laptop returns for a 2025 refresh, with an improved Core Ultra 9 Arrow Lake CPU model. It's as good as ever, although the lack of a discrete graphics chip really lets it down - but if you can live with that then it’s a top-notch portable computing experience."

The best AI laptop for animation

30-second review: If you want the most powerful AI-ready laptop on the market, look no further than the Acer Predator Helios 16 AI. With an Intel Core Ultra 9 processor, RTX 5090 GPU, up to 64GB of RAM, and Intel’s dedicated AI Boost NPU, this laptop demolishes creative workloads like 3D rendering, animation, and generative AI. The 16-inch OLED screen with a 240Hz refresh rate makes both games and creative projects look phenomenal, and the extensive port selection (including Thunderbolt and Wi-Fi 7) keeps it futureproof.

Price: Starting at £3,799.99, it’s undeniably pricey – but that’s the cost of raw, unrestrained power. If your budget can stretch, you’ll be rewarded with a machine that leaves practically nothing out of reach.

Design: Let’s be clear: this is not a slimline laptop. At 2.62kg with a gamer aesthetic, chunky fans, and RGB lighting, it’s more of a desktop replacement than a travel-friendly companion. Still, the solid metal build inspires confidence, and the OLED panel’s 2.5K resolution and 100% DCI-P3 coverage make it a creative powerhouse for animation and design.

Performance: In both our benchmark test and real-world use, it comfortably outpaced RTX 4090 machines, with Geekbench GPU scores around 25% higher than last-gen rivals. 64GB of RAM and a 2TB SSD mean even the most intensive AI or animation projects are handled with ease. The catch? Battery life is practically an afterthought – keep it plugged in for serious work.

Battery: While the OLED panel and top-tier GPU look great, they drain power at an alarming rate. For all but the lightest workloads, you’ll need to stay near a socket – portability is its weakest point.

For full details, check out our Acer Predator Helios 16 AI review.

"The Predator is aimed at one very specific group: those who need the absolute ‘most’ from a laptop, and for whom budget is also not an issue. If you balk at the idea of compromise then this is the laptop for you, unless battery life is something you prioritise."

Also tested

The above may be our top picks for animation, but we've tested plenty of other laptops worth considering. Below you'll find some further suggestions that may be a better fit for your particular needs and budget.

MSI Stealth 16 AI Studio A1V combines serious power with surprising portability. Packing an Intel Core Ultra 9, RTX 4090, and 32GB RAM in a sub-2kg chassis, it excels at creative workflows. It's expensive but offers value, with stunning 4K visuals, solid benchmarks and good portability.

Read our 4.5-star review

Dell Precision 7780 is a heavyweight 17-inch mobile workstation built for demanding 3D and video workflows. With an Intel i7, RTX 3500 GPU, and upgrade options, it delivers excellent performance. However, it’s bulky, lacks 4K, and offers poor battery life. Premium but powerful.

Read our 4.5-star review

Dell Precision 5470: It might not look like much, but this machine is a portable powerhouse that, on test, proved to be plenty capable of handling a range of creative tasks. Our benchmark tests put it close to a MacBook Pro M2 Pro.

Read our 4.5-star review

ASUS Zenbook 14X OLED: Housing an NVIDIA GeForce RTX 3050 graphics card paired with a 14-core Intel i9 processor as well as 32GB of RAM, this machine rivals the MacBook Pro in power while keeping the price tag below £/$1,500. We love its beautiful OLED screen, though it's lacking in the battery life department.

Read our 4-star review.

MSI Creator Z17 HX Studio: Yes, it's pricey, but that nets you a powerhouse machine with a 16:10 display which supports a stylus, making it excellent for creatives. In our benchmark testing, it handled multitasking whilst running creative apps with ease, though battery life left something to be desired.

Read our 4-star review

FAQs

What specs do I need in a laptop for animation?

The specs you will need in a laptop for animation will depend on what software you use, and it's always worth checking the minimum specs quoted by the developer. In many cases, the minimum system requirements are not particularly high. For example, CelAction2D states a minimum of 8GB of RAM and an Intel i5 CPU, which is fairly standard.

There is a difference, however, between minimum and recommended. To refer to CelAction2D once more, it recommends 16GB of RAM and an Intel i7 processor. Furthermore, more demanding animation software such as Autodesk Maya, Adobe Animate and Blender does require significant processing power for complex calculations and rendering. So if you're using tools like these, we'd recommend a 10th or 11th generation Intel Core i7 or i9 or AMD Ryzen 7 or 9 series H. We'd also recommend going for a laptop with a dedicated graphics card.

For 2D animation, meanwhile, we recommend at least a GPU with 4GB of VRAM. For 3D animation, 8GB of VRAM would be preferable. As for RAM, we'd recommend at least 16GB to be able to handle multiple layers and complex character rigs and provide smooth playback. Going to 32GB is likely to provide a smoother experience for extensive rendering and simulation in 3D animation.

You'll also need enough storage space to store your animation files. Solid-state drives (SSDs) are the way to go for the best read/write speeds, and you'll probably want at least 512GB.

Finally, you'll want a high-resolution display (at least Full HD, 1920x1080) with good colour coverage and accuracy. Some animators like a touchscreen so that they can use a stylus for drawing and sketching, although there's also the option to use a graphics tablet for this.
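
If you like to compare spec sheets methodically, the suggestions in this answer can be written down as a quick checklist. The sketch below is purely illustrative: `LaptopSpec`, `check_specs` and the example machine are hypothetical names made up here, and the thresholds are simply the figures quoted above (16GB of RAM, 4GB of VRAM for 2D or 8GB for 3D, a 512GB SSD and a Full HD display), not requirements published by any software developer.

```python
from dataclasses import dataclass


@dataclass
class LaptopSpec:
    """Hypothetical container for the specs discussed in this FAQ."""
    ram_gb: int
    vram_gb: int  # use 0 for a machine with no dedicated graphics memory
    ssd_gb: int
    display_width: int
    display_height: int


def check_specs(spec: LaptopSpec, workflow: str = "2d") -> list[str]:
    """Return the ways a laptop falls short of this guide's suggestions."""
    if workflow not in ("2d", "3d"):
        raise ValueError("workflow must be '2d' or '3d'")

    # Assumption: thresholds are taken straight from the text above.
    min_vram = 4 if workflow == "2d" else 8

    issues = []
    if spec.ram_gb < 16:
        issues.append("RAM below 16GB")
    if spec.vram_gb < min_vram:
        issues.append(f"VRAM below {min_vram}GB")
    if spec.ssd_gb < 512:
        issues.append("SSD below 512GB")
    if spec.display_width < 1920 or spec.display_height < 1080:
        issues.append("display below Full HD")
    return issues


# An invented example machine, not one of the laptops reviewed here.
example = LaptopSpec(ram_gb=16, vram_gb=4, ssd_gb=512,
                     display_width=1920, display_height=1200)
print(check_specs(example, "2d"))  # prints []
print(check_specs(example, "3d"))  # prints ['VRAM below 8GB']
```

An empty list means the machine clears every suggestion for that workflow; treat the result as a starting point and still check the requirements quoted by the developer of the software you use.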

Are gaming laptops good for animation?

Gaming laptops are some of the most powerful machines on the market, and increasingly creatives are finding themselves investing in an RGB-clad laptop to handle their processing and rendering needs - we've even included gaming laptops from the likes of Alienware, MSI and Acer in our selection of the best laptops for animation above. Most of the best gaming laptops will be perfectly capable of meeting an animator's needs. If you're wedded to Apple, see our best MacBooks for college.

How to choose the best laptop for animation

Choosing the best laptop for animation will depend on the type of animation you do, how you work and your budget. We've tested the laptops above for use with animation software, but some are more geared to professionals while others provide more portability or better value. All meet the minimum requirements of a modern processor and at least 8GB of RAM (most can be configured higher than that, and we'd recommend going for 16GB if your budget allows it).

With the increasing popularity of GPU render engines like Redshift and Octane, you should also think about whether you will be using your GPU or CPU to render animations. For CPU rendering, you want to look for a high clock speed so the machine can animate in real time and handle the complex calculations needed to render animations.

For more complex 3D animations, you’re probably better off using your GPU, and so we’d suggest looking at laptops with a dedicated graphics card - especially if you’re going to be attempting final-quality rendering. While these will inevitably be more expensive, the drastic impact they will have on the speed of your workflow is well worth the investment.

How we test the best laptops for animation

We have tested all the laptops in this guide hands on. We run a series of benchmark tests on each device to measure power and performance, including:

• Cinebench R23/2024 - this assesses the performance of a computer's CPU and GPU using real-world 3D rendering tasks

• Geekbench 5/6 - this tests the CPU's processing power, both by using a single core for a single task at a time and by using all the CPU's cores to see its ability to multitask

• PCMark 10 - this assesses a computer’s ability to run everyday tasks from web browsing to digital content creation, testing app launch speeds, drawing and animation software and 3D rendering, as well as battery life

As well as technical benchmarking, we evaluate machines in real-world situations, using animation software such as Adobe Animate and Autodesk Maya, and we also push them to the limit with multiple applications running. We evaluate power, speed, display, design and build as well as value. For more details, see our article on How we test.

Erlingur is the Tech Reviews Editor on Creative Bloq. Having worked on magazines devoted to Photoshop, films, history, and science for over 15 years, as well as working on Digital Camera World and Top Ten Reviews in more recent times, Erlingur has developed a passion for finding tech that helps people do their job, whatever it may be. He loves putting things to the test and seeing if they're all they're hyped up to be, to make sure people are getting what they're promised. Still can't get his wifi-only printer to connect to his computer.

- Tom May, Freelance journalist and editor