Even AI's mind is blown by this optical illusion

We know from our collection of the best optical illusions that our eyes and brains can play tricks on us. In many cases, scientists still aren't sure why, but the discovery that an AI model can fall for some of the same tricks could help provide an explanation.

While some researchers have been training AI models to make optical illusions, others are more concerned about how AI interprets illusions, and whether it can experience them in the same way as humans. And it turns out that the deep neural networks (DNNs) behind many AI algorithms can be susceptible to certain mind benders.

Eiji Watanabe is an associate professor of neurophysiology at the National Institute for Basic Biology in Japan. He and his colleagues tested what happens when a DNN is presented with static images that the human brain interprets as being in motion.

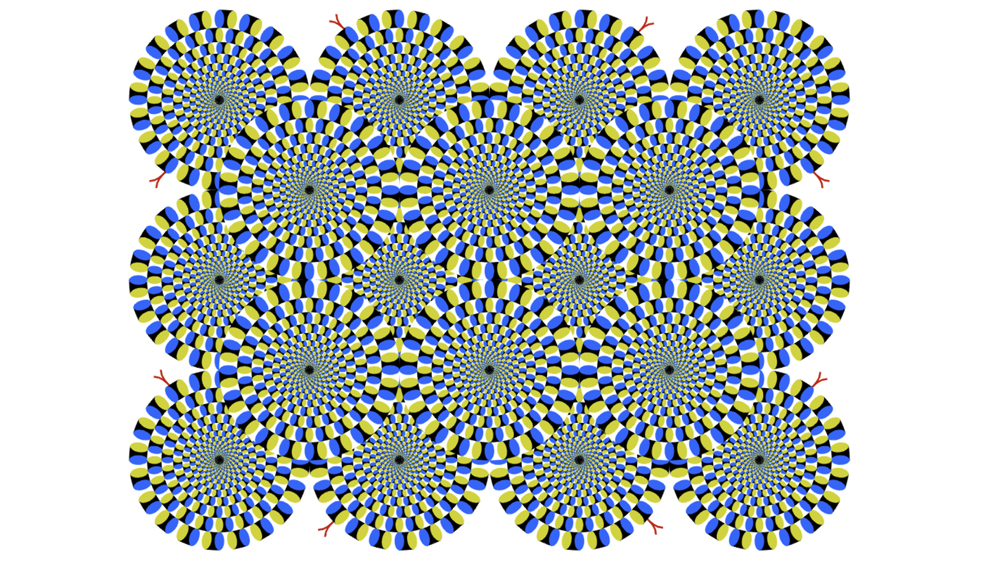

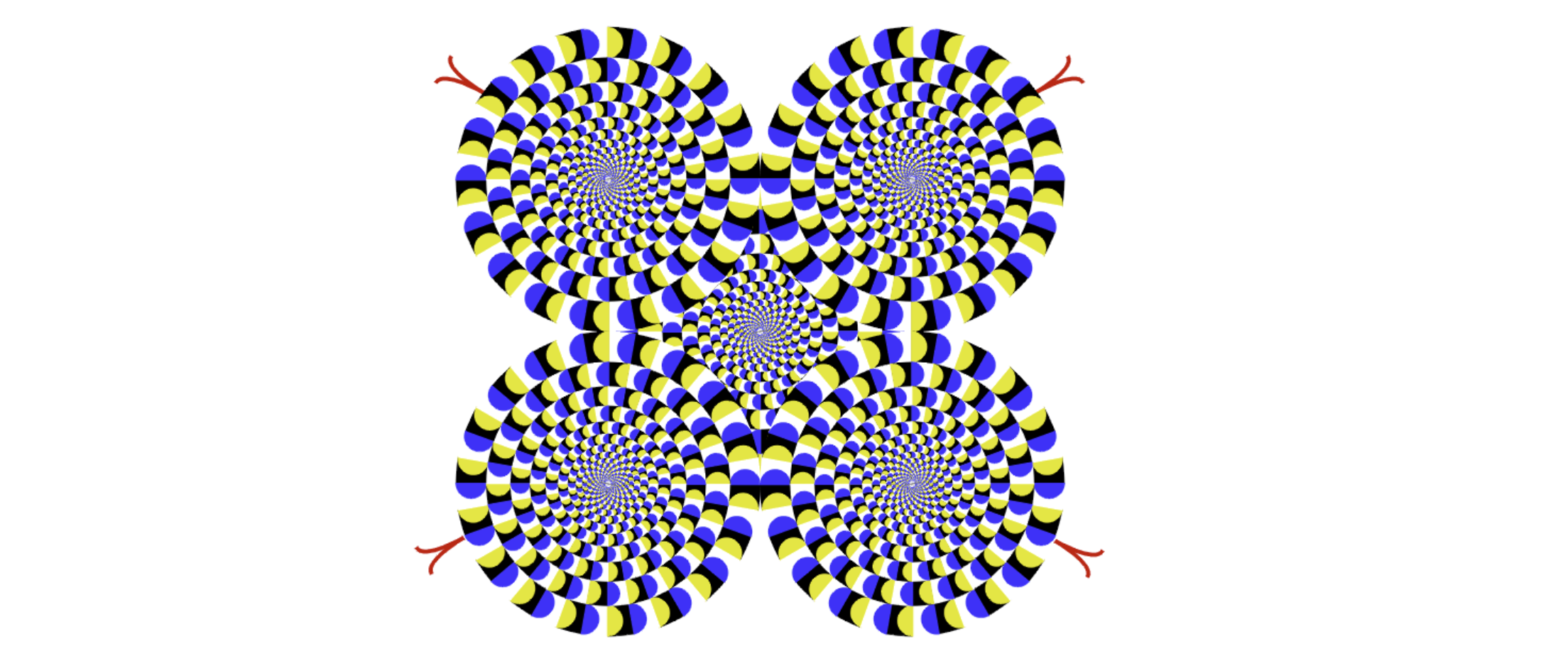

To do that, they used Akiyoshi Kitaoka's famous rotating snakes illusions. The images show a series of circles, each formed by concentric rings of segments in different colours. The images are still, but viewers tend to perceive every circle as moving except the one they are focusing on at any given moment, which they usually correctly see as static.

Watanabe's team used a DNN called PredNet, which was trained to predict future frames in a video based on what it learned from previous ones. It was trained on videos of the kind of natural scenery humans typically see around them, but not on optical illusions.

The model was shown various versions of the rotating snakes illusion as well as an altered version that doesn't trick human brains. The experiment found that the model was fooled by the same images as humans.

The rotating snakes illusion explained?

Watanabe believes the study supports the idea that the human brain uses a process known as predictive coding. According to this theory, we don't passively process images of objects in our surroundings. Instead, our visual system first predicts what it expects to see based on past experience. It then processes the discrepancies between that prediction and the actual visual input.

On this theory, something in the rotating snakes images leads the brain, drawing on past experience, to predict that the snakes are moving. The advantage of processing visual information this way would presumably be that it lets us interpret what we see more quickly. The cost is that we sometimes misread a scene.
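For readers curious how predictive coding might look in code, here is a deliberately simplified sketch. It is not PredNet and not Watanabe's method. It assumes a hypothetical hand-written predictor that expects each pixel to keep changing at the same rate as it did over the previous two frames, and it passes on only the prediction error, the part of the input the prediction failed to anticipate. The names `predict_next` and `prediction_errors` are illustrative.

```python
from typing import List

Frame = List[int]  # a tiny "image": one brightness value (0-255) per pixel


def predict_next(prev: Frame, curr: Frame) -> Frame:
    # Hypothetical predictor: assume each pixel keeps changing at the same
    # rate as between the last two frames (linear extrapolation).
    # PredNet learns its predictions from video; this rule is hand-written.
    return [c + (c - p) for p, c in zip(prev, curr)]


def prediction_errors(frames: List[Frame]) -> List[Frame]:
    # Compare each new frame with what was predicted from the two before it.
    # In predictive coding, only this mismatch ("surprise") is processed further.
    errors = []
    for t in range(2, len(frames)):
        predicted = predict_next(frames[t - 2], frames[t - 1])
        errors.append([actual - guess for actual, guess in zip(frames[t], predicted)])
    return errors


if __name__ == "__main__":
    # Pixel 1 brightens steadily, so it is fully predicted (error 0).
    # Pixel 2 jumps unexpectedly in the last frame, so it produces an error.
    frames = [[10, 50], [20, 50], [30, 50], [40, 90]]
    for err in prediction_errors(frames):
        print(err)  # prints [0, 0] then [0, 40]
```

A learned model such as PredNet replaces this fixed rule with predictions acquired from training video, which is presumably how certain still patterns can end up being "predicted" as moving.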

There were some discrepancies between how the AI and humans saw the illusion, though. While humans can 'freeze' any specific circle by staring at it, the AI was unable to do that: the model always saw all of the circles as moving. Watanabe attributes this to PredNet's lack of an attention mechanism, which prevents it from focusing on one specific spot.

He says that, for now, no deep neural network can experience all optical illusions in the same way that humans do. As tech giants invest billions of dollars in trying to create an artificial general intelligence capable of surpassing human cognitive abilities, it's perhaps reassuring that AI still has such weaknesses.

Joe is a regular freelance journalist and editor at Creative Bloq. He writes news, features and buying guides and keeps track of the best equipment and software for creatives, from video editing programs to monitors and accessories. A veteran news writer and photographer, he now works as a project manager at the London and Buenos Aires-based design, production and branding agency Hermana Creatives. There he manages a team of designers, photographers and video editors who specialise in producing visual content and design assets for the hospitality sector. He also dances Argentine tango.