Design the perfect URL

Graphics, content and navigation are all well and good, but it’s important not to neglect the URL. Faruk Ates explains how to get the most from your site’s address

This article first appeared in issue 215 of .net magazine - the world's best-selling magazine for web designers and developers.

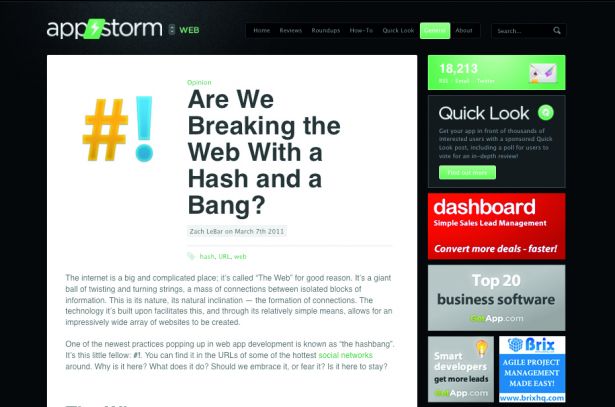

URL design has become a topic of discussion again over the past year. It started with Twitter’s autumn 2010 redesign, which seems to have validated what was generally considered to be a poor web design technique for public-facing websites: the ‘hash-bang’ URL.

These are URLs that, directly after the domain itself, start with ‘#!’ – for example, twitter.com/kurafire becomes twitter.com/#!/kurafire. The part of the URL that uniquely identifies the content of the page is then added at the end. The technique is meant to improve performance: it avoids reloading an entire page when only a small piece of it needs to change. But it doesn’t come without serious downsides.

This tutorial will examine the finer details of URL design and explain why hash-bangs are to be discouraged. But first let’s take a look at the fundamentals.

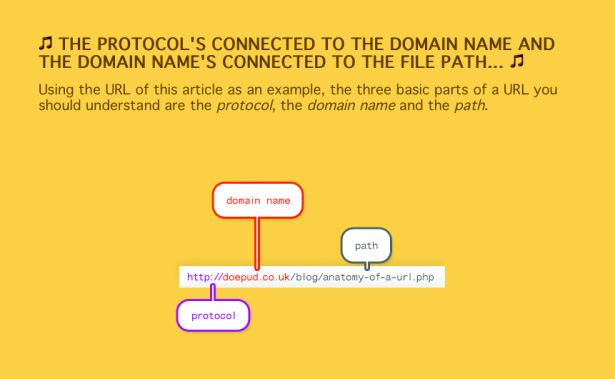

What is a URL?

The term URL stands for Uniform Resource Locator, and specifies the location of a certain resource, such as a web page. Since a location is the identification of a place, any URL is also a URI, or Uniform Resource Identifier.

However, a URL specifies not just the location of a resource but also the method for accessing it – the scheme or protocol. The syntax of a URL is as follows:

scheme://domain/path?query_string#fragment_identifier

Here, we’ll focus on web addresses that use the HTTP protocol, and ignore things such as MAILTO, FTP or FILE, as well as ports, embedded usernames and passwords. An HTTPS address is the same as any regular HTTP URL, with the added requirement that it uses a secure connection.
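As a rough illustration of the syntax above, Python's standard `urllib.parse` module can split an address into these parts. The address used here is a hypothetical one, made up only so that every component is present.

```python
from urllib.parse import urlsplit

# Hypothetical address, chosen only so that every component is present.
url = "http://yourdomain.com/blog/2011/05?sort=date#comments"
parts = urlsplit(url)

# Map the parsed pieces onto the names used in the syntax line above.
components = {
    "scheme": parts.scheme,
    "domain": parts.netloc,
    "path": parts.path,
    "query_string": parts.query,
    "fragment_identifier": parts.fragment,
}

for name, value in components.items():
    print(f"{name}: {value}")
```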

Domain

While the domain part is obvious, it’s worth mentioning that www. is not part of a domain. It’s merely a subdomain that’s commonly used by websites but is technically unnecessary. Many non-technical people think it’s needed, so whether you should use www.yourdomain.com or just yourdomain.com in your marketing or as the primary web address depends on your audience. Regardless, both addresses should get visitors to one and the same website.
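One way to make both addresses lead to the same site is to pick one as canonical and redirect the other to it. The sketch below only computes the redirect target; it assumes the bare domain is the primary address, and `CANONICAL_HOST` and `canonical_url` are illustrative names rather than part of any particular server or framework.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption: the bare domain is the primary address. Swap the logic
# around if your audience expects the www. form instead.
CANONICAL_HOST = "yourdomain.com"

def canonical_url(url: str) -> str:
    """Return the address a visitor should be redirected to."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host == "www." + CANONICAL_HOST:
        host = CANONICAL_HOST
    return urlunsplit((parts.scheme, host, parts.path, parts.query, parts.fragment))
```

A server would typically answer a request for the non-canonical host with a permanent redirect to this address, so that visitors and search engines settle on a single version.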

Path

The path is one of the most important parts of URL design and should be created like a folder structure, using forward slashes, regardless of your backend server setup. Each unique page of your website or web application should have its own unique path.

This should be as descriptive and meaningful as possible, and be readable to humans. After all, URLs are meant for people, not search engines – the latter won’t have trouble remembering a long string of random characters, but users will share your URLs with other people.

Keep your paths as short as possible. /about-this-company is unnecessarily long; /about will do. Readable phrases such as yourname.com/wrote/some-blog-post or yourname.com/works-for/a-cool-company can add a nice touch, but it’s preferable to retain brevity.

Query strings

The majority of websites enable visitors to search. This is what query strings are best for, as well as related actions such as filtering and sorting the contents of a page.

In the past, a lot of server-side systems misused query string parameters to serve different pages of a site, such as somesite.com/index.php?p=about. Other sites went one step too far in the right direction and rewrote search query strings as a path, to something resembling this: /q/My%20search%20query/sortby/date/order/desc/.

Both of these approaches are bad practices that I recommend you avoid. Most importantly, a query string should be treated as an optional addition to the page; the URL should work to produce a valid and useful page even when it’s removed. Pagination is a valid query string use for pages with a changing content stream.
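A minimal sketch of that principle: the path alone identifies the page, and sorting or pagination is layered on as an optional query string. The `/articles` page and the parameter names are assumptions made for the example.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit

def with_query(page_url: str, **params) -> str:
    """Add optional filtering, sorting or pagination to a page address."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(params), ""))

def without_query(url: str) -> str:
    """Strip the query string; the result should still be a useful page."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))

# Hypothetical listing page with sorting and pagination applied.
listing = with_query("http://somesite.com/articles", sortby="date", order="desc", page=2)
print(listing)
print(without_query(listing))
```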

Fragment identifiers

Fun fact: the fragment identifier is the only part of a URL that doesn’t get sent to the server hosting the page. Instead, it’s meant to identify a specific location inside the resulting page, such as a certain section of an FAQ or a footnote at the end of an article.
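To make this concrete, the sketch below approximates what a client puts in its HTTP request for a given address: the path and query string, but never the fragment. `request_target` is an illustrative helper, not a library function, and the FAQ address is hypothetical.

```python
from urllib.parse import urlsplit

def request_target(url: str) -> str:
    """Return the path and query a client sends to the server for this URL."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    return target  # parts.fragment is deliberately left out

print(request_target("http://yourdomain.com/faq#billing"))
print(request_target("http://twitter.com/kurafire"))
print(request_target("http://twitter.com/#!/kurafire"))
```

For the hash-bang address, the server is only ever asked for the root page; everything after the hash stays in the browser.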

Browsers can navigate between multiple fragment identifiers without reloading the page, and it’s this mechanism that people have chosen to abuse to make entire sites work without any page reloads between navigating (the new twitter.com, for example).

Since that’s a desirable user experience, browser vendors created the HTML5 History API, which is an appropriate (albeit brand new) technique for navigating around sites without triggering page reloads or abusing fragment identifiers.

For detailed instructions on how to use the HTML5 History API, I recommend the ‘Manipulating History’ chapter of Mark Pilgrim’s online book Dive Into HTML5.

Breaking the agreement

Any combination of URL components represents a quiet agreement: this particular URL will return a unique resource or data object, optionally referring to a specific subsection within that resource.

Because fragment identifiers aren’t sent to the server, one could argue that hash-bang URLs aren’t technically valid.

Citing the Wikipedia page on URLs: “In computing, a Uniform Resource Locator (URL) is a Uniform Resource Identifier (URI) that specifies where an identified resource is available and the mechanism for retrieving it.” A hash-bang-based URL insufficiently specifies the mechanism for retrieving the content, as it requires a JavaScript round trip to the server after the server has already sent the browser an HTML page – a page that doesn’t have the content associated with the requested URL (yet).

Put another way, hash-bangs change the mechanism for retrieving a resource. It’s no longer defined simply and solely by a URL’s scheme, but by “fully functioning JavaScript as determined and delivered by the server and interpreted by a browser-grade JavaScript processor”.

This may all seem pedantic, but the significance becomes clear when you consider the reality of how resources are accessed. A browser loading a URL is obviously the most common way for a web page to get loaded, but it’s not the only method. Any simple wget- or curl-based attempt to pull in content from the web will no longer work, and any piece of software that loads web content now has to include a full JavaScript parser to support such URLs. And that’s all assuming the JavaScript doesn’t get filtered out by some proxy server or firewall, and doesn’t contain any errors anywhere in the page. When users turn JavaScript off in their browser, these sites will stop working.

If breaking the quiet agreement and having the entire site rely on fragile techniques isn’t bad enough, hash-bangs are also a one-way street to permanent maintenance and support. You can’t use server-side rewriting for your URLs, even when you redesign again. Thus, unless you want to break your incoming links and people’s bookmarks, you’ll always have to do some processing on your domain’s primary landing page to support these URLs once you’ve put them out there.

Bad practices

There are many different ways to design your URLs. The fundamentals above are all great techniques, but we should know what makes for bad URL design in order to fully understand and appreciate what makes good URL design good. Here are some practices to avoid, starting with the worst offenders and proceeding to methods that are merely ill-advised:

Page identification hashes

Some (mostly ancient) content management systems or blog engines identify each unique page with a long string of random characters; something like this: 5F0C866C-6DDF-4A9A-9515-531B0CA0C29C.html. If your content management system or site engine generates such URLs, find out how to override or turn off that behaviour immediately; if that’s not possible, you really are better off getting a more modern CMS. There are only downsides to these URLs – for your users and yourself – and countless good, modern systems available to power your site that avoid this terrible technique.

Session hashes

While not as bad as when used for pages, hashes used for sessions on your site are still bad.

For a start, they can negatively affect SEO. But the bigger concern is that most systems employing them use SHA-1, which is relatively insecure – certainly for user sessions or logins containing any sensitive data.

File extensions

Your URLs should be free of .php, .aspx and so forth. File extensions are not forward-compatible, so if you change backend systems and all your URLs contain .aspx you are forced to do server-side rewriting for every single page on your site. Costly, inefficient and completely unnecessary. The .html extension isn’t really recommended either, but if you’re confident you’ll only ever serve the pages you’re building as static files it’s an acceptable technique.
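If a site already exposes extensions, the clean-up can be sketched as a mapping from each legacy path to its extensionless equivalent, which the server can then redirect to. The set of extensions and the function name below are assumptions for illustration.

```python
import posixpath

# Assumption: these are the backend-specific extensions being retired.
LEGACY_EXTENSIONS = {".php", ".aspx", ".html"}

def extensionless_path(path: str) -> str:
    """Drop a backend-specific extension; leave every other path alone."""
    root, ext = posixpath.splitext(path)
    return root if ext.lower() in LEGACY_EXTENSIONS else path
```

Paths such as downloadable files keep their extension, since there it describes the resource itself rather than the backend that serves it.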

Non-ASCII characters

Sites whose primary content language uses a non-Latin script are somewhat excused, but accented Latin characters and non-basic punctuation are best avoided.

Underscores

These have poorer usability and SEO value, and no tangible benefits over hyphens.
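Both of the last two points can be handled when a page's path is generated from its title. The following is a minimal sketch for Latin-script titles only: it folds accented letters to plain ASCII and turns punctuation, spaces and underscores into hyphens. `slugify` is an illustrative name, and the approach would not suit titles written in non-Latin scripts.

```python
import re
import unicodedata

def slugify(title: str) -> str:
    """Turn a Latin-script title into a lowercase, hyphen-separated path segment."""
    # Decompose accented letters, then drop the accents and any other non-ASCII.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Collapse each run of anything other than a-z or 0-9 (underscores included) into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")
```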

Keyword stuffing

Adding multiple keywords to URLs may help with SEO, but it will confuse your users. Also, you’ll quickly run the risk of being marked as a keyword spammer.

Good practices

While it’s important to know what techniques you should avoid, it’s obviously more worthwhile to know which you should use. We now have all the basics covered, so let’s look at some advanced tactics that make for great URLs.

“Cool URIs don’t change”, as Tim Berners-Lee said back in 1998, but aside from keeping them permanent across redesigns, what else makes for great addresses? Some key considerations are robustness, hackability and namespacing.

Robust URL mapping

People will share your URLs, and sometimes they’ll do so in a medium where the recipients’ environment might wrap the URL across two lines. This is most common with blog posts that include a full date and long title in the URL.

One solution is to keep all your URLs shorter than 70 characters, but that’s not always ideal. Furthermore, the nature of relational database systems is such that ID values are quick to look up but strings aren’t.

With large amounts of traffic, this can be a serious enough bottleneck to take a server down. Adding more hardware can be an expensive solution.

Robust URL mapping can solve both of these problems for you. By embedding a unique ID early on in your path, you can have long, fully descriptive URLs when needed but still enjoy the reliability of shorter URLs and the speed of ID lookups.

Take this URL: yourdomain.com/news/1982-this-is-a-longer-news-posts-title-which-would-almost-certainly-get-broken-onto-a-new-line-in-some-clients. In this example, ‘1982’ is the ID value of the database record for this particular post. Your CMS could then use only this part of the URL to do a successful lookup: yourdomain.com/news/1982.

Everything after that is optional, and nice for humans and SEO, but it won’t matter if it gets wrapped onto two lines.

The only downside with this technique is that IDs themselves aren’t that human-friendly, so that’s a trade-off to consider.
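A minimal sketch of the lookup side of this technique, assuming news posts live under /news/ as in the example: only the leading number is read, and whatever follows it is ignored. The pattern and function name are illustrative.

```python
import re

# The numeric ID comes first; the descriptive part after it is optional.
NEWS_PATH = re.compile(r"^/news/(\d+)(?:-[^/]*)?/?$")

def news_id_from_path(path: str):
    """Return the database ID embedded in a news path, or None if there isn't one."""
    match = NEWS_PATH.match(path)
    return int(match.group(1)) if match else None
```

Because the title portion is never used for the lookup, a link that was cut off where a mail client wrapped the line still resolves to the right post.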

Hackable URLs

In a good, hackable URL, a human can adjust or remove parts of the path and get expected results from your site. Such URLs give your visitors better orientation around your pages, and enable them to easily move up levels. An example is: yourdomain.com/blog/2011/05/20/some-article. Trimming that back to each forward slash should produce predictable results. For example, yourdomain.com/blog/2011/05/20/ should return all posts published 20 May 2011. yourdomain.com/blog/2011/05/ will give an overview of May 2011’s posts, while yourdomain.com/blog/2011/ could be used to bring up an overview of 2011’s posts, or, if that’s too granular, just post totals for each month. yourdomain.com/blog/ should return the latest updates, regardless of their actual publication date.
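One way to check a URL scheme for hackability is to list every address a visitor could reach by trimming a path back to each forward slash, then confirm that each one returns a sensible page. The helper below is a sketch with an illustrative name.

```python
def parent_paths(path: str) -> list:
    """List the paths reached by trimming a path back one segment at a time."""
    segments = [segment for segment in path.split("/") if segment]
    return [
        "/" + "/".join(segments[:count]) + "/"
        for count in range(len(segments) - 1, 0, -1)
    ]

for parent in parent_paths("/blog/2011/05/20/some-article"):
    print(parent)
```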

How detailed you should be about designing such URLs really depends on the site’s content and audience. The more topical content is, the more it benefits from publication dates in the URL; the more frequently new content gets published, the more it benefits from finer granularity.

Other areas – such as categories, products and services – don’t need date components, but no matter how detailed (or not) your URLs end up being, they should ultimately be completely hackable.

It’s a mistake to say that hackable URLs are only used by tech-savvy visitors, and dismiss them if your audience aren’t in that niche. For one, users will only get more tech-savvy over time, not less. But, more importantly, you don’t know every one of your visitors, current and future.

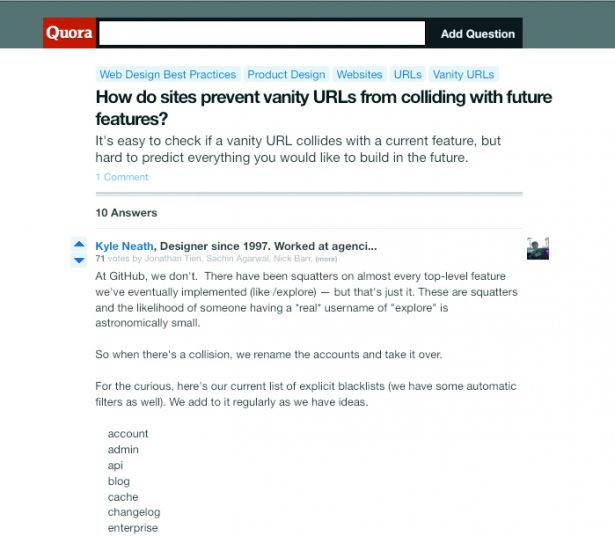

Namespaces

The top-level section of the path is the most valuable real estate in a URL. If your site enables users to sign up and have their own profile at this level, you should create a blacklist of usernames containing all current and possible future features you may wish to have. You can find some great example lists on Quora for this.
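A minimal sketch of such a check at sign-up time follows. The reserved names listed are hypothetical placeholders; a real list would be considerably longer and specific to your product's current and planned features.

```python
# Hypothetical reserved names: top-level paths the site uses now or may want later.
RESERVED_NAMES = {"about", "account", "settings", "blog", "search", "help", "login", "signup"}

def is_username_available(username: str, taken: set) -> bool:
    """Reject names that are empty, reserved for features, or already in use."""
    name = username.strip().lower()
    return bool(name) and name not in RESERVED_NAMES and name not in taken
```

Comparing in lowercase keeps someone from claiming "Settings" or "BLOG" and shadowing a feature path.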

Namespacing features behind the username, such as <username>/lists or <username>/followers, is a great solution for public features that belong to each user individually.

Private things, such as account settings, should never be namespaced behind the username, and should just appear after /account or /settings. Also, don’t mix and match techniques here. If you start putting some features under /feature/<username> and others under <username>/feature, you’ll only end up confusing your users.

If you begin a site as a blog but expect to build it up more in the future, consider adding all posts under /blog/ as a top-level namespace, to avoid potential conflicts later on.

The business case

Because URLs are such an important part of your website or application, they ought to be among the first things you plan and work out with your team. Not just because you don’t want to have to change them over time, but because creating a great structure up front significantly helps with understanding and crystallising your users’ needs and requirements, as well as your own business requirements.

Designing great URLs should be a collaborative effort; if you have dedicated information architects on your team, they should be involved. The same goes for database architects, front-end managers and lead designers. Coming up with a great URL isn’t just a job for your marketing or user experience people; it’s relevant and important for everyone involved in making the product.

Once you have your URL structure, you can quickly and easily plot out a complete site map. This helps information architects design a great hierarchy and navigation, back-end engineers work efficiently and front-end developers turn the scope of sections and pages into clean markup and code. From the conceptual design phase onwards, a great URL structure that’s designed up front and collaboratively will help to make your web product better in every way.